Everything posted by ResidentialBusiness

-

7 Simple Steps to Register a DBA Under Your LLC

Registering a DBA under your LLC is a straightforward process, but it requires careful attention to detail. First, choose a unique name that effectively represents your business. Next, conduct a name search to make sure no conflicts exist. Once you’ve gathered the necessary documentation, complete the filing form and submit it with the required fee. Understanding each step can make a significant difference in your registration process.

Key Takeaways

- Verify that the desired DBA name is available by checking state databases and ensuring no trademark conflicts exist.
- Complete the Assumed Name Certificate form with your LLC’s registered name, desired DBA name, and business type.
- Submit the completed DBA registration form to the Secretary of State, along with the filing fee ($25 in Texas for incorporated entities).
- Set a reminder for the DBA renewal (every ten years in Texas) to stay compliant and avoid penalties.
- If operating in multiple states, register the DBA in each state to adhere to local regulations and maintain legitimacy.

Understand What a DBA Is

A DBA, or “doing business as,” lets your LLC operate under a name different from its registered legal name, which can broaden your brand identity and support your marketing efforts. Also known as an assumed name or trade name, a DBA can help distinguish multiple business operations under one LLC. Adding a DBA to your LLC typically involves checking name availability, completing a registration form, and filing it with the appropriate agency. Requirements vary by state, so if you’re in Utah, for example, verify that you comply with local regulations. Remember that although a DBA provides a business identity, it doesn’t grant exclusive rights to the name.

Conduct a Name Search

Conducting a name search is a crucial step when registering a DBA for your LLC. In Texas, you must verify that your desired name is distinguishable from existing registered entities. Use online resources such as state databases and search engines to check availability thoroughly. Here’s a quick guide:

| Steps | Description |
| --- | --- |
| Start your search | Use state databases and search engines |
| Check trademarks | Verify your name doesn’t infringe on trademarks |
| Request preliminary | Contact via phone or email for initial checks |
| Confirm final checks | Await the Secretary of State’s determination |
| Reflect your business | Choose a name that resonates with your audience |

Adding a DBA to an existing LLC includes searching your state’s records for any overlap; in Utah, for example, that means performing a Utah DBA search.

Complete the DBA Filing Form

Once you’ve confirmed that your desired DBA name is available, it’s time to complete the DBA filing form. Start by gathering the key information: your LLC’s registered name, the desired DBA name, and your business type. The DBA registration form is available online or on paper, depending on your state’s requirements. Fill out the form carefully, making sure all details are accurate to avoid delays. Then submit the form along with the required filing fee, which usually ranges from $10 to $35. Keep a copy of the filed form and any receipts for your records, as these documents are essential for maintaining compliance and managing your DBA.

Gather Required Documentation

Before you begin the DBA registration process, gather the necessary documentation so the filing goes smoothly. Start by determining the appropriate agency for your DBA registration, as this can vary by state, county, or city. You’ll need basic information, including your LLC’s registered name, the desired DBA name, and your business type. Prepare any required forms, such as an Assumed Name Certificate if you’re in Texas.
Be ready to provide identifying details such as your LLC’s principal address and the names of the owners or members. Finally, check the required filing fee, which typically ranges from $10 to $35, depending on your jurisdiction.

Submit Your DBA Registration

Now that you’ve gathered the necessary documentation, it’s time to submit your DBA registration. In Texas, start by completing the Assumed Name Certificate form, making sure you include your LLC’s registered name and the desired DBA.

Required Documentation and Fees

To submit your DBA registration in Texas, you’ll need to gather specific documentation and prepare for the associated fees. First, complete a Texas Assumed Name Certificate, which requires details about your assumed name, the type of business entity, and your contact information. The filing fee is $25 when submitted to the Secretary of State; fees for county-level filings by unincorporated businesses may vary. Before registering, conduct a name availability search to verify your desired DBA isn’t already in use. Depending on local regulations, you might also need to publish a notice of your DBA in an approved newspaper.

Filing Methods Available

Once you’ve gathered the necessary documentation and fees, choose among the filing methods available in Texas. If you’re an incorporated entity, you’ll need to file a Texas Assumed Name Certificate with the Secretary of State, which has a filing fee of $25. Unincorporated businesses file at the county clerk’s office where their principal office is located, and fees may vary by county. You can also file online through SOSDirect, a convenient option for submitting your Assumed Name Certificate. Before filing, check the availability of your desired name to verify that it complies with state regulations and is distinguishable from existing businesses.

Confirmation of Filing Status

After you submit your DBA registration, you’ll receive confirmation of filing from either the Secretary of State or your local county clerk, depending on where you filed. This confirmation typically includes the filing date, the registered name, and a registration number, which you may need for business dealings and banking. Keep a copy of the filing receipt and any confirmation documents for your records. If you don’t receive confirmation within a few weeks, follow up with the appropriate office to verify that your DBA has been processed.

Monitor Renewal Deadlines

To keep your DBA active, monitor its renewal deadline, which occurs every 10 years in Texas. Mark the expiration date on your calendar and consider setting a reminder about 90 days beforehand to avoid any lapse. This proactive approach helps you maintain your rights to the assumed name and avoid potential penalties for operating under an expired DBA.

Track Expiration Dates

An assumed name certificate in Texas needs to be renewed every 10 years. You must file a new Assumed Name Certificate before the previous one expires to maintain your DBA’s validity and keep business operations uninterrupted. The renewal fee is the same as the initial registration fee, which is $25 at the state level. Operating under an unregistered DBA can lead to legal consequences, so track the expiration date with calendar reminders or tracking tools to protect your business’s legal standing and brand identity.

Set Reminders Early

Setting reminders early helps ensure you don’t miss the renewal deadline for your DBA registration.
Because a Texas assumed name certificate must be refiled before the current one expires, set reminders at least three months before the expiration date to avoid losing your legal right to use the name. Digital calendar alerts or task management apps can help you track these deadlines. Managing renewals proactively maintains business continuity and prevents penalties for a lapsed DBA registration.

Maintain Compliance With State Regulations

Maintaining compliance with state regulations is vital for your LLC, especially when registering a Doing Business As (DBA) name. In Texas, you must file an Assumed Name Certificate with the Secretary of State if your business is incorporated, or with the county clerk if unincorporated. The state-level registration costs $25 and needs renewal every ten years to remain compliant. Before filing, check name availability in state databases to avoid conflicts with existing names. Failing to register a DBA can lead to serious penalties, including fines or jail time for intentional noncompliance. If you operate in other states, register your DBA there too, as local laws vary.

Frequently Asked Questions

How Do I Set up a DBA Under My LLC?

To set up a DBA under your LLC, start by checking name availability through your state’s agency. Once you confirm your desired name is free, complete the DBA filing form with details about your LLC and the chosen name. Pay the registration fee, which varies by state, and keep track of renewal deadlines, as most DBAs require renewal every few years. Finally, retain your DBA registration receipt for your records.

What Comes First, DBA or LLC?

You should establish your LLC before registering a DBA.
The LLC is the legal entity that protects your personal assets, whereas a DBA allows you to operate under a different name. By forming the LLC first, you stay compliant with state regulations and maintain liability protection. Once your LLC is in place, you can file for a DBA, which gives you flexibility for branding without altering the legal structure of your business.

Is a DBA Covered Under an LLC?

A DBA isn’t a separate legal entity; it operates under your LLC’s umbrella. This means your LLC remains responsible for all actions taken under the DBA. Although a DBA can improve your business’s branding, it doesn’t provide trademark protection unless you register the name separately. You can register multiple DBAs for different business ventures, but remember that Texas requires you to register your DBA, and failing to do so can bring penalties and fines.

Why Add DBA to LLC?

Adding a DBA to your LLC improves your business’s branding and market presence. It allows you to operate under a more appealing name, which can attract more customers. You can also diversify into different product lines without creating separate legal entities. This flexibility helps you adapt to market changes or rebrand as needed. A DBA can also boost your visibility in directories, potentially increasing customer engagement and interest in your offerings.

Conclusion

Registering a DBA under your LLC is a straightforward process that can improve your business identity. By following the steps outlined (choosing a unique name, conducting a name search, and submitting the necessary forms), you stay compliant with state regulations. Don’t forget to monitor renewal deadlines, which fall every ten years in Texas, to keep your registration active. By maintaining these practices, you’ll position your LLC for growth and avoid potential legal issues.
This article, "7 Simple Steps to Register a DBA Under Your LLC" was first published on Small Business Trends


-

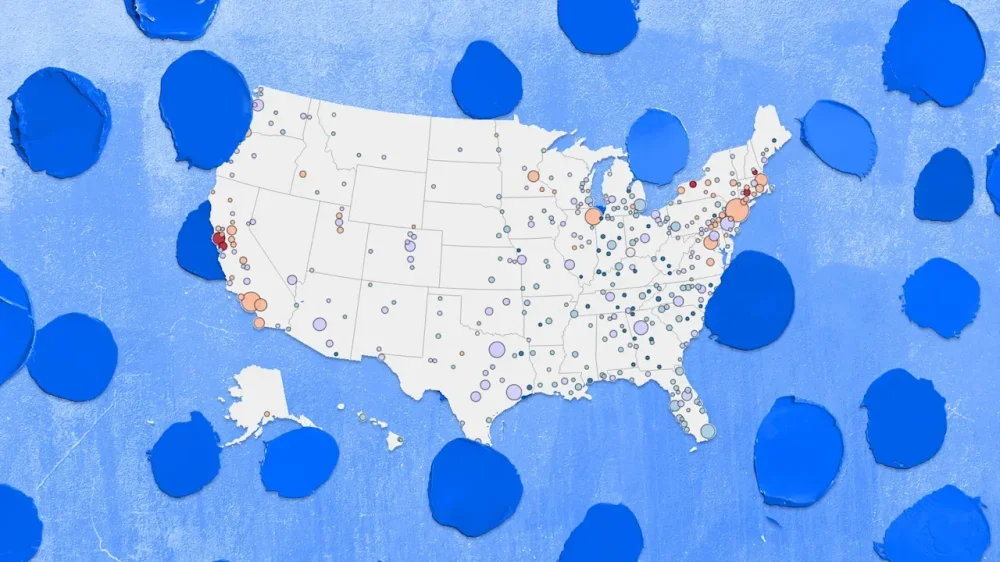

What Is a Community Engagement Platform and Why Is It Necessary?

A community engagement platform is a specialized tool that connects local governments with residents, enhancing public participation in governance. These platforms foster transparency and collaboration and help ensure that diverse voices are represented. They streamline feedback processes and use interactive features to empower communities. Understanding how these platforms operate and what benefits they offer shows why they play a significant role in modern governance.

Key Takeaways

- A community engagement platform is software designed to enhance public participation between residents and local governments through interactive tools and data analytics.
- These platforms improve communication and collaboration by centralizing public interactions and providing real-time updates on projects for transparency.
- They empower community voices, enabling diverse perspectives to be represented and fostering a collaborative environment in local governance.
- Community engagement platforms streamline resource management, reducing time spent on administrative tasks and maximizing return on investment for engagement efforts.
- By supporting compliance with legislative requirements, these platforms maintain accurate records of community interactions and promote accountability in public participation.

Definition of a Community Engagement Platform

A community engagement platform is a specialized software solution that local governments use to improve public participation. This citizen engagement software improves communication and collaboration between residents and their local governments, allowing for more effective feedback collection. Typically, these platforms include interactive surveys, map-based engagement features, and data analytics tools that provide actionable insights for decision-making.
By centralizing public interactions, civic engagement software streamlines the management of communication and increases transparency in governance. Local governments can gather geolocated data from citizens, which is crucial for urban planning and for addressing community needs effectively. As a comprehensive tool, the community engagement platform supports various departments in engaging with residents, promoting a more inclusive and responsive governance model. Ultimately, these platforms help ensure that citizens’ voices are heard, making local governments more accountable to their communities.

Importance of Community Engagement

Community engagement plays an essential role in shaping effective governance and fostering a sense of community. By using public engagement software and community engagement tools, organizations can improve transparency and trust in decision-making processes. Engaging with residents enables you to gather valuable insights, leading to evidence-based decisions that address real needs.

| Benefits of Community Engagement | Tools to Facilitate Engagement | Outcomes of Effective Engagement |
| --- | --- | --- |
| Improves transparency | Public engagement software | Builds trust and credibility |
| Gathers diverse perspectives | Community engagement tools | Informed decision-making |
| Empowers underrepresented groups | Digital platforms | Strengthens community ties |

An inclusive approach supports equitable representation, allowing all voices to be heard. When residents feel a sense of ownership, they’re more likely to participate actively, which strengthens community bonds and governance.

Key Features of Community Engagement Platforms

When exploring community engagement platforms, you’ll find that a user-friendly interface is crucial for encouraging participation. These platforms often feature multi-channel communication tools, allowing you to reach community members through various methods like surveys, social media, and forums.

User-Friendly Interface

A user-friendly interface is crucial for community engagement platforms, as it directly influences how easily both administrators and members navigate the system. A clean design and adherence to accessibility standards minimize the learning curve, allowing you to focus on community-building activities rather than technical challenges. Such an interface promotes inclusivity by accommodating users with different learning styles and preferences, which enhances overall engagement. Reduced training time also lets your organization allocate more resources to meaningful community interactions and initiatives. Engaging experiences through a well-designed interface cultivate trust and encourage ongoing participation, leading to an active community where everyone feels valued and involved.

Multi-Channel Communication Tools

Effective communication is essential for promoting engagement within any community, and multi-channel communication tools significantly strengthen this aspect of community engagement platforms. These tools allow for seamless interaction across channels such as social media, email, and SMS. This integration improves outreach and broadens participation in surveys and discussion forums. Here’s how multi-channel communication tools function:

| Feature | Benefit |
| --- | --- |
| Timely Notifications | Keeps community members informed |
| Diverse Communication | Caters to different user preferences |
| Cohesive Messaging | Strengthens relationships with residents |

Benefits of Using a Community Engagement Platform

Using a community engagement platform can considerably improve community participation by providing various tools that allow every resident to share their opinions and concerns. It also streamlines communication processes, making it easier for local governments to manage feedback and respond quickly to community needs.
This efficiency not only encourages a more inclusive environment but also strengthens the relationship between citizens and their local government.

Enhanced Community Participation

Community engagement platforms bolster participation by breaking down geographical barriers and allowing individuals to contribute their voices anytime, anywhere. These platforms create an inclusive environment where everyone can engage in decision-making processes. Here are some benefits you can expect:

- Democratized Participation: Anyone can share their views, regardless of location.
- Diverse Engagement Tools: Use interactive surveys, polls, and discussion forums to gather input.
- AI Features: Leverage sentiment analysis and predictive analytics for informed decisions.
- User-Friendly Interface: Enjoy seamless navigation and multi-channel integration with social media.
- Centralized Feedback: Streamline communication and boost transparency within your community.

Streamlined Communication Processes

When local governments implement a community engagement platform, they considerably improve communication processes by centralizing interactions across various channels. This centralization reduces response times and administrative effort, making feedback easier to manage. The user-friendly interface increases transparency by keeping residents informed about project updates and timelines, which helps build trust. Advanced AI features enable real-time data processing and sentiment analysis, allowing quicker collection and interpretation of community feedback so decisions can be made more effectively. With multi-channel integration, residents receive timely notifications through their preferred communication methods, which boosts participation. These platforms also facilitate efficient documentation and reporting of interactions, supporting accountability and compliance with engagement laws.
Enhancing Communication and Collaboration How can local governments and residents effectively bridge communication gaps? A Community Engagement Platform serves as an essential tool for enhancing communication and collaboration. By acting as a central hub, it promotes consistent messaging and proactive interactions between local authorities and community members. Key features include: Real-time updates on project timelines, promoting transparency and trust. Diverse engagement methods like surveys and discussion forums that guarantee various voices are heard. Multi-channel integration, allowing outreach through social media, email, and SMS for broader community involvement. Advanced AI capabilities for sentiment analysis, helping to identify trends and process feedback efficiently. Streamlined communication efforts, making it easier for residents to access information and participate in decisions. These elements collectively encourage ongoing dialogue and collaboration, ultimately enhancing the relationship between local governments and the community they serve. Empowering Community Voices Empowering voices within the community is a vital aspect of nurturing a collaborative environment where all residents have a say in local governance. Community engagement platforms play a significant role in this by removing logistical barriers. Many individuals can’t attend in-person meetings because of work, transportation, or childcare issues. By enabling digital participation, these platforms have seen a 47% increase in engagement, allowing more residents to share their perspectives. Utilizing multimedia and interactive tools guarantees diverse voices are represented, promoting inclusivity. These platforms provide a secure space for community feedback, empowering residents to influence local decisions and feel connected to the civic process. 
Engaging through digital channels also allows for real-time communication and updates, ensuring that community voices are heard and valued throughout project development. Ultimately, this empowerment strengthens community ties and improves the overall effectiveness of local governance. Improving Decision-Making Processes Improving decision-making processes involves gathering better community insights that reflect diverse perspectives. By integrating feedback from various groups, you ensure that decisions are well-informed and representative of the community’s needs. Trust and transparency play key roles in this process, as they cultivate stronger connections and facilitate more effective collaboration among community members. Enhanced Community Insights As community engagement platforms evolve, they play a crucial role in improving decision-making processes by gathering a wide range of perspectives from community members. By leveraging these platforms, you can gain valuable insights that lead to more informed decisions. Here are some key benefits: Diverse Perspectives: Collecting input from various community members ensures a thorough understanding of issues. Evidence-Based Decisions: Real problems and challenges are identified, supporting data-driven choices. AI Integration: Advanced features enable sentiment analysis and trend identification for deeper community awareness. Inclusivity: Multimedia tools accommodate different learning styles, leading to broader data representation. Transparency: Real-time updates improve communication and build trust among community members. Using these insights ultimately strengthens your organization’s decision-making capabilities. Diverse Perspectives Integration When community engagement platforms effectively integrate diverse perspectives, they improve decision-making processes by ensuring that all voices are heard and considered.
These platforms gather input from various community members, including those often overlooked, breaking down barriers that silence them. By utilizing multimedia and interactive tools, they accommodate different learning styles, enhancing inclusivity and allowing meaningful contributions. Data collection methods, such as surveys and polls, yield representative results that deepen comprehension of community intricacies, leading to more informed decisions. Engaging a wider array of voices not just enriches discussions but additionally nurtures a sense of empowerment among residents. This engagement guarantees that decisions reflect a broader range of needs and opinions, in the end resulting in more confident and equitable outcomes. Trust and Transparency Building Trust and transparency are essential pillars in community engagement platforms, as they directly influence the effectiveness of decision-making processes. By improving transparency, these platforms provide real-time updates, nurturing trust in government actions. They likewise collect and share community feedback, ensuring diverse voices are heard, which leads to informed decisions. Key benefits include: Real-time project updates that build trust Inclusion of diverse community feedback Data-driven insights for addressing local challenges Transparent communication that combats misinformation Continuous engagement that adapts to community needs Ultimately, these elements streamline accountability and improve decision-making, allowing organizations to align their goals with public sentiment, which bolsters community support for various initiatives. Facilitating Inclusivity and Representation Community engagement platforms play a vital role in facilitating inclusivity and representation by allowing a broader spectrum of citizens to contribute their voices to decision-making processes. These platforms enable participation from diverse groups, ensuring that a variety of opinions are heard and considered. 
By accommodating different learning styles and preferences, they prioritize accessibility, allowing individuals from various backgrounds to engage meaningfully. Digital platforms also eliminate logistical barriers like transportation and childcare, making it easier for underheard groups to participate in community discussions. In addition, the inclusion of multilingual features allows participants to engage in their native languages, enhancing inclusivity and ensuring language isn’t a barrier to participation. By effectively reaching hard-to-access populations, community engagement platforms cultivate a more equitable representation in public discourse and decision-making, which is fundamental for building a community that truly reflects its members’ needs and perspectives. Supporting Social Research and Community Understanding Community engagement platforms play an essential role in identifying the real issues facing your community by collecting valuable data and insights. These tools improve your knowledge base, enabling a better comprehension of complex social dynamics and supporting evidence-based decisions. Identifying Community Issues How can local governments effectively pinpoint the pressing issues faced by residents? By utilizing community engagement platforms, they can gather valuable data and insights directly from the people. These platforms allow you to share your perspectives through surveys and feedback tools, which helps identify real problems and comprehend complex community issues. Engaging with residents reduces uninformed opinions and promotes accurate information dissemination. 
Key benefits include: Access to diverse viewpoints from various community members Improved awareness of ongoing projects and initiatives Better comprehension of the needs of underheard groups Evidence-based decision-making supported by reliable data Strengthened community ties through open communication Enhancing Knowledge Base Engagement plays a fundamental role in enhancing the knowledge base necessary for grasping local social dynamics. Community engagement platforms facilitate the collection of targeted feedback through surveys and discussions, offering valuable insights that drive social research on pressing issues. By gathering diverse perspectives, these platforms help identify real problems within the community, leading to a clearer comprehension of complex dynamics. Engaging citizens digitally increases transparency and allows for thorough information sharing, reducing uninformed public opinion. Furthermore, by educating the community about ongoing projects, these platforms encourage broader audience involvement, enhancing overall knowledge and awareness. In the end, this process supports a more informed community, empowering individuals to participate actively in local discussions and initiatives. Supporting Evidence-Based Decisions Though gathering data from various sources can be time-consuming, utilizing community engagement platforms greatly streamlines the process of supporting evidence-based decisions. These platforms improve social research by providing essential insights into community issues. By asking targeted questions, they help identify real problems and nurture a deeper comprehension of local intricacies. 
Here are a few benefits: Promotes transparency and openness about community projects Reduces uninformed public opinion through accessible information Gathers diverse perspectives to improve awareness on critical issues Engages a broader audience, ensuring representative insights Supports informed decision-making for more effective community initiatives With these tools, you can easily collect and analyze data, leading to better-informed decisions that truly reflect community needs. Compliance With Legislative Requirements When organizations prioritize compliance with legislative requirements, they often turn to community engagement platforms to streamline their efforts. These platforms help document engagement activities, ensuring transparency in public projects and making it easier to meet specific legal mandates. By reducing administrative burdens on local governments, these tools allow for a more efficient compliance process. The historical data collected through engagement platforms can serve as essential evidence for compliance, demonstrating that organizations adhere to community engagement laws. By maintaining accurate records of community interactions, these platforms improve accountability and build trust between citizens and local authorities. They additionally help organizations avoid potential legal issues related to public participation, as all engagement activities are properly documented and readily accessible. In the end, utilizing a community engagement platform not just supports compliance but also cultivates a more engaged and informed community. Maximizing Resource Management and Cost-Effectiveness To maximize resource management and cost-effectiveness, organizations can leverage community engagement platforms that simplify and streamline public participation processes. 
By adopting these platforms, you can substantially reduce the time and effort required for in-person events, allowing for more engagement activities without stretching financial resources. This streamlined approach improves productivity and enables you to host multiple projects simultaneously, reducing financial unpredictability. Automate tasks associated with public participation. Save approximately 55% of time on analysis and reporting. Allocate staffing and budget resources more efficiently. Facilitate unlimited projects with an initial investment. Maximize return on investment for community engagement efforts. Real-Time Communication and Updates Real-time communication and updates are vital components of effective community engagement, as they keep community members informed about project developments and important milestones. Community engagement platforms provide timely updates, ensuring everyone is aware of significant changes. By opting in for notifications, users can maintain consistent communication, which keeps them engaged in ongoing discussions. These platforms enable organizations to respond swiftly to community feedback, nurturing trust and transparency in decision-making processes. When you receive current and relevant information, it reduces the spread of misinformation and clarifies misconceptions about projects or initiatives. Moreover, regular updates and reminders about upcoming events or deadlines improve participation rates, encouraging active involvement from community members. This flow of information not only keeps everyone informed but also strengthens the overall connection within the community, making it easier for you to contribute meaningfully. To summarize, real-time communication is fundamental for effective community engagement. Hybrid Engagement Strategies Hybrid engagement strategies represent a potent approach to community involvement, seamlessly blending traditional face-to-face interactions with digital platforms. 
This combination allows for broader participation and accommodates diverse community needs:
Hybrid strategies reach individuals who face logistical barriers, like work or childcare.
Hybrid strategies promote inclusivity by facilitating varied voices and perspectives.
Digital tools improve in-person events, ensuring accessibility and flexibility.
You can engage at your convenience, from various locations.
They can notably boost engagement rates, as shown by a 47% increase in digital engagement among consumers with businesses.

Future Trends in Community Engagement Platforms

As community engagement platforms evolve, they’re increasingly integrating advanced AI features that improve how data is processed and interpreted. These innovations allow you to gain deeper insights into community feedback and sentiment. Moreover, the shift toward hybrid engagement strategies is becoming more common, blending digital and traditional methods to ensure everyone can participate. Future platforms will likely improve their smart analytics and automation capabilities, streamlining feedback processing and reducing the time spent on data analysis. This advancement will help you respond more quickly to community needs. With the rise of misinformation, platforms are also focusing on delivering accurate information, cultivating trust between local governments and residents. Finally, expect expanded multi-channel integration, allowing seamless communication across various channels.
Trend | Description | Impact on Engagement
Advanced AI Integration | Improved data processing and insights | Better comprehension of community sentiment
Hybrid Engagement | Combination of digital and traditional methods | Increased accessibility
Misinformation Management | Providing accurate, thorough information | Improved transparency and trust
Smarter Analytics | Streamlined feedback processing | Faster responsiveness
Multi-channel Integration | Seamless communication across platforms | Maximized outreach and participation

Frequently Asked Questions

What Is a Community Engagement Platform?
A community engagement platform is a software tool that helps you connect with local officials and fellow residents. It streamlines communication by offering interactive surveys, discussion boards, and project pages, making it easier for you to share your feedback and ideas. By centralizing these interactions, it improves public participation in local governance. Furthermore, it collects valuable data that can inform urban planning and improve decision-making processes, ensuring your voice is heard in community matters.

What Is Community Engagement and Why Is It Important?
Community engagement is the process of involving citizens in decision-making to address local issues. It’s important as it nurtures collaboration and ownership, enhancing transparency and trust in governance. By including diverse voices, especially from underrepresented groups, it guarantees all perspectives are considered. This leads to more informed decisions, stronger relationships between citizens and local governments, and increased satisfaction with initiatives.

What Are the 3 C’s of Community Engagement?
The 3 C’s of community engagement are Communication, Collaboration, and Community. Communication involves sharing timely updates, ensuring transparency to build trust. Collaboration encourages diverse voices, allowing for collective problem-solving and stronger community ties.
Finally, engaging the Community empowers residents to influence local decisions, nurturing a sense of ownership.

What Is the Purpose of a Community Engagement Plan?
A community engagement plan’s purpose is to actively involve residents in decision-making processes, ensuring their input shapes local governance. It improves transparency by clearly communicating goals and timelines, nurturing trust between community members and authorities. By identifying community needs through structured engagement, it leads to informed decisions. The plan promotes inclusivity, using various methods to cater to diverse demographics, and strengthens accountability by documenting feedback, supporting responsive policy development.

Conclusion

In conclusion, a community engagement platform is essential for promoting effective communication between local governments and residents. By encouraging transparency and collaboration, these platforms empower individuals to participate in governance actively. Key features, such as real-time updates and hybrid engagement strategies, improve the overall experience and guarantee diverse voices are heard. As community needs evolve, these platforms will continue to adapt, shaping the future of public participation and bolstering community ties.

Image via Google Gemini and ArtSmart

This article, "What Is a Community Engagement Platform and Why Is It Necessary?" was first published on Small Business Trends

View the full article

-

How Peter Mandelson’s Jeffrey Epstein links were exposed

Documents disclosed on Friday revealed payments from disgraced financier to former power broker’s husband View the full article

-

WordPress Announces AI Agent Skill For Speeding Up Development via @sejournal, @martinibuster

WordPress announced a new AI agent skill that enables a clear feedback loop for AI, speeding up building and experimenting with WordPress. The post WordPress Announces AI Agent Skill For Speeding Up Development appeared first on Search Engine Journal. View the full article

-

Why a Tennessee proposal to ban sports betting on campus is too little, too late

In the months after a 2018 Supreme Court decision opened the door for states to legalize sports betting within their borders, giddy lawmakers across the country couldn’t move quickly enough. No one wanted to miss out on the billions of dollars in tax revenue that the high court had suddenly placed within their reach—or, worse yet, to watch that easy money go to neighboring states whose leaders had the presence of mind to move first. Within a month of the decision, Delaware Gov. John Carney bet $10 on a Phillies game—the first legal single-game sports bet outside of Nevada. Many states were more concerned with getting sportsbooks online in time for a big-ticket event (the Super Bowl, March Madness) than building an infrastructure to regulate the multibillion-dollar industry—a dynamic that journalist Danny Funt details in his book Everybody Loses: The Tumultuous Rise of American Sports Gambling. Lawmakers in some states even passed laws authorizing sports gambling before the Supreme Court decided Murphy v. NCAA, so they’d be ready to jump after a favorable ruling. Eight years later, it’s clear that this gold rush has had (and I am being diplomatic here) some negative consequences. Sports media outlets have become hopelessly intertwined with gambling behemoths eager to turn more fans into paying customers. Athletes who do not perform to bettors’ satisfaction are often subjected to racist abuse, death threats, or some combination thereof. And gambling addiction has spiked, thanks to the proliferation of app-based mobile betting that allows users to get their fixes anytime, anywhere. A 2025 study found that internet searches for help with gambling addiction increased 23% between 2018 and June 2024, and that they surged more with the arrival of online sportsbooks than they did when brick-and-mortar casinos opened. 
Over the last few years, a series of high-profile scandals have demonstrated the extent to which legalization has warped the actual games on which people are betting all this money. In 2024, the NBA issued a lifetime ban to Toronto Raptors forward Jontay Porter for his part in a conspiracy in which he pulled himself early from games to ensure that “under” bets on his performance would hit. Miami Heat guard Terry Rozier was implicated in a similar scheme last year, as were two Cleveland Guardians pitchers who were charged with rigging ball-or-strike bets on specific pitches in exchange for cash bribes. Then, earlier this month, federal prosecutors named 39 players across 17 teams who were allegedly part of a point-shaving ring that fixed men’s college basketball games during the 2023-24 and 2024-25 seasons. According to the indictment, bettors offered players bribes in the low five figures to underperform in agreed-upon games, and then wagered heavily on outcomes they had good reason to believe would go their way. Leagues and sportsbooks typically frame corruption as rare and make examples of those who are involved in it. But the mere knowledge that scandals like this exist can throw the entire enterprise into doubt: If you are a gambler who is angry about a bad bet, it’s very easy to wonder if you were cheated by perpetrators who were just lucky enough not to get caught. A new bill in Tennessee, where residents wagered $1.3 billion on sports over a two-month period last year, is maybe the most significant effort yet to retreat from the status quo. Introduced by a pair of Democratic lawmakers, state Rep. John Ray Clemmons and state Sen. Jeff Yarbro, the proposal would ban state-licensed sportsbooks from taking bets from people who are on the campuses of public colleges and universities, as well as from people at venues where those schools’ teams are playing games. 
Sportsbooks use the geolocation capabilities of smartphones to determine app users’ eligibility, so logistically speaking, rejecting bets from phones that are located within newly designated restricted areas would not be especially complicated. Colleges and universities would also be required to block people from accessing online sportsbooks while connected to campus networks. A handful of states have previously imposed modest limits on betting on college sports—for example, banning proposition bets on college athletes, or prohibiting wagering on in-state school teams. The scope of Clemmons and Yarbro’s proposal is broader: It would prevent people on campus from placing any type of sports bet, college or otherwise. The rationales for targeting restrictions at college students are straightforward: Gambling addiction has hit young people hard, and young men the hardest. A Pew Research Center study last year found that 31% of adults between ages 18 and 29 had bet on sports in the previous year—the most of any age group. A 2023 survey commissioned by the NCAA found that more than a quarter of college-age adults had placed a bet online, and overall, 58% had bet in some form. In 2024, a Pennsylvania addiction therapist told 60 Minutes about a troubling new archetype of patient he’d encountered in recent years: college students who gamble away their federal student loan money. Clemmons echoed many of these concerns in an email to me, explaining that he was motivated by rising addiction rates among young people, sportsbooks’ efforts to target young people with advertising, the ongoing harassment of student-athletes, and “a desire to prevent students from losing their parents’ hard-earned money to sportsbooks.” If you are a policymaker looking to enact more robust protections for those whom the data shows are most vulnerable, “the people who are physically present on a college campus” is a pretty good place to start. 
At the same time, the bill’s parameters demonstrate the challenges inherent in trying to provide oversight to an industry that has, to date, been allowed to set a new land-speed record every year. Bettors have long demonstrated their willingness to move around in order to place bets. In his book, Funt writes that before New York authorized sports betting, New York City residents would simply walk across the George Washington Bridge until their phones registered their presence in New Jersey, where betting was legal. Given what we know about how addiction works and how prevalent it is, I’m not sure that requiring college students to cross the street in order to place a wager is going to be, in the scheme of things, a significant deterrent. It’s also worth contemplating all the people and behaviors to whom this law would not apply. It doesn’t affect private schools, which means that while students at the University of Tennessee might be temporarily locked out of their FanDuel accounts, students at Vanderbilt might not even realize if and when a ban takes effect. It doesn’t affect private property, which means that students who live off campus would be free to continue wagering from the comfort of their couches. It doesn’t affect access to federally regulated prediction sites like Kalshi, which function as backdoor sportsbooks accessible to anyone 18 and older. Since Tennessee already prohibits anyone under 21 from betting with state-licensed sportsbooks, the people who would be barred from wagering under this law and who are not barred from wagering under existing law are, basically, fans at certain sporting events, and college juniors and seniors at public schools, if they happen to be on school property at that moment. 
By email, Clemmons noted the legislature’s limited jurisdiction over nonpublic property, and he asserted that geo-targeting campuses and sports venues “seems the most effective, legal way to accomplish our primary aims.” In response to my question about the merits of, for example, raising the minimum betting age or barring college students from betting regardless of their physical location, Clemmons said that if they pass this law and determine that “more action is necessary,” they will “certainly look to have those discussions.” I don’t mean to suggest that lawmakers considering responses like this one to the various crises before them are falling down on the job. When there is this much evidence over this many years that the post-Murphy free-for-all is ruining this many lives, I would prefer people in power do what they can to mitigate the harm rather than shrug their shoulders and do nothing. I’m simply saying that at this point, eight years after the Supreme Court empowered the gambling industry to begin swallowing sports whole, it is going to be really, really challenging for lawmakers, in Tennessee or anywhere else, to start putting the proverbial toothpaste back in the tube. This is largely the result of the states’ own choices: They could have proceeded more cautiously after Murphy, by more aggressively limiting the pools of eligible bettors, or imposing more onerous tobacco-style restrictions on sportsbook advertising, or simply deciding to wait a little while before putting virtual casinos in millions of pockets. But they wanted the money that would come with acting fast. Now, they’re paying the true price. View the full article

-

Busy Season 2026: Chaos Looms as DOGE Cuts and OBBBA Changes Collide

Downsizing, backlogs, and confusion become national policy. By CPA Trendlines View the full article

-

7 Essential Feedback Survey Questions for Better Insights

Comprehending customer feedback is vital for any business looking to improve its offerings. By asking the right questions, you can gain insights into what drives purchases, the value customers perceive, and how they experience your product. These seven fundamental survey questions can clarify customer goals, identify barriers, and assess satisfaction, in the end guiding your strategies. Exploring these questions will help you improve customer experiences and drive growth, but what insights will you uncover? Key Takeaways Ask about customer goals to understand what motivates their purchasing decisions and improve offerings. Include questions on pricing perception to gauge sensitivity and fair pricing expectations among customers. Gather feedback on product features to identify strengths, weaknesses, and desired improvements for better satisfaction. Use NPS questions to measure customer loyalty and likelihood of recommending your brand to others. Collect demographic insights to tailor marketing strategies and enhance customer experiences based on specific segments. Understanding Customer Goals Grasping customer goals is vital for any business aiming to improve its offerings and boost user satisfaction. By asking effective feedback questions, like “What is your main goal for using this product?” you can uncover valuable insights into customer motivations. This comprehension allows you to prioritize feature development that aligns with what customers want to achieve, driving user engagement. Consider incorporating employee survey questions and manager feedback survey questions to gather insights from your team as well. These employee feedback surveys can highlight challenges customers face in reaching their goals, helping you identify pain points and refine your offerings. Regularly evaluating customer goals through well-structured company survey questions nurtures a continuous improvement cycle, ensuring your products remain relevant. 
For employee survey feedback examples, focus on questions that encourage open dialogue about how to better support user success, aligning your business strategies with customer needs. Identifying Barriers to Purchase When you look to improve sales, identifying barriers to purchase is crucial. Comprehending your customers’ motivations and how they perceive pricing can uncover key insights that drive their purchasing decisions. Purchase Motivation Assessment How can comprehension of the barriers to purchase improve your business strategy? Identifying what’s holding customers back can greatly improve your approach. By using effective feedback survey questions, you can gather insights into customer purchase motivations and common objections. For instance, asking about specific problems they aimed to solve can clarify their needs. Moreover, competitor analysis feedback helps you understand why potential customers may prefer alternatives and what features they desire. Incorporating employee feedback questions can likewise reveal insights, ensuring your team aligns with customer expectations. Utilize employee survey questions examples to refine your strategies further, evolving your offerings to address these barriers and improve customer satisfaction. In the end, addressing these insights can drive improved purchasing decisions. Pricing Perception Insights Why does pricing perception hold such a significant influence over consumer purchasing decisions? Comprehending pricing perception insights is crucial, as 60% of consumers prioritize price when deciding. Utilizing feedback survey questions about price sensitivity can help identify specific price points where products feel too expensive. Collecting insights on competitors’ pricing reveals how your offerings stack up, allowing you to address barriers that may deter purchases. Open-ended questions about fair pricing can clarify customer expectations and their perceived value of your products. 
By addressing these pricing concerns, you can potentially boost conversion rates, since 70% of customers are more inclined to buy when prices align with their perceived value. This strategic approach guarantees your pricing resonates better with your target audience. Assessing Customer Satisfaction Evaluating customer satisfaction is vital for businesses aiming to understand their clients’ experiences and improve overall service quality. Customer satisfaction surveys often include questions that assess the overall experience, ease of use, and likelihood of repeat purchases. One effective metric for this is the Customer Satisfaction Score (CSAT), which asks respondents to rate their satisfaction on a scale of 1-10. These surveys can yield actionable insights about service performance, such as problem-solving effectiveness, enabling targeted improvements. Research indicates that companies actively gathering and acting on customer satisfaction feedback can experience up to a 12% increase in revenue because of improved customer retention and loyalty. Regularly measuring customer satisfaction helps you identify trends over time, allowing you to track improvements and adjust strategies based on real-time feedback. This proactive approach nurtures a deeper connection with customers, ensuring they feel valued and understood. Gathering Feedback on Product Features Gathering feedback on product features is essential for comprehending what you value most and how those features impact your experience. By asking targeted questions, you can identify key attributes, desired improvements, and any challenges you face during your use of the product. This information not just guides future development but additionally guarantees that enhancements align with your needs and expectations. Key Product Attributes To effectively improve your product, obtaining feedback on key attributes is essential, as it can guide your development efforts. 
Start by asking questions like, “What features do you find most useful?” to identify strengths that should be highlighted in your marketing strategies. Also ask about missing features with questions such as, “What features or services do you wish we offered?” This can help shape your product roadmap. Use rating scales to evaluate customer satisfaction with specific attributes, providing quantifiable data on performance. Open-ended questions like, “What do you like least about our product?” can reveal pain points affecting user experience. Integrating these insights into employee feedback questions for managers or workshop feedback surveys can improve overall product quality and customer satisfaction.

Desired Feature Enhancements

How can you ensure your product evolves to meet customer expectations? Gathering feedback on desired feature improvements is essential. By using an employee survey questionnaire or staff feedback questions, you can discover what features customers wish you offered. Open-ended questions like, “What services do you wish we provided?” yield valuable insights. Ranking questions help identify which enhancements would most boost user satisfaction. Competitive analysis questions, such as “What features do you find most valuable in competitor products?” can spotlight areas for innovation. Regularly analyzing feedback keeps you responsive to changing preferences, ultimately driving engagement and loyalty. Consider manager feedback survey examples and event survey questions for attendees to gain a thorough view of expectations.

User Experience Challenges

User experience challenges often arise when products fail to meet customer expectations, particularly regarding specific features. To gather feedback on these challenges effectively, consider using targeted questions that explore user experiences.
Here are some crucial areas to focus on:

Identify which features users find most valuable.
Ask about issues encountered with particular features.
Evaluate the ease of use for different functionalities.
Gather insights on how features impact overall satisfaction.

Using a mix of rating scales and open-ended questions can provide valuable insights, similar to employee survey examples or post-event survey question examples. Incorporating manager feedback questions and meeting feedback survey questions can also improve understanding, while workshop evaluation questions can pinpoint usability barriers, guiding prioritized improvements in your product roadmap.

Measuring Loyalty and Recommendation Potential

Measuring customer loyalty and recommendation potential is crucial for businesses aiming to thrive in competitive markets. One effective method is the Net Promoter Score® (NPS), which asks customers how likely they are to recommend your business on a scale from 0 to 10. By analyzing these scores, you can identify promoters and detractors, gaining insights into customer loyalty. Consider including NPS questions in your feedback form, along with post-event survey questions for attendees to measure their satisfaction levels. Employee survey sample questions can also help gauge internal perspectives on customer interactions, and leadership feedback surveys can provide insights into management effectiveness in promoting loyalty. Regularly tracking these metrics allows you to identify trends, implement targeted strategies, and make informed decisions. Remember, customer feedback often reveals areas for improvement, helping you align your services with customer expectations and improve their experience.

Exploring Marketing Channels

As you work to improve your market presence, understanding how customers find your products or services becomes essential for effective marketing strategies.
Surveys can uncover valuable insights about your marketing channels, helping you allocate your advertising budget wisely:

Identify the most effective platforms for lead generation.
Analyze customer responses regarding their first contact with your brand.
Gather perceptions of your marketing messages to inform future campaigns.
Discover barriers to conversion related to your marketing efforts.

Incorporating staff survey questions or an employee questionnaire can also improve awareness of internal perspectives. Consider manager survey questions for employees to evaluate team awareness of marketing strategies. Event survey questions and post-event survey questions can also gauge the effectiveness of corporate events, providing useful meeting and corporate event feedback. This comprehensive approach allows you to refine strategies and boost overall marketing effectiveness.

Collecting Demographic Insights

Understanding how customers interact with your brand is just the beginning; collecting demographic insights can greatly improve your marketing efforts. By segmenting respondents based on characteristics like age, gender, location, and income, you can tailor your strategies to meet specific needs. Incorporating demographic questions into management survey questions and post-event survey questions allows you to correlate demographics with customer preferences. This not only improves product development but also boosts customer satisfaction by offering personalized experiences. When crafting conference feedback or workshop assessment questions, make certain you’re respectful of privacy concerns, keeping questions relevant to the survey’s purpose. Analyzing demographic insights can also help you allocate resources more effectively, focusing on your most valuable customer segments.
Whether you’re using meeting evaluation questions or questions to ask employees for manager feedback, keep in mind that these insights are essential for making informed decisions in your marketing strategy.

Frequently Asked Questions

What Are the Best Survey Questions for Feedback?

To gather effective feedback, start with questions that assess overall satisfaction, like “How satisfied are you with our service on a scale of 1–10?” Follow up with open-ended questions, such as “What do you appreciate most?” to collect qualitative insights. Targeted questions, like “What nearly prevented you from purchasing?” reveal barriers. Metrics like Net Promoter Score help measure loyalty, while asking about desired features guides future improvements. Together, these questions provide thorough insights.

What Are Some Insightful Questions to Ask?

To gain valuable insights, consider asking questions that directly address your customers’ experiences and needs. For example, ask what problems they aimed to solve by choosing your service. To measure satisfaction, use a scale from 1 to 10. Open-ended questions like “What can we improve?” encourage detailed feedback, while asking if they’d recommend you helps assess loyalty. Understanding why they might choose a competitor can also reveal areas for improvement in your offerings.

What Are the Best Questions to Ask for Feedback?

When seeking effective feedback, ask a mix of quantitative and qualitative questions. For instance, request an overall satisfaction rating on a scale from 1 to 10, and include open-ended questions about specific likes and dislikes. Ask about barriers to purchase, such as, “What nearly stopped you?” Additionally, ask what features customers wish were available, and use NPS questions like, “Would you recommend us?” This approach yields valuable insights for improvement.

What Are Good 360 Feedback Questions?

Good 360 feedback questions focus on specific, observable behaviors.
You might ask, “How well does this person communicate with their peers?” or “What’s their approach to teamwork on projects?” Incorporating rating scales, like 1 to 5, can quantify responses, while open-ended questions like, “What’s one area for improvement?” allow for detailed insights. This combination helps identify strengths and areas for growth, ultimately improving overall performance and engagement within the team.

Conclusion

Incorporating these seven crucial feedback survey questions can greatly improve your understanding of customer experiences and preferences. By focusing on motivations, perceived value, and barriers to purchase, you can identify key areas for improvement. Evaluating customer satisfaction and loyalty, along with exploring marketing channels, provides further insights into your audience. In the end, leveraging this feedback will enable you to refine your offerings, boost customer satisfaction, and strengthen your brand’s position in the market.

Image via Google Gemini

This article, "7 Essential Feedback Survey Questions for Better Insights" was first published on Small Business Trends

View the full article

-

Kevin Warsh’s nomination as Fed chair to spark rethink of bank’s role

Donald Trump’s pick has called for a sweeping overhaul

View the full article

-

Businesses fear blowback from Saudi-UAE rift

Emirates workers have had visas to the kingdom rejected since tensions erupted between the Gulf powerhouses

View the full article

-

Inside Apple’s Bad Bunny Super Bowl halftime show strategy