Everything posted by ResidentialBusiness

-

CFPB blocks state enforcement of federal consumer laws

The Consumer Financial Protection Bureau has withdrawn guidance that allowed states to bring enforcement actions broadly under federal consumer protection laws. View the full article

-

Supreme Court lets Trump end deportation protections for Venezuelans

The U.S. Supreme Court let Donald Trump’s administration on Monday strip temporary protected status from Venezuelans living in the United States that had been granted under his predecessor Joe Biden, as the Republican president moves to ramp up deportations as part of his hardline approach to immigration. The court granted the Justice Department’s request to lift San Francisco-based U.S. District Judge Edward Chen’s order that had halted Homeland Security Secretary Kristi Noem’s decision to terminate the deportation protection conferred to Venezuelans under the temporary protected status, or TPS, program. The court’s brief order was unsigned, as is typical when the justices act on an emergency request. The court, however, left open the door to any challenges by migrants if the administration seeks to invalidate work permits or other TPS-related documents that were issued to expire in October 2026, which is the end of the TPS period extended by Biden. The Department of Homeland Security has said about 348,202 Venezuelans were registered under Biden’s 2023 TPS designation. Liberal Justice Ketanji Brown Jackson was the sole member of the court to publicly dissent from the decision. The action came in a legal challenge by plaintiffs including some of the TPS recipients and the National TPS Alliance advocacy group, who said Venezuela remains an unsafe country. Trump, who returned to the presidency in January, has pledged to deport record numbers of migrants in the United States illegally and has taken actions to strip certain migrants of temporary legal protections, expanding the pool of possible deportees. The TPS program is a humanitarian designation under U.S. law for countries stricken by war, natural disaster or other catastrophe, giving recipients living in the United States deportation protection and access to work permits. The designation can be renewed by the U.S. homeland security secretary. The U.S.
government under Biden, a Democrat, twice designated Venezuela for TPS, in 2021 and 2023. In January, days before Trump returned to office, the Biden administration announced an extension of the programs to October 2026. Noem, a Trump appointee, rescinded the extension and moved to end the TPS designation for a subset of Venezuelans who benefited from the 2023 designation. Chen ruled that Noem violated a federal law that governs the actions of agencies. The judge also said the revocation of the TPS status appeared to have been predicated on “negative stereotypes” by insinuating the Venezuelan migrants were criminals. “Generalization of criminality to the Venezuelan TPS population as a whole is baseless and smacks of racism predicated on generalized false stereotypes,” Chen wrote, adding that Venezuelan TPS holders were more likely to hold bachelor’s degrees than American citizens and less likely to commit crimes than the general U.S. population. The San Francisco-based 9th U.S. Circuit Court of Appeals on April 18 declined the administration’s request to pause the judge’s order. Justice Department lawyers in their Supreme Court filing said Chen had “wrested control of the nation’s immigration policy” away from the government’s executive branch, headed by Trump. “The court’s order contravenes fundamental Executive Branch prerogatives and indefinitely delays sensitive policy decisions in an area of immigration policy that Congress recognized must be flexible, fast-paced, and discretionary,” they wrote.
The plaintiffs told the Supreme Court that granting the administration’s request “would strip work authorization from nearly 350,000 people living in the U.S., expose them to deportation to an unsafe country and cost billions in economic losses nationwide.” The State Department currently warns against travel to Venezuela “due to the high risk of wrongful detentions, terrorism, kidnapping, the arbitrary enforcement of local laws, crime, civil unrest, poor health infrastructure.” The Trump administration in April also terminated TPS for thousands of Afghans and Cameroonians in the United States. Those actions are not part of the current case. In a separate case on Friday, the Supreme Court kept in place its block on Trump’s deportations of Venezuelan migrants under a 1798 law historically used only in wartime, faulting his administration for seeking to remove them without adequate legal process. —Andrew Chung, Reuters View the full article

-

I Love These Sony ANC Headphones, and They Just Dropped in Price by $150

We may earn a commission from links on this page. Deal pricing and availability subject to change after time of publication. Audio enthusiasts need no introduction to Sony's 1000X series. They've been around since 2016, improving on the previous iteration to eventually land on the latest Sony WH-1000XM6, the best over-ear headphones for ANC your money can buy in 2025. As you can imagine, that means the still excellent 1000XM5 is dropping in price. If you're willing to splurge, it's a good time to do so during Amazon's Memorial Day Sale. The WH-1000XM5 are currently $298 (originally $399.99), matching Black Friday and Christmas prices, according to price-tracking tools. I have been a loyal customer of the 1000X line for many years as my go-to headphones for most activities. The WH-1000XM5 came out in May of 2022 to an "outstanding" review from PCMag for their top-notch audio quality, but also for their exceptional audio when using their best-in-class active noise cancelling (ANC) (most headphones lose their audio quality when using ANC). The headphones are also well-designed to be comfortable for long sessions. The ear controls use tapping and swiping, which aren't my favorite, but it's what all the modern headphones are moving toward. There's an app that comes with the headphones that lets you adjust your EQ settings to your liking, including what the swiping and tapping functions do on your headphones. A great touch on these headphones that is often neglected is a stereo 3.5mm connection, perfect for those who want to use a wired cable without worrying about battery life. Speaking of battery life, Sony says you can expect about 30 hours, but it will vary depending on your usage of ANC. They are compatible with AAC, LDAC and SBC codecs and have multipoint connection (you can pair them with more than one device at the same time).
If you're looking for excellent ANC headphones with great audio quality at a good price, the WH-1000XM5 are a great deal right now. View the full article

-

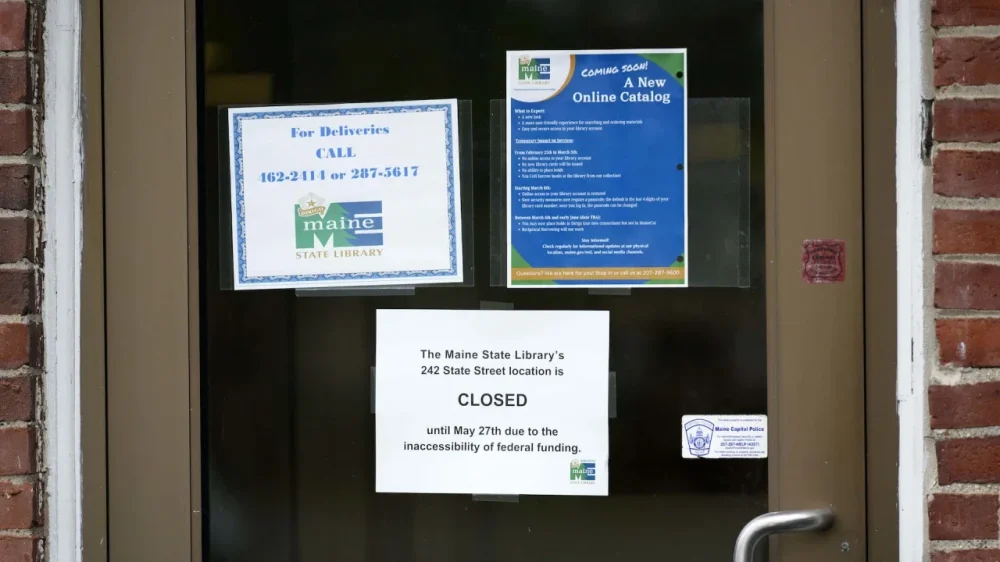

What libraries are getting rid of after Trump’s order to dismantle the IMLS

Libraries across the United States are cutting back on e-books, audiobooks, and loan programs after the Trump administration suspended millions of dollars in federal grants as it tries to dissolve the Institute of Museum and Library Services. Federal judges have issued temporary orders to block the Trump administration from taking any further steps toward gutting the agency. But the unexpected slashing of grants has delivered a significant blow to many libraries, which are reshuffling budgets and looking at different ways to raise money. Maine has laid off a fifth of its staff and temporarily closed its state library after not receiving the remainder of its annual funding. Libraries in Mississippi have indefinitely stopped offering a popular e-book service, and the South Dakota state library has suspended its interlibrary loan program. E-book and audiobook programs are especially vulnerable to budget cuts, even though those offerings have exploded in popularity since the COVID-19 pandemic. “I think everyone should know the cost of providing digital sources is too expensive for most libraries,” said Cindy Hohl, president of the American Library Association. “It’s a continuous and growing need.”

Library officials caught off guard by Trump’s cuts

President Donald Trump issued an executive order March 14 to dismantle the IMLS before firing nearly all of its employees. One month later, the Maine State Library announced it was issuing layoff notices for workers funded through an IMLS grant program. “It came as quite a surprise to all of us,” said Spencer Davis, a library generalist at the Maine State Library who is one of eight employees who were laid off May 8 because of the suspended funding. In April, California, Washington, and Connecticut were the only three states to receive letters stating the remainder of their funding for the year was canceled, Hohl said. For others, the money hasn’t been distributed yet.
The three states all filed formal objections with the IMLS. Rebecca Wendt, California state library director, said she was never told why California’s funding was terminated while the other remaining states did not receive the same notice. “We are mystified,” Wendt said. The agency did not respond to an email seeking comment.

Popular digital offerings on the chopping block

Most libraries are funded by city and county governments, but receive a smaller portion of their budget from their state libraries, which receive federal dollars every year to help pay for summer reading programs, interlibrary loan services, and digital books. Libraries in rural areas rely on federal grants more than those in cities. Many states use the funding to pay for e-books and audiobooks, which are increasingly popular, and costly, offerings. In 2023, more than 660 million people globally borrowed e-books, audiobooks, and digital magazines, up 19% from 2022, according to OverDrive, the main distributor of digital content for libraries and schools. In Mississippi, the state library helped fund its statewide e-book program. For a few days, Erin Busbea was the bearer of bad news for readers at her Mississippi library: Hoopla, a popular app to check out e-books and audiobooks, had been suspended indefinitely in Lowndes and DeSoto counties due to the funding freeze. “People have been calling and asking, ‘Why can’t I access my books on Hoopla?’ ” said Busbea, library director of the Columbus-Lowndes Public Library System in Columbus, a majority-Black city northeast of Jackson. The library system also had to pause parts of its interlibrary loan system allowing readers to borrow books from other states when they aren’t available locally. “For most libraries that were using federal dollars, they had to curtail those activities,” said Hulen Bivins, the Mississippi Library Commission executive director.
States are fighting the funding freeze

The funding freeze came after the agency’s roughly 70 staff members were placed on administrative leave in March. Attorneys general in 21 states and the American Library Association have filed lawsuits against the Trump administration for seeking to dismantle the agency. The institute has an annual budget below $300 million and distributes less than half of that to state libraries across the country. In California, the state library was notified that about 20%, or $3 million, of its $15 million grant had been terminated. “The small library systems are not able to pay for the e-books themselves,” said Wendt, the California state librarian. In South Dakota, the state’s interlibrary loan program is on hold, according to Nancy Van Der Weide, a spokesperson for the South Dakota Department of Education. The institute, founded in 1996 by a Republican-controlled Congress, also supports a national library training program named after former first lady Laura Bush that seeks to recruit and train librarians from diverse or underrepresented backgrounds. A spokesperson for Bush did not return a request seeking comment. “Library funding is never robust. It’s always a point of discussion. It’s always something you need to advocate for,” said Liz Doucett, library director at Curtis Memorial Library in Brunswick, Maine. “It’s adding to just general anxiety.” —Nadia Lathan, Associated Press/Report for America Lathan is a corps member for the Associated Press/Report for America Statehouse News Initiative. Report for America is a nonprofit national service program that places journalists in local newsrooms to report on undercovered issues. View the full article

-

This new ruling cuts protections for transgender workers

Federal judge and Trump appointee Matthew J. Kacsmaryk issued a ruling on Friday that will significantly alter the protections that transgender employees are entitled to in the workplace. The decision impacts the current guidance on workplace harassment from the Equal Employment Opportunity Commission, in a move that reflects the agency’s new priorities under the Trump administration and new acting chair Andrea Lucas. In the ruling, Kacsmaryk struck down a section of the EEOC’s guidance that applied to trans and gender-nonconforming workers, arguing the agency did not have the authority to foist those guidelines on employers. The agency’s guidance had stated that misgendering employees, denying them access to appropriate bathrooms, or barring them from dressing in line with their gender identity could constitute workplace harassment.

Updates to workplace harassment guidance

The EEOC had updated its guidance on workplace harassment last year for the first time in decades, following a major Supreme Court ruling in 2020 that codified workplace protections for LGBTQ+ employees. (Over the last two years, the agency has also fielded well over 6,000 charges that alleged discrimination on the basis of sexual orientation or gender identity.) But Kacsmaryk ruled that the agency’s interpretation of the Supreme Court decision was too broad and imposed “mandatory standards” on employers, contradicting the EEOC’s claim that the guidance was not legally binding. Kacsmaryk also cited the “biological differences between men and women” and said the EEOC’s guidance “contravenes Title VII’s plain text by expanding the scope of ‘sex’ beyond the biological binary.”

A new administration’s priorities

Trump had already undermined protections for LGBTQ+ workers in one of his first executive orders, which dictated that the government would only recognize two biological sexes.
And even prior to this ruling, the new administration had already influenced the EEOC’s priorities: In her new capacity as acting chair, Lucas said the agency would now focus on “defending the biological and binary reality of sex and related rights” and complying with Trump’s executive orders. Over the last few months, there have been several reports that the EEOC is dismissing lawsuits that were already underway involving allegations of discrimination against trans or gender-nonconforming workers. The agency is also reportedly de-prioritizing new charges related to gender identity and discouraging EEOC judges from hearing existing cases that are under investigation. (The EEOC has not commented on these reports.) Since Trump dismissed EEOC commissioners Jocelyn Samuels and Charlotte Burrows, the agency has lacked a quorum and been unable to make formal revisions to its guidance—including the workplace harassment guidelines, which Lucas had voted against when they were issued in 2024. Earlier this month, however, Trump nominated a new commissioner who would secure a Republican majority at the EEOC if confirmed, enabling the agency to revoke prior guidance and make other consequential changes to worker protections. View the full article

-

Why We're Not Getting That AI-Powered Siri Anytime Soon

If you were under the assumption that Siri was soon going to be supercharged with AI, you wouldn't be alone. In fact, Apple has advertised as much since last WWDC, showing off its ChatGPT-like assistant in commercials and promotional materials. It's been nearly a year since WWDC 2024, and that new Siri is still not here. The thing is, it likely won't be for a long time. How long is anyone's guess (I've been tracking the delays here), but one thing seems certain: Apple is not showing off AI Siri at next month's WWDC 2025.

Apple's AI program is a mess

In Mark Gurman's latest piece for Bloomberg, he describes a chaotic situation regarding Apple's AI department. The piece is a fascinating and in-depth look at Apple's AI woes, and I won't give a detailed summary of the entire article. However, I will briefly discuss what's going on, and how it relates to AI Siri: Executive leadership at Apple, including senior vice president of Software Engineering Craig Federighi, didn't believe AI was worth the investment, and didn't want to allocate resources away from Apple's core software components in order to develop the technology. But once Federighi used ChatGPT following its late 2022 launch, he did a 180. He and other Apple executives began meeting with the big AI companies to learn everything they could, and pushed for iOS 18 to have "as many AI-powered features as possible." While Apple's AI department already existed before this scramble (the company had poached Google's artificial intelligence chief John Giannandrea), the engineers simply couldn't match the quality or accuracy of the tools provided by other companies like OpenAI, Anthropic, or Google, who had a huge head start on generative AI. That lag manifested in two ways: First, many of the AI features that Apple did bring to market were half-baked.
Apple Intelligence's notification summaries feature, for example, infamously made some major mistakes, such as "summarizing" a BBC news alert to say that United Healthcare shooting suspect Luigi Mangione had shot himself. (Apple later disabled the feature for news alerts.) Second, because Apple couldn't rely on its own tech to carry Apple Intelligence, they outsourced some tech to another AI company. While there was much debate about which company they should work with (Giannandrea wanted to work with Google and bring Gemini to iOS), Apple eventually settled for ChatGPT—which is why OpenAI's bot is built into your iPhone today. Apple's lack of AI focus meant they missed the rush to acquire GPUs—the main processing unit used for training and running AI models. They also have strict privacy policies when it comes to user data, which severely limits what data they can use to train their models. (Some might say that's actually a good thing, and take a pause to think about the companies that do have a plethora of data to train with.)

Siri

While Apple was able to get some AI features working "well" enough to ship, Siri was never among them. In order to bring AI to Siri, the company had to split Siri's "brain" in two—one featuring the existing code, used for traditional Siri tasks like setting timers and making calls, and the other for AI. While the AI-side in a vacuum can work, integrating it with the other half of Siri's brain is problematic, and is the cause of much of the delay. But rather than wait until Apple figured out how to get AI Siri working to actually show off its new features, the company went ahead and marketed them heavily. During WWDC 2024, we saw prerecorded demos of Siri taking complex requests, and generating helpful answers by accessing both a knowledge base about the user in question, as well as an awareness of what was happening on-screen.
A prime example was an Apple employee asking Siri about their mom's travel itinerary: Siri dove through the employee's messages with their mom to draw up the plans. Apple even hired Bella Ramsey of The Last of Us to promote AI Siri features: In the commercial, Ramsey sees someone at a party they recognize but don't remember the name of. Ramsey then asks Siri "What's the name of the guy I had a meeting with a couple of months ago at Cafe Granel?" Siri immediately responds with the acquaintance's name, pulling from a calendar entry. Ramsey doesn't need to say the exact date or where to pull the information from, since AI Siri is presumably contextually aware, and can understand vague responses. (Apple has since deleted the ad from its YouTube account.) Since then, AI features have trickled in on various iOS 18 updates, but not AI Siri. We've been following reports (mostly from Gurman) that Apple's engineers were having trouble getting it to work, and each delay pushed AI Siri's release to the next major iOS 18 update. At one point, we thought it could come with iOS 18.4: As Gurman reports in this latest piece, that was the plan, but Federighi himself was surprised to see that the AI Siri features didn't work on a beta for 18.4. Siri's big AI upgrade was delayed again, and now "indefinitely." There are no plans to announce Siri's new features alongside iOS 19 next month. While the goal is to get AI Siri out for iOS 19 at some point, the situation is dire—Siri's features reportedly don't work a third of the time, and every time you fix one of Siri's major bugs, "three more crop up." Gurman's sources say Apple has an AI department in Zurich working on a new LLM-based Siri that scraps the two-sided brain of the current assistant. Siri also has a new leader, Mike Rockwell, who replaced Giannandrea this spring. Some sources even say that Apple's internal chatbot is now rivaling ChatGPT, which could prove useful if integrated with Siri.
There are reasons to be mildly optimistic about Siri's long-term future, but there's no denying the last year has been a disaster. If you're excited for Siri's next big development, lower your expectations for the short-term. View the full article

-

Trump and Putin talk for 2 hours on the phone but fail to reach a Russia-Ukraine ceasefire deal

President Donald Trump and Russian President Vladimir Putin spoke for more than two hours Monday, after the White House said the U.S. leader has grown “frustrated” with the war in Ukraine. Trump planned a separate call with Ukrainian leader Volodymyr Zelenskyy in hopes of making progress toward a ceasefire. After the call, Putin said Russia was ready to continue discussing an end to the fighting, but he indicated there was no major breakthrough in what he termed a “very informative and very frank” conversation with Trump. Putin said the warring countries should “find compromises that would suit all parties.” “At the same time, I would like to note that, in general, Russia’s position is clear. The main thing for us is to eliminate the root causes of this crisis,” the Russian president said. The White House did not immediately provide its own account of the call. Trump has struggled to end a war that began with Russia’s invasion in February 2022. That makes these conversations a serious test of his reputation as a deal maker after having claimed he would quickly settle the conflict once he was back in the White House, if not even before he took office. Trump expressed his hopes for a “productive day” Monday—and a ceasefire—in a social media post over the weekend. His effort will also include calls to NATO leaders. But ahead of the call, Vice President JD Vance said Trump is “more than open” to walking away from trying to end the war if he feels Putin isn’t serious about negotiation. “He’s grown weary and frustrated with both sides of the conflict,” White House press secretary Karoline Leavitt told reporters Monday ahead of the call. “He has made it clear to both sides that he wants to see a peaceful resolution and ceasefire as soon as possible.” The Republican president is banking on the idea that his force of personality and personal history with Putin will be enough to break any impasse over a pause in the fighting.
“I’d say we’re more than open to walking away,” Vance told reporters before leaving Rome after meeting with Pope Leo XIV. Vance said Trump has been clear that the U.S. “is not going to spin its wheels here. We want to see outcomes.” Trump’s “sensibilities are that he’s got to get on the phone with President Putin, and that is going to clear up some of the logjam and get us to the place that we need to get to,” said Trump’s envoy, Steve Witkoff. “I think it’s going to be a very successful call.”

Trump’s frustration builds over failure to end war

Still, there are fears that Trump has an affinity for Putin that could put Ukraine at a disadvantage with any agreements engineered by the U.S. government. Bridget Brink said she resigned last month as the U.S. ambassador to Ukraine “because the policy since the beginning of the administration was to put pressure on the victim, Ukraine, rather than on the aggressor, Russia.” Brink said the sign that she needed to depart was an Oval Office meeting in February where Trump and his team openly berated Zelenskyy for not being sufficiently deferential to them. “I believe that peace at any price is not peace at all,” Brink said. “It’s appeasement, and as we know from history, appeasement only leads to more war.” Trump’s frustration about the war had been building before his post Saturday on Truth Social about the coming calls. Trump said his discussion with Putin would focus on stopping the “bloodbath” of the war. It also will cover trade, a sign that Trump might be seeking to use financial incentives to broker some kind of agreement after Russia’s invasion led to severe sanctions by the United States and its allies that have steadily eroded Moscow’s ability to grow.
Trump’s hope, according to the post, is that “a war that should have never happened will end.” Vance said Trump would press Putin on whether he was serious about negotiating an end to the conflict, saying Trump doesn’t believe he is and that Trump may wash his hands of trying to end the war. “It takes two to tango,” Vance said, adding that “if Russia is not willing to do that, then we’re eventually just going to have to say, ‘This is not our war. It’s Joe Biden’s war. It’s Vladimir Putin’s war.’ ” His treasury secretary, Scott Bessent, said Sunday on NBC’s Meet the Press that Trump had made it clear that a failure by Putin to negotiate “in good faith” could lead to additional sanctions against Russia. Bessent suggested the sanctions that began during the administration of Democratic President Joe Biden were inadequate because they did not stop Russia’s oil revenues, due to concerns that doing so would increase U.S. prices. The United States sought to cap Russia’s oil revenues while preserving the country’s petroleum exports to limit the damage from the inflation that the war produced.

No ceasefire but an exchange of prisoners

Putin recently rejected an offer by Zelenskyy to meet in person in Turkey as an alternative to a 30-day ceasefire urged by Ukraine and its Western allies, including Washington. Instead, Russian and Ukrainian officials met in Istanbul for talks, the first such direct negotiations since March 2022. Those talks ended Friday after less than two hours, without a ceasefire in place. Still, both countries committed to exchange 1,000 prisoners of war each, with Ukraine’s intelligence chief, Kyrylo Budanov, saying on Ukrainian television Saturday that the exchanges could happen as early as this week. While wrapping up his four-day trip to the Middle East, Trump said Friday that Putin had not gone to Turkey because Trump himself wasn’t there.
“He and I will meet, and I think we’ll solve it, or maybe not,” Trump told reporters after boarding Air Force One. “At least we’ll know. And if we don’t solve it, it’ll be very interesting.” Zelenskyy met with Vance and Secretary of State Marco Rubio in Rome on Sunday, as well as European leaders, intensifying his efforts before the Monday calls. The Ukrainian president said on the social media site X that during his talks with the American officials, they discussed the negotiations in Turkey and that “the Russians sent a low-level delegation of non-decision-makers.” He also said he stressed that Ukraine is engaged in “real diplomacy” to have a ceasefire. “We have also touched upon the need for sanctions against Russia, bilateral trade, defense cooperation, battlefield situation, and upcoming prisoners exchange,” Zelenskyy said. “Pressure is needed against Russia until they are eager to stop the war.” The German government said Chancellor Friedrich Merz and French, British, and Italian leaders spoke with Trump late Sunday about the situation in Ukraine and his upcoming call with Putin. A brief statement gave no details of the conversation, but said the plan is for the exchange to be continued directly after the Trump-Putin call. Witkoff spoke Sunday on ABC’s This Week and Brink appeared on CBS’s Face the Nation. —Josh Boak and Zeke Miller, Associated Press View the full article

-

EY accused of ‘serious’ failings in audits of collapsed NMC Health

FTSE 100 group failed after disclosure of billions of dollars of off-balance sheet debts. View the full article

-

5 facts about the brain to improve your decision-making skills

Emily Falk is a Professor of Communication, Psychology, and Marketing at the University of Pennsylvania, where she directs the Communication Neuroscience Lab and the Climate Communication Division of the Annenberg Public Policy Center. Her work has been covered in the New York Times, Washington Post, BBC, Forbes, and Scientific American, among other outlets. What’s the big idea? Every moment is filled with how we’ve decided to spend our time, and that time defines us. We make value judgements (often automatically) of our options and follow similar patterns, day in and day out. When we decide we want to change in some way, it can be extremely difficult to snap out of our typical decision-making to opt for something else, even if we genuinely care about living differently. By understanding how the brain calculates values to drive daily decisions, we can learn how to explore paths that are more aligned with new goals and evolving self-image. By expanding the power and possibility of our choices, we increase the capacity for inner, societal, and cultural growth. Below, Emily shares five key insights from her new book, What We Value: The Neuroscience of Choice and Change. Listen to the audio version—read by Emily herself—in the Next Big Idea App. 1. Our brains shape what we value. Neuroscience research (first in monkeys and then in humans) has shown that a network of brain regions, known as the value system, computes our daily choices in a process called value calculation. This happens whether we’re aware of it or not. Each choice we make is first shaped by what options our brain considers. When I imagined choosing between a run or doing extra work, other options like heading to a karaoke bar with my coworkers or going for a walk with my grandma rarely entered the calculation. In the next phase of decision-making, the value system assigns a subjective worth to each choice based on our past experiences, current context, and future goals. Going for a run is hard. 
It’s the end of the day, and I’m already tired. Sure, the run would be good for my health, but look how many unread emails there are…so I choose to finish up the emails. After we choose, the value system keeps track of how things went, making us more likely to repeat choices that were more rewarding than we expected and less likely to repeat choices that were less rewarding than we expected. After choosing the work, my body felt kind of blah, but my students were making progress on interesting problems, and that felt good. The value our brains assign to different options is dynamic and can shift depending on context, like what mood we’re in, what other people are saying or doing, and which parts of the choice we pay attention to. Understanding this can help us identify opportunities to change, and ways to align choices with our bigger-picture goals and values. For me, I knew I needed to find a way to make moving around and spending time with people I love more compelling to my brain in the moment. 2. Our brains shape—and limit—who we are. Our sense of who we are is an important factor in shaping our choices. Neuroscientists have identified brain regions that help construct our sense of “me” and “not me,” and when we make decisions this self-relevance is deeply intertwined with the value system. Together they guide us toward choices that are aligned with our perceptions of who we already are. Having a coherent sense of who we are can be useful, but it also limits us. We tend to favor choices that reinforce our existing identity, sometimes at the cost of new opportunities and experiences. When I kept choosing work, my identity as a hard-working researcher who always hits deadlines and invests in mentoring students weighed heavily on my value calculations.
I didn’t think of myself as an athlete, and in the process, I deprived myself of chances to improve as a runner, dancer, or any number of other options. A mountain of research data shows that I’m not alone in this. Most of us cling to ideas and behaviors we consider “ours,” a phenomenon called the endowment effect. Sometimes, this helps us affirm our core values and reinforce choices that are compatible with longer-term goals, but it can also leave us with a bounded notion of self, meaning that it limits the way we see ourselves. When confronted with evidence that our past behaviors weren’t optimal, or that others want us to do things differently, it can make us defensive, leading us to double down on past choices, which can make change harder. When my grandma told me that she wished we could spend more time together, I barely let her finish the sentence before defensively explaining that of course we spent time together—she’d come to my house and hang out with my kids while I cooked dinner, and often we’d even steal a few minutes to walk around the block together. But, no, she patiently explained, that was not what she was after. It wasn’t until later when I was able to think about what really matters to me that I could see that what she was asking for was something that I wanted too. 3. We don’t decide alone. Neuroscientists have identified brain regions that help us understand what other people think and feel. The brain’s social-relevance system helps us connect and coordinate with other people, also shaping the decisions we make. Our sense of what others are doing or thinking strongly affects what we choose, what we’re willing to change, and the possibilities we consider. Feeling a sense of status, belonging, and connection serves as a powerful reward, and we often make choices shaped by these forces. When I imagined what other researchers were doing, I imagined them hard at work making progress—not going for runs or walks with their grandmas. 
It was important to me to be seen as a serious scientist. When I imagined my collaborator’s appreciation of my speedy email replies and my students’ gratitude for timely feedback, the decision to keep working made even more sense. “Feeling a sense of status, belonging, and connection serves as a powerful reward.” But, although the social relevance system can help us understand others, it can also mislead us. I was imagining what others would think and feel if I left work, and my social relevance system was very convincing, even though in reality, I didn’t actually know. I had never fact-checked those assumptions. The same can be said when our social relevance system paints such a vivid picture that we feel certain we know why a co-worker didn’t invite us to lunch or why our spouse is frustrated. By keeping us aligned with others, the social relevance system can do useful things like keep us current on trends. Or it can do harmful things like deluding us into agreeing with popular but patently false social media posts. It also shapes what we think is acceptable and desirable regarding whether we should spend a beautiful autumn afternoon at the office or on a jog. 4. We can work with our brains to create change. Many of us have been taught that hard work and pain are necessary for achievement. Theodore Roosevelt went so far as to suggest that “Nothing in the world is worth having or worth doing unless it means effort, pain, difficulty…” But neuroscience research highlights that small shifts in how we frame decisions can change our value calculations. My hope is that understanding how this works might make change easier. Our value system serves as the bridge between where we are now and where we want to be, and the self- and social-relevance systems shape what we value. 
If we want to change ourselves, the people we care about, or even society, we need to harness these systems and leverage key ingredients for change, such as shifting focus, letting go of defensiveness, and expanding where the inputs to our value calculations come from in the first place. Based on what we know about the brain’s value system, we can shift our focus to different aspects of a situation to align our emotions with our objectives. For instance, we can leverage the brain’s natural tendency to prioritize immediate gratification to make future-oriented choices feel more rewarding in the present. Instead of choosing between immediate and future rewards, we can find ways to bridge them. Returning to the whisper in the back of my mind, reminding me to spend more time with my grandma, I knew I needed to find a way to make walks with Grandma Bev compatible with the other immediate pressures factoring into my value calculations. One day, walking home from work, a podcast episode created a small bridge in my thinking. The episode of How to Save a Planet highlighted the joy of biking. What if, instead of driving to Bev’s, arriving stressed from the traffic and struggling to find parking, I biked? What if I had some time to myself outside and got some exercise on the way? The immediate reward of checking something off of my work to-do list was offset by the opportunity to enjoy a bike ride in addition to the reward of spending time with my grandma. Hearing these regular people on the podcast teetering around on bikes also made me think that I could be that kind of person too. “We can shift our focus to different aspects of a situation to align our emotions with our objectives.” There was still the issue of not wanting my grandma to be right when she pointed out that we weren’t spending much quality time together. Should I admit I was wrong? 
Using what we know about the self-relevance system, to combat defensiveness, we can focus on “self-transcendent values,” which focus on the things that matter most to us. Zooming out like this to focus on our core values, or the well-being of others and the world, allows us to see that being wrong about something doesn’t have to mean we’re a bad person, or that everything about us has to change. We can hold onto a core sense of self while opening ourselves to changing what’s not working and letting go of preconceived notions of who we are. Finally, turning to the social relevance system, the people who most immediately come to mind when we make choices get an outsized influence. When I was thinking about the people I spent my days with at work, it made work rewards salient. And this isn’t just true when it comes to decisions about how we spend our afternoons. All kinds of decisions, from the products we buy to what we think is important politically, are shaped by the voices we imagine most readily. Research shows that the people we spend time with are often similar to us in many ways. To counteract known biases in the social relevance system, we can audit who is and isn’t part of our social networks and actively bring in new ideas through what we read, listen to, and spend time with. This expands our universe of choices, provides new and unexpected perspectives, and improves our ability to come up with creative solutions to problems. 5. Shaping the future starts in our minds. Our choices don’t just shape our own lives; they ripple outward, influencing culture and collective values. Research shows that norms are deeply shaped by those around us. Just as observing others influences our value calculations, we serve as role models for the people who see what we do. In this way, our own value calculations influence the people around us. When I share stories about my grandma with my students and colleagues, I’m not just telling them about my family. 
I’m opening a possibility for them to prioritize spending time with their loved ones. Consciously and unconsciously, our actions change what others value. The ways we see ourselves can influence what is possible for others, and what we think is possible shapes the culture of the future. This article originally appeared in Next Big Idea Club magazine and is reprinted with permission. View the full article

-

‘Is the cheese pull challenge accepted?’: Chili’s and TGI Fridays reignite their rivalry over mozzarella sticks on social media

Chili’s and TGI Fridays are in a full-blown mozzarella stick feud. Last week, TGI Fridays unveiled its new menu with a post on X: “New menu’s out. mozz sticks hit harder. happy hour’s calling. life’s good.” The next day, the chain appeared to throw shade at its fast casual rival, Chili’s Grill & Bar. new menu’s out. mozz sticks hit harder. happy hour’s calling. life’s good. — TGI Fridays (@TGIFridays) May 13, 2025 “Somebody tell [chili pepper emoji] to stay in their lane,” TGI Fridays posted on May 14. “Y’all are not mozzarella stick people. We are. That’s it. That’s the tweet.” Chili’s clapped back by sharing a screenshot of the post: “@ us next time… Also, we honestly didn’t know you were still open. Congrats!” @ us next time… also, we honestly didn’t know you were still open. congrats! pic.twitter.com/t32gxjNivm — Chili's Grill & Bar (@Chilis) May 15, 2025 Plot twist: the original tweet wasn’t even real. It was part of a marketing stunt pulled off by Chili’s. “The gag is this wasn’t even a real tweet,” TGI Fridays admitted in a reply to a commenter. the gag is this wasn't even a real tweet 😅 — TGI Fridays (@TGIFridays) May 16, 2025 Even so, the jab likely stung. TGI Fridays has only 85 restaurants left in the U.S., down from about 270 at the start of last year. Its parent company filed for Chapter 11 bankruptcy in late 2024, blaming the pandemic for ongoing financial struggles. Just last month, the chain closed another 30 locations. “OMG, I think I just witnessed a murder,” one X user wrote. Another added insult to injury: “they closed the Fridays near me….and opened another chilis…” Chili’s responded: “was probably for the best.” was probably for the best — Chili's Grill & Bar (@Chilis) May 16, 2025 Fans also dredged up a 2021 lawsuit against TGI Fridays over its frozen mozzarella sticks, which were found to contain cheddar instead of mozzarella. “Do they actually contain mozzarella now or nah?” one person asked. 
Refusing to back down, TGI Fridays proposed a showdown. “Is the cheese pull challenge accepted or nah??” the company asked. is the cheese pull challenge accepted or nah??🥱🥱🥱 https://t.co/fDHmZiIbrj — TGI Fridays (@TGIFridays) May 15, 2025 So… Team Chili’s or Team Fridays? View the full article

-

Seven Ways to Turn Your Entryway Into a More Functional Space

If you’re like most folks, you use your home's entryway to hang a few coats, store an unruly pile of shoes, and give you a place to drop your keys and the mail when you walk in. If you have the space, you might use it as a full-fledged mud room—a space to shed your wet coats, boots, and umbrellas before stepping into the house proper.

But your entryway could be a lot more than that—especially if you live in a small space and need to make use of every inch of square footage. Just because it’s a space you and your guests pass through on the way to somewhere else doesn’t mean you can’t use it for other purposes. And you can enhance the space for the guest experience as well, turning a transitional room no one remembers into a multi-functional space. Here are seven options for getting more out of your entryway.

Use it as an office space

If you ever work from home or just need a space to do household paperwork or to noodle away at your hobbies, your entryway can easily be transformed into a tiny office. A fold-down desk that compresses into an unobtrusive wall unit when not in use, combined with an adjustable stool or other mobile seating that can be used for putting on and taking off shoes when you’re not working, is all you need. If you have the wall space, throw in extra shelving or a narrow filing cabinet (or a combination piece such as a filing cabinet/printer stand/shelving unit) for maximum office storage in a minimal footprint. When you’re not working, you can fold everything up and instantly have a clear entryway again.

Turn it into a library

Need a place to store your books and set up a cozy reading nook? The entryway could be that spot. Add a row of shelves (or other book storage) along one wall, a small but comfy chair (with a slim console table for cups of tea or literary cocktails), and a floor lamp that’s great for reading, and you’re all set. When not in use, this area can also set a warm, comfortable vibe for visitors.

Assemble a gallery display

If you have lots of art pieces, collectibles, or mementos to display, your entryway is an ideal place to put them all. Hanging pictures or installing floating shelves to display your items not only beautifies the space, giving it a cohesive and personal style, it also makes it a lot more inviting for guests. Instead of moving through a chaotic or antiseptic place that pushes them to kick off their shoes and get into the main part of the house as quickly as possible, your entryway could be a place people want to linger.

Build a conversation nook

If your home lacks a space to sit and chat without distractions, your entryway might be the ideal spot. If you have room for a shallow sofa or a pair of small accent chairs, you can set up a spot where you can visit with guests. Having a space like this in your entryway also makes it ideal for those casual encounters with neighbors—instead of standing awkwardly in the doorway for fifteen minutes, invite them in to sit.

Add storage

If you’re hurting for storage space, consider all that underused wall and floor space in your entryway. You can store anything there, after all—it doesn’t have to be entryway-related, and no one will ever know what’s in those drawers and cabinets unless you tell them. Even a small foyer can be made into a storage paradise with some shelving, a shallow wardrobe or armoire, or a dual-purpose storage bench.

Use it as a bar

You want to host sophisticated gatherings fueled by social lubricants, but your bar is currently also your kitchen counter? Look to your entryway. A narrow bar cart against one wall could be the answer. If you want to make it a space people will linger in after securing their cocktails, add some floating shelves at counter height (typically 36-48 inches high) to set down glasses. To really commit, a slim beverage refrigerator for beer, wine, and mixers next to the bar cart is a great addition.

Turn it into your family information center

Your entryway can be the place where everyone in the house knows to look for daily information. A cork board or chalkboard (or a combination) mounted in the entryway makes it easy for everyone coming and going to check the day’s schedule, look for reminders, or pick up and drop off items for later retrieval. This can be a better option than the kitchen because it will be the last and first place people see when leaving or entering the house, increasing the chances that you’ll actually remember the things you’re reminding one another about. (When you have guests staying over, it’s also a perfect spot to mark down things like the WiFi password or important phone numbers.)

View the full article

-

More storms to hit central U.S. after deadly storms and tornado damage

More severe storms were expected to roll across the central U.S. this week following the weather-related deaths of more than two dozen people and a devastating Kentucky tornado.

The National Weather Service said a “multitude of hazardous weather” would impact the U.S. over the next several days—from thunderstorms and potentially baseball-size hail on the Plains, to heavy mountain snow in the West and dangerous heat in the South. Areas at risk of thunderstorms include communities in Kentucky and Missouri that were hit by Friday’s tornadoes.

In London, Kentucky, people whose houses were destroyed scrambled Sunday to put tarps over salvageable items or haul them away for safe storage, said Zach Wilson. His parents’ house was in ruins and their belongings scattered. “We’re trying the hardest to get anything that looks of value and getting it protected, especially pictures and papers and things like that,” he said.

Here’s the latest on the recent storms, some tornado history, and where to look out for the next weather impacts.

Deadly storms claim dozens of lives

At least 19 people were killed and 10 seriously injured in Kentucky, where a tornado on Friday damaged hundreds of homes and tossed vehicles in southeastern Laurel County. Officials said the death toll could rise and that three people remained in critical condition Sunday.

Wilson said he raced to his parents’ home in London, Kentucky, after the storm. “It was dark and still raining, but every lightning flash, it was lighting up your nightmares: Everything was gone,” he said. “The thankful thing was me and my brother got here and got them out of where they had barricaded themselves.”

Survey teams were expected on the ground Monday so the state can apply for federal disaster assistance, Gov. Andy Beshear said. Some of the two dozen state roads that had closures could take days to reopen.

In St. Louis, five people died and 38 were injured as the storm system swept through on Friday, according to Mayor Cara Spencer. More than 5,000 homes in the city were affected, she said. On Sunday, city inspectors were going through damaged areas to condemn unsafe structures, Spencer said. She asked for people not to sightsee in damaged areas.

A tornado that started in the St. Louis suburb of Clayton traveled at least 8 miles (13 kilometers), had 150-mph (241-kph) winds, and had a maximum width of 1 mile (1.6 kilometers), according to the weather service. It touched down in the area of Forest Park, home to the St. Louis Zoo and the site of the 1904 World’s Fair and the Olympic Games that same year.

In Scott County, about 130 miles (209 kilometers) south of St. Louis, a tornado killed two people, injured several others, and destroyed multiple homes, Sheriff Derick Wheetley wrote on social media.

The weather system spawned tornadoes in Wisconsin and temporarily enveloped parts of Illinois—including Chicago—in a pall of dust. Two people were killed in the Virginia suburbs of Washington, D.C., by falling trees while driving.

The storms hit after the Trump administration cut staffing of weather service offices, with outside experts worrying about how it would affect warnings in disasters such as tornadoes.

A history of tornadoes

The majority of the world’s tornadoes occur in the U.S., which has about 1,200 annually. Researchers in 2018 found that deadly tornadoes were happening less frequently in the traditional “Tornado Alley” of Oklahoma, Kansas, and Texas, and more frequently in parts of the more densely populated and tree-filled South.

They can happen any time of day or night, but certain times of the year bring peak “tornado season.” That’s from May into early June for the southern Plains, and earlier in the spring on the Gulf Coast.

The deadliest tornado in Kentucky’s history was hundreds of yards wide when it tore through downtown Louisville’s business district in March 1890, collapsing multistory buildings including one with 200 people inside. Seventy-six people were killed.

The last tornado to cause mass fatalities in Kentucky was a December 2021 twister that lasted almost five hours. It traveled some 165 miles (266 kilometers), leaving a path of destruction that included 57 dead and more than 500 injured, according to the weather service. Officials recorded at least 41 tornadoes during that storm, which killed at least 77 people statewide. On the same day, a deadly tornado struck the St. Louis area, killing six people at an Amazon facility in nearby Illinois.

More storms threaten in coming days

Thunderstorms with potentially damaging winds were forecast for a region stretching from northeast Colorado to central Texas. And tornadoes will again be a threat particularly from central Kansas to Oklahoma, according to the weather service.

Meanwhile, triple-digit temperatures were forecast for parts of south Texas, with the potential to break daily records. The hot, dry air also sets the stage for critical wildfire conditions through early this week in southern New Mexico and West Texas. Up to a foot of snow was expected in parts of Idaho and western Montana.

—Matthew Brown and Carolyn Kaster, Associated Press

View the full article

-

Google Ads bug stalls spending for New Customer Acquisition

A confirmed bug in Google Ads caused New Customer Acquisition (NCA) campaigns to stop spending budgets starting May 15. The issue has affected advertisers relying solely on this bidding strategy to reach new customers. The details: Google acknowledged the problem in an email shared by Google Ads consultant Benoit Legendre. “We are aware of an issue where the campaigns running on New Customer Acquisition only, have stopped spending starting May 15th,” Google said. The company added that its engineering team is actively working on a fix, but initially provided no specific timeline. Why we care. Advertisers using NCA-only bidding have seen campaign performance stall for days, potentially disrupting customer acquisition goals and monthly spend pacing. Google Ads is a critical advertising platform. Issues like this can have wide-reaching financial and strategic implications. The workaround. While the fix was pending, Google advised advertisers to temporarily switch bidding from “New Customer Acquisition only” to “New and existing customers.” This change can resume campaign delivery until the issue is resolved. The update. As of 11:45am ET on May 19, Google’s Ads Liaison Ginny Marvin confirmed that the engineering team “fully mitigated the issue.” NCA campaign performance should now begin returning to normal. First seen. The bug was first brought to broader attention via Legendre’s LinkedIn post, spotted by PPC News Feed and shared by Hana Kobzová. View the full article

-

What Ginnie Mae's newest executive is planning for mortgages

COO Joseph Gormley weighed in on cuts at the securitization guarantor and efforts to improve the industry's efficiencies and the government's. View the full article

-

Lenders feel better, not exuberant, about housing market

The Mortgage Bankers Association's latest forecast reflects the industry's current views on where their business is going, said Mike Fratantoni. View the full article

-

HR panicked my employee by sending a mysterious meeting request right before the weekend

A reader writes:

We received and validated some complaints about language used by a member of my team — off-color jokes, insensitive comments, etc. I agreed with HR that this did not rise to the level of a formal warning, but we would have a documented sit-down with the associate to explain it wasn’t acceptable and should not happen again, and further instances would have escalating consequences. Before this, the employee was a high performer without issues.

HR scheduled the meeting on Friday for the following Monday with a very generic subject line and said that she wished to discuss “communication” and included my manager in the invite as a courtesy (she is aware of the situation and supports the approach).

My employee immediately rang me, asking what the topic was. I explained as best as I could and that we would go into details together. But I am not keen on the communication on this topic. I would have preferred to raise this in our regular 1:1 meeting and then follow up with an email including all and summarizing the topic.

Am I right to think that this approach should have been more transparent up-front, especially over the weekend?

Yes, absolutely. Most people who receive a mysterious request to meet with HR, their manager, and their manager’s manager the following week with no details about the topic and the subject line “communication” would be a little concerned, at a minimum. Others would be full-on panicking. Leaving that hanging over them all weekend with no information is unkind.

And yes, this is something you should be able to handle on your own in a one-on-one meeting, anyway. If HR wants to be there, fine — but they should have coordinated with you about how it would be handled and not sent this cryptic email on their own. It’s crappy.

It sounds like once your employee asked you about it, you told them the basics, which was the right move rather than compounding the mystery and refusing to explain. Ideally at that point you’d say something like, “We’ve had some complaints about some language you’ve used and we want to clarify what is and isn’t okay. As long as we come out of that meeting on the same page I don’t expect it will need to be addressed again after that.” That way they know the topic and they’re also clear that they’re not about to be fired.

You have plenty of standing to tell HR that you think this was a bad way to handle it and that it unnecessarily panicked the employee, and ask that they coordinate with managers on this sort of communication in the future.

The post HR panicked my employee by sending a mysterious meeting request right before the weekend appeared first on Ask a Manager. View the full article

-

What the post-Brexit reset deal means for the UK

Agreement ranges over food, fishing, defence and youth mobility, but much remains to be finalised. View the full article

-

AI and Work (Some Predictions)

One of the main topics of this newsletter is the quest to cultivate sustainable and meaningful work in a digital age. Given this objective, it’s hard to avoid confronting the furiously disruptive potentials of AI. I’ve been spending a lot time in recent years, in my roles as a digital theorist and technology journalist, researching and writing about this topic, so it occurred to me that it might be useful to capture in one place all of my current thoughts about the intersection of AI and work. The obvious caveat applies: these predictions will shift — perhaps even substantially — as this inherently unpredictable sector continues to evolve. But here’s my current best stab at what’s going on now, what’s coming soon, and what’s likely just hype. Let’s get to it… Where AI Is Already Making a Splash When generative AI made its show-stopping debut a few years ago, the smart money was on text production becoming the first killer app. For example, business users, it was thought, would soon outsource much of the tedious communication that makes up their day — meeting summaries, email, reports — into AI tools. A fair amount of this is happening, especially when it comes to lengthy utilitarian communication where the quality doesn’t matter much. I recently attended a men’s retreat, for example, and it was clear that the organizer had used ChatGPT to create the final email summarizing the weekend schedule. And why not? It got the job done and saved some time. It’s becoming increasingly clear, however, that for most people the act of writing in their daily lives isn’t a major problem that needs to be solved, which is capping the predicted ubiquity of this use case. (A survey of internet users found that only around 5.4% had used ChatGPT to help write emails and letters. And this includes the many who maybe experimented with this capability once or twice before moving on.) 
The application that has instead leaped ahead to become the most exciting and popular use of these tools is smart search. If you have a question, instead of turning to Google you can query a new version of ChatGPT or Claude. These models can search the web to gather information, but unlike a traditional search engine, they can also process the information they find and summarize for you only what you care about. Want the information presented in a particular format, like a spreadsheet or a chart? A high-end model like GPT-4o can do this for you as well, saving even more extra steps. Smart search has become the first killer app of the generative AI era because, like any good killer app, it takes an activity most people already do all the time — typing search queries into web sites — and provides a substantially, almost magically better experience. This feels similar to electronic spreadsheets conquering paper ledger books or email immediately replacing voice mail and fax. I would estimate that around 90% of the examples I see online right now from people exclaiming over the potential of AI are people conducting smart searches. This behavioral shift is appearing in the data. A recent survey conducted by Future found that 27% of US-based respondents had used AI tools such as ChatGPT instead of a traditional search engine. From an economic perspective, this shift matters. Earlier this month, the stock price for Alphabet, the parent company for Google, fell after an Apple executive revealed that Google searches through the Safari web browser had decreased over the previous two months, likely due to the increased use of AI tools. Keep in mind, web search is a massive business, with Google earning over $175 billion from search ads in 2023 alone. 
In my opinion, becoming the new Google Search is likely the best bet for a company like OpenAI to achieve profitability, even if it’s not as sexy as creating AGI or automating all of knowledge work (more on these applications later). The other major success story for generative AI at the moment is computer programming. Individuals with only rudimentary knowledge of programming languages can now produce usable prototypes of simple applications using tools like ChatGPT, and somewhat more advanced projects with AI-enhanced agent-style helpers like Roo Code. This can be really useful for quickly creating tools for personal use or seeking to create a proof-of-concept for a future product. The tech incubator Y Combinator, for example, made waves when they reported that a quarter of the start-ups in their Winter 2025 batch generated 95% or more of their product’s codebases using AI. How far can this automated coding take us? An academic computer scientist named Judah Diament recently went viral for noting that the ability for novice users to create simple applications isn’t new. There have been systems dedicated to this purpose for over four decades, from HyperCard to VisualBasic to Flash. As he elaborates: “And, of course, they all broke down when anything slightly complicated or unusual needs to be done (as required by every real, financially viable software product or service).” This observation created major backlash — as does most expressions of AI skepticism these days — but Diament isn’t wrong. Despite recent hyperbolic statements by tech leaders, many professional programmers aren’t particularly worried that their jobs can be replicated by language model queries, as so much of what they do is experience-based architecture design and debugging, which are unrelated skills for which we currently have no viable AI solution. Software developers do, however, use AI heavily: not to produce their code from scratch, but instead as helper utilities. 
Tools like GitHub’s Copilot are integrated directly into the environments in which these developers already work, and make it much simpler to look up obscure library or AI calls, or spit out tedious boilerplate code. The productivity gains here are notable. Programming without help from AI is rapidly becoming increasingly rare. The Next Big AI Application Language model-based AI systems can respond to prompts in pretty amazing ways. But if we focus only on outputs, we underestimate another major source of these models’ value: their ability to understand human language. This so-called natural language processing ability is poised to transform how we use software. There is a push at the moment, for example, led by Microsoft and its Copilot product (not to be confused with GitHub Copilot), to use AI models to provide natural language interfaces to popular software. Instead of learning complicated sequences of clicks and settings to accomplish a task in these programs, you’ll be able to simply ask for what you need; e.g., “Hey Copilot, can you remove all rows from this spreadsheet where the dollar amount in column C is less than $10 dollars then sort everything that remains by the names in Column A? Also, the font is too small, make it somewhat larger.” Enabling novice users to access to expert-level features in existing software will aggregate into huge productivity gains. As a bonus, the models required to understand these commands don’t have to be nearly as massive and complicated as the current cutting-edge models that the big AI companies use to show off their technology. Indeed, they might be small enough to run locally on devices, making them vastly cheaper and more efficient to operate. Don’t sleep on this use case. 
Like smart search, it’s also not as sexy as AGI or full automation, but I’m increasingly convinced that within the next half-decade or so, informally articulated commands are going to emerge as one of the dominant interfaces to the world of computation.

What About Agents?

One of the more attention-catching storylines surrounding AI at the moment is the imminent arrival of so-called agents, which will automate more and more of our daily work, especially in the knowledge sectors once believed to be immune from machine encroachment.

Recent reports imply that agents are a major part of OpenAI’s revenue strategy for the near future. The company imagines business customers paying up to $20,000 a month for access to specialized bots that can perform key professional tasks. It’s the projection of this trend that led Elon Musk to recently quip: “If you want to do a job that’s kinda like a hobby, you can do a job. But otherwise, AI and the robots will provide any goods and services that you want.”

But progress in creating these agents has recently slowed. To understand why requires a brief snapshot of the current state of generative AI technology…

Not long ago, there was a belief in so-called scaling laws, which argued, roughly speaking, that as you continued to increase the size of language models, their abilities would continue to rapidly increase.

For a while this proved true: GPT-2 was much better than the original GPT, GPT-3 was much better than GPT-2, and GPT-4 was a big improvement on GPT-3. The hope was that by continuing to scale these models, you’d eventually get to a system so smart and capable that it would achieve something like AGI, and could be used as the foundation for software agents to automate basically any conceivable task.

More recently, however, these scaling laws have begun to falter.
Companies continue to invest massive amounts of capital in building bigger models, trained on ever more GPUs crunching ever-larger data sets, but the performance of these models has stopped leaping forward as much as it did in the past. This is why the long-anticipated GPT-5 has not yet been released, and why, just last week, Meta announced they were delaying the release of their newest, biggest model, as its capabilities were deemed insufficiently better than its predecessor’s.

In response to the collapse of the scaling laws, the industry has increasingly turned its attention in another direction: tuning existing models using reinforcement learning.

Say, for example, you want to make a model that is particularly good at math. You pay a bunch of math PhDs $100 an hour to come up with a lot of math problems with step-by-step solutions. You then take an existing model, like GPT-4, and feed it these problems one by one, using reinforcement learning techniques to tell it exactly where it’s getting certain steps in its answers right or wrong. Over time, this tuned model will get better at solving this specific type of problem.

This technique is why OpenAI is now releasing multiple, confusingly named models, each seemingly optimized for different specialties. These are the result of distinct tunings. They would have preferred, of course, to simply produce a GPT-5 model that could do well on all of these tasks, but that hasn’t worked out as they hoped.

This tuning approach will continue to yield interesting tools, but it will be much more piecemeal and hit-or-miss than what was anticipated when we still believed in scaling laws. Part of the difficulty is that this approach depends on finding the right data for each task you want to tackle. Certain problems, like math, computer programming, and logical reasoning, are well-suited for tuning as they can be described by pairs of prompts and correct answers.
But this is not the case for many other business activities, which can be esoteric and specific to a given context. This means many useful activities will remain un-automatable by language model agents for the foreseeable future.

I once said that the real Turing Test for our current age is an AI system that can successfully empty my email inbox, a goal that requires the mastery of any number of complicated tasks. Unfortunately for all of us, this is not a test we’re poised to see passed any time soon.

Are AGI and Superintelligence Imminent?

The Free Press recently published an article titled “AI Will Change What it Means to Be Human. Are We Ready?” It summarized a common sentiment that has been feverishly promoted by Silicon Valley in recent years: that AI is on the cusp of changing everything in unfathomably disruptive ways.

As the article argues:

“OpenAI CEO Sam Altman asserted in a recent talk that GPT-5 will be smarter than all of us. Anthropic CEO Dario Amodei described the powerful AI systems to come as ‘a country of geniuses in a data center.’ These are not radical predictions. They are nearly here.”

But here’s the thing: these are radical predictions. Many companies have tried to build the equivalent of the proposed GPT-5 and found that continuing to scale up the size of their models isn’t yielding the desired results. As described above, they’re left tuning the models they already have for specific tasks that are well-described by synthetic data sets. This can produce cool demos and products, but it’s not a route to a singular “genius” system that’s smarter than humans in some general sense.

Indeed, if you look closer at the rhetoric of the AI prophets in recent months, you’ll see a creeping awareness that, in a post-scaling-law world, they no longer have a convincing story for how their predictions will manifest.
A recent Nick Bostrom video, for example, which (true to character) predicts Superintelligence might happen in less than two years (!), adds the caveat that this outcome will require key “unlocks” from the industry, which is code for: we don’t know how to build systems that achieve this goal, but, hey, maybe someone will figure it out!

(The AI centrist Gary Marcus subsequently mocked Bostrom by tweeting: “for all we know, we could be just one unlock and 3-6 weeks away from levitation, interstellar travel, immortality, or room temperature superconductors, or perhaps even all four!”)

Similarly, if you look closer at AI 2027, the splashy new doomsday manifesto which argues that AI might eliminate humanity as early as 2030, you won’t find a specific account of what type of system might be capable of such feats of tyrannical brilliance. The authors instead sidestep the issue by claiming that within the next year or so, the language models we’re tuning to solve computer programming tasks will somehow come up with, on their own, code that implements breakthrough new AI technology that mere humans cannot understand.

This is an incredible claim. (What sort of synthetic data set do they imagine could train a language model to crack the secrets of human-level intelligence?)
It’s the technological equivalent of looking at the Wright Brothers’ Flyer in 1903 and thinking, “well, if they could figure this out so quickly, we should have interstellar travel cracked by the end of the decade.”

The current energized narratives around AGI and Superintelligence seem to be fueled by a convergence of three factors: (1) the fact that scaling laws did apply for the first few generations of language models, making it easy and logical to imagine them continuing to apply up the exponential curve of capabilities in the years ahead; (2) demos of models tuned to do well on specific written tests, which we tend to intuitively associate with intelligence; and (3) tech leaders pounding furiously on the drums of sensationalism, knowing they’re rarely held to account on their predictions.

But here’s the reality: We are not currently on a trajectory to genius systems. We might figure this out in the future, but the “unlocks” required will be sufficiently numerous and slow to master that we’ll likely have plenty of clear signals and warnings along the way. So, we’re not out of the woods on these issues, but at the same time, humanity is not going to be eliminated by the machines in 2030 either.

In the meantime, the breakthroughs that are happening, especially in the world of work, should be both exciting and worrisome enough on their own for now. Let’s grapple with those first.

####

For more of my thoughts on AI, check out my New Yorker archive and my podcast (in recent months, I often discuss AI in the third act of the show).

For more of my thoughts on technology and work more generally, check out my recent books on the topic: Slow Productivity, A World Without Email, and Deep Work.

The post AI and Work (Some Predictions) appeared first on Cal Newport. View the full article

-

AI and Work (Some Predictions)