Everything posted by ResidentialBusiness

-

SBA Implements New Verification Measures to Combat Loan Fraud

The U.S. Small Business Administration (SBA) has introduced a series of new verification protocols aimed at preventing fraud within its loan programs and ensuring that financial support reaches only eligible American small business owners.

The changes follow recent findings by the Department of Government Efficiency (DOGE), which uncovered widespread abuse of SBA loan programs. According to data from the U.S. Social Security Administration, over $630 million in loans were granted to applicants with birthdates suggesting they were either younger than 11 or older than 115 years old.

Key measures now in place include citizenship and date-of-birth verification as part of the SBA loan application process. These updates are intended to safeguard the integrity of the agency’s programs and restore public trust.

Fraud Prevention Measures

The SBA now requires lenders to verify the citizenship status of all applicants to ensure businesses are not owned wholly or partially by illegal aliens. This new protocol aligns with an executive order aimed at ending taxpayer subsidization of individuals in the country unlawfully.

In addition, loan applications must now include verified dates of birth. Any applicant reporting an age under 18 or over 115 will automatically be flagged under the SBA’s fraud alert system. The SBA stated these changes are designed to deter applicants from using identities belonging to deceased individuals or minors.

“With the help of DOGE, the SBA has already made a number of common-sense reforms to prevent the rampant fraud we’ve seen over the last four years,” said SBA Administrator Kelly Loeffler. “Unlike the previous Administration, we respect the American taxpayer and are dedicated to ensuring every dollar entrusted to this agency goes to support eligible, legitimate small businesses. With these simple fraud prevention measures, we will end the abuse of our loan programs – with stronger safeguards to hold bad actors accountable.”

Examples of Past Abuse

The SBA outlined examples of fraud that occurred under previous policies:

In June 2024, the agency approved a $783,000 loan to a business that was 49% owned by an illegal alien. However, the SBA identified the individual’s immigration status during a February audit and stopped disbursement, ensuring no funds were released.

Between 2020 and 2021, DOGE found more than 3,000 SBA loans, totaling $333 million, were issued to borrowers over the age of 115 according to Social Security records.

During the same period, DOGE identified over 5,500 loans worth approximately $300 million that were disbursed to children under the age of 11.

Commitment to Reform

The SBA emphasized that the new safeguards are part of a broader commitment to fiscal responsibility and program integrity. These steps are expected to reduce fraud and improve public confidence in the agency’s mission to support genuine small business development.

This article, "SBA Implements New Verification Measures to Combat Loan Fraud" was first published on Small Business Trends View the full article
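
For readers who want to see the date-of-birth rule from the article in concrete terms, here is a minimal sketch. It assumes only the thresholds the article states (ages under 18 or over 115 are flagged). The function names, the way age is computed, and the idea of passing an explicit "as of" date are illustrative assumptions, not a description of the SBA's actual fraud alert system.

```python
from datetime import date

# Thresholds quoted in the article: under 18 or over 115 triggers a flag.
MIN_AGE = 18
MAX_AGE = 115


def age_on(birth_date: date, as_of: date) -> int:
    """Return completed years between birth_date and as_of (hypothetical helper)."""
    years = as_of.year - birth_date.year
    # Subtract one year if the birthday has not yet occurred in the as_of year.
    if (as_of.month, as_of.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def should_flag(birth_date: date, as_of: date) -> bool:
    """Flag an application whose reported age is under 18 or over 115."""
    age = age_on(birth_date, as_of)
    return age < MIN_AGE or age > MAX_AGE
```

Under this sketch, an applicant who is exactly 18 or exactly 115 on the application date is not flagged, because the article's wording is "under 18 or over 115"; how the real system treats those boundary cases is not stated in the source.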

-

How to scale smarter with AI agents

Today’s B2B CEOs are tasked with a delicate balancing act: driving growth, improving efficiency, and creating seamless customer experiences, all while navigating unprecedented market complexity. Meanwhile, the revenue professionals responsible for executing these goals face their own challenges.

Buying journeys have become increasingly labyrinthine, with big buying teams and long sales cycles. Seventy-seven percent of B2B buyers say their last purchasing decision was very complex or difficult, with more than 800 interactions on average with potential vendors. Misalignment across revenue teams compounds the issue, making it nearly impossible to deliver efficient, relevant, and cohesive buyer experiences. This complexity creates a cycle of inefficiency, where teams work harder to achieve diminishing returns.

Fortunately, we’re at a pivotal moment in technology. AI and data advances are empowering organizations to simplify complex revenue cycles. Among these innovations, AI agents offer a promising solution. AI agents aren’t simple software add-ons. They’re intelligent partners that enable teams to act faster, collaborate more effectively, and scale more strategically. Let’s explore how CEOs can equip their teams with AI agents to achieve sustainable growth.

AI agents are partners, not tools

AI agents represent a significant evolution in business technology. Unlike traditional software, which passively waits for human input, AI agents actively analyze data, surface opportunities, make recommendations, and drive results in real time. For CEOs, this distinction is critical. AI agents don’t just automate repetitive tasks; they perform work that aligns with strategic goals. From identifying early buying signals to optimizing customer engagement, AI agents seamlessly integrate into workflows to ensure every touchpoint is efficient, personalized, and impactful.
In a world in which breaking down silos and acting on intelligence faster than competitors defines success, AI agents are the bridge between vision and execution.

Why good data powers great outcomes

AI agents are only as effective as the data that fuels them. AI agents are built on large language models (LLMs) trained on public data. That data can sometimes produce sketchy results, like when Google’s AI search raised (and then dashed) Disney fans’ hopes by describing the impending release of Encanto 2 because it pulled its data from a fan fiction site. The fallout of misinformation in business can do much more damage than simply disappointing movie-goers. Poor-quality data can lead to disjointed recommendations and faulty business decisions. Not only that, but if you only use public data to feed your AI agents, you’ll have the same output as everyone else relying solely on LLMs.

The solution for this lies within a business’s own walls. Enterprises have massive amounts of data that LLMs have not seen. Feeding this data to AI agents allows them to produce differentiated, contextualized output. For instance, integrating intent data into a sales-focused AI agent’s “diet” yields personalized outreach based on individual prospects’ needs. It’s also important that the data AI agents use is clean, accurate, and comprehensive, and that it spans the entire revenue organization. Shared data ensures that AI agents can piece together the full picture of the buyer journey, from early intent signals to post-sale engagement.

What CEOs get wrong about AI agents

AI agents are difficult to implement.

AI agents don’t necessarily require complex overhauls. Scalable, modular solutions make it easier than ever to adopt AI incrementally, starting with specific use cases and expanding as success builds.

Example: Many of our customers quickly deploy our conversational email agent for one use case (such as re-engaging closed/lost opportunities) and build from there.
This enables teams to see the immediate value of AI agent-led contextual email conversations, while at the same time laying the foundation for broader adoption.

AI agents are only about efficiency.

While AI agents excel at streamlining processes, their real value lies in their ability to drive strategic outcomes across industries.

Example: Johnson & Johnson uses AI agents in drug discovery to optimize chemical synthesis processes. AI enhances efficiency, but more importantly, it drives strategic advancements in pharmaceutical innovation by accelerating development timelines and improving cost-effectiveness.

The ROI of AI agents: Real-world impact

Harri, a global leader in workforce management technology for the hospitality industry, faced a challenge familiar to many CEOs: the need to scale engagement without increasing resources. To support their strong marketing team in generating demand, Harri implemented an AI agent through 6sense as part of its outreach strategy. The AI agent autonomously identified high-intent prospects and delivered timely, personalized messages at scale, enabling Harri to engage buyers more efficiently and effectively.

The results:

They generated more than $12 million in pipeline and $3 million in closed/won deals in just one quarter.

Campaigns achieved a 34% view-through rate (VTR), far exceeding the initial goal of 20%.

They scaled marketing efforts without compromising on personalized engagement.

By scaling outreach, improving engagement, and targeting high-value opportunities, Harri took pressure off its team, while achieving significant growth and enhancing the buyer experience.

Pave the way for smarter growth

AI agents are still so new that CEOs who aren’t using them yet can get ahead of the competition by learning to incorporate them now. These agents simplify complexity, align revenue teams, and deliver results.
By integrating AI agents, CEOs can create seamless, personalized buying journeys that meet today’s expectations while driving growth. With significant AI advancements ahead, having a clear strategy is essential. By proactively adopting AI agents, organizations can address challenges and position themselves for sustained success in a rapidly evolving market.

Jason Zintak is CEO of 6sense. View the full article

-

Gold hits record high as investors seek haven assets

Gold hits record high as investors seek haven assets. View the full article

-

Leading Thoughts for April 10, 2025

IDEAS shared have the power to expand perspectives, change thinking, and move lives. Here are two ideas for the curious mind to engage with:

I. Eric Potterat on putting in the practice you need for success:

“Effort is perhaps both the easiest and hardest aspect of mindset to practice. Easy because you know what needs to be done: more practicing, more studying, more exercising, more time. Hard because: more work. For some people (and many high performers) hard work is innate. They keep at it naturally; they don’t have to make themselves do it. But most of us are what I like to call “human”: we have a limit. When we reach that fork in our day when we could spend an hour practicing that thing we care about or rot our brain watching viral videos or reality shows, too often we opt for the videos. We’ll practice tomorrow. We suffer from (or benefit from, depending on whether you are sitting comfortably on your couch or not) an intention-behavior gap. We intend to do something, but we don’t do it.”

Source: Learned Excellence: Mental Disciplines for Leading and Winning from the World's Top Performers

II. Michael Pilarczyk on knowing what matters to you:

“There’s a reason behind every choice you make. There are reasons you decide to do or not do something, and those reasons chart your course. In other words, the meaning you assign to your thoughts determines your personality, your behavior, how you feel, how you react, and what you accomplish. Everything you do has a reason. There is a close relationship between your personal values and the choices you make. And this all directly affects your behavior.”

Source: Master Your Mindset: Live a Meaningful Life

* * *

Look for these ideas every Thursday on the Leading Blog. Find more ideas on the LeadingThoughts index.

* * *

Follow us on Instagram and X for additional leadership and personal development ideas. View the full article

-

It’s time to ditch generic product claims

The Fast Company Impact Council is an invitation-only membership community of leaders, experts, executives, and entrepreneurs who share their insights with our audience. Members pay annual dues for access to peer learning, thought leadership opportunities, events and more.

What’s in a claim? Sometimes a product can’t be defined by its claim, and that has become a huge problem for the consumer packaged goods industry. Take Dr. Bronner’s and Scrumbles, for example, which both recently announced they’re dropping their B Corp certification for what they perceive to be weakening standards that allow greenwashing.

The changing claims landscape

What B Lab Global has done is admirable. In 2006, they set out to recognize businesses that were a force for good, meeting high standards of social and environmental performance, transparency, and accountability. They deserve credit for their part in starting a global movement that redefined the role of business in society and helped usher in a new era of capitalism where purpose and profit are both priorities.

But something pivotal happened along the way that revolutionized how deeply we’re able to understand products, yet B Corp and many of today’s product claims don’t account for it: the proliferation of data. Consumers initially saw the “B” and assumed it signified health, sustainability, or ethical practices. But as access to information increased, people started digging deeper. And what did they find? Sometimes, not much. The B Corp label, like many generic claims, became an umbrella term indicating different things to different people, or nothing at all.

A consumer reckoning is here

This problem isn’t unique to B Corp; it’s a symptom of a larger consumer reckoning. Consider the term “clean beauty.” It lacks a standardized definition, leaving its meaning up to interpretation. For some, it equates to products with safe ingredients; for others, it might be about sustainable packaging.
But even “safe” and “sustainable” are too vague to tell us what we really want to know, such as if a fragrance is allergen-free or if its packaging is compostable. Shopping has almost become a guessing game, but it’s one the modern consumer refuses to play.

I had my own “aha” moment when I was pregnant with my first daughter and started to become hyperaware of what ingredients and materials were in the things I was putting in and on my body. Through my extensive research, I quickly discovered how much of what we’re exposed to is toxic to human health and even started an Excel spreadsheet of what to avoid, which I consulted every time I made a purchase. It’s what led me to found Novi Connect, which gives brands and retailers the tools to provide data, signals, and even stories to consumers about their products.

Ten years ago, this might have sounded excessive. But today, more consumers are demanding this level of transparency. They want clarity and precision, not ambiguity, and it’s time for brands and retailers to deliver.

The power of granular data

Here’s the good news: They can. With the proliferation of data and AI, we’re rapidly moving beyond binary labels and embracing a world of sophisticated, specific product attributes. This granularity allows brands and retailers to cater to the nuanced values of their customers.

My favorite illustrative example of how this can show up is glycerin. Glycerin is one of the most benign, noncontroversial ingredients and is present in almost every product we use. But based on how it’s made, it can cater to consumers with very different values. If it’s derived from plants, that means it’s vegan; but that also typically means it’s derived from palm oil. Was the palm oil responsibly sourced? If so, that claim can be made to provide assurance that no deforestation or unfair labor practices were used in the production of the glycerin.
Or, maybe no palm was used and the glycerin was derived from a less common feedstock like coconut oil. Now a palm-free claim can be made, which might be important to those looking for products that align with their environmental values. These are the questions shoppers are asking, and they’re demanding verified answers before deciding where to spend their money.

The retailer responsibility

While consumers are driving this change, the onus is on brands and retailers to embrace it and figure out how to make it work for their customer, and ultimately, their business. It’s important to note that there’s a delicate balance between presenting information for a seamless shopping experience and providing detailed product claims.

Amazon is a poster example of what this can look like. They use the green leaf symbol to provide a high-level signal and draw the customer in, then also allow you to explore the details of why a product earned that designation. Their program includes 55 unique certifications a product can qualify for. That might sound overwhelming, but it takes into account that not all shoppers care about the same things, and not all certifications are relevant for all products. With this system, it’s easy to identify products that meet your personal criteria, whether you’re focused on ingredient health and safety; carbon emissions and reduction; agriculture and how products are grown and processed; and so forth.

You can see how this approach respects the buyer’s need for both simplicity and depth. And Amazon is strengthening their bottom line in the process, driving double-digit increases in both page discovery and sales with their badge program. That’s how you align purpose and profit. When companies properly leverage data to enable people to shop with purpose by aligning purchases with beliefs, it creates a more personalized shopping experience that keeps the customer coming back.
In today’s market where there are endless options and instant access to information, loyalty is paramount. After all, if you don’t have repeat customers, you don’t have a business. So the choice is clear: Embrace transparency or risk irrelevance. The future of retail belongs to those who empower consumers with the truth. Tell them exactly what’s in a claim. Kimberly Shenk is cofounder and CEO of Novi Connect. View the full article

-

US risky debt funds hit by historic outflows as Trump’s tariffs shake markets

Investors rush away from junk bonds and leveraged loans on rising recession fears. View the full article

-

Retailers have big questions about AI

Retail is at a turning point. AI is no longer a futuristic idea or marketing buzzword; it’s a business necessity. Consumers expect intelligent, seamless, and personalized experiences at every touchpoint. The brands that deliver on those expectations will win. Those that don’t will fall behind.

Still, when I talk with retail leaders, I hear the same concerns again and again: How do we make AI feel natural, not robotic? Can it really drive sales, or is it just a cost-cutting tool? How do we integrate AI without blowing up our current operations? And beyond the contact center, where else can AI have real impact?

These aren’t just passing questions. They’re real blockers, slowing down progress. That’s why we launched an AI Lab webinar series, and write articles like this to get information out publicly with practical, business-first answers.

AI needs to do more than automate

Retailers have dipped their toes into AI (automated chatbots, product recommendations, predictive analytics), but too often, these tools operate in silos. That leads to clunky experiences and limited impact. The mindset is shifting. It’s no longer just about efficiency. It’s about impact. AI shouldn’t only reduce costs. It should increase engagement, drive revenue, and build customer loyalty. Here are three principles we’ve seen drive real success:

1. AI should sell, not just support

Traditionally, retail AI has played defense, handling order tracking, return policies, and FAQs. But it’s time to put AI on offense. Think of guided selling: AI that acts like a smart associate, asking about customer preferences, budget, or style, and responding naturally. It’s the digital equivalent of a great in-store experience.
One example: A luxury jewelry brand used conversational AI to recommend add-ons and upgrades based on a customer’s past purchases. The result? A 30% boost in upsells, with zero human agent involvement. The takeaway: AI can drive conversions and revenue. It just needs to be designed with that goal in mind.

2. Proactive > reactive

Most AI waits for customers to initiate the conversation. That’s a missed opportunity. Take cart abandonment. Nearly 70% of online carts are abandoned before checkout. AI can spot hesitation (lingering on the checkout page, revisiting items) and respond in real time with:

A one-click checkout to reduce friction

A last-minute incentive

A helpful AI assistant offering answers

AI shouldn’t just respond when customers get stuck. It should help them move forward.

3. AI that works with people, not instead of them

The most successful retailers don’t replace humans; they empower them. Think about frontline staff. AI can handle the repetitive stuff so humans can focus on high-value interactions: complex purchases, emotional moments, loyalty-building conversations. It also works the other way. Human agents generate valuable data (about buying habits, objections, preferences) that AI can learn from and use to personalize future experiences. That’s the real win: a human-AI partnership that gets smarter over time and drives better outcomes across the customer lifecycle.

Rethink the AI roadmap

Too often, brands start with customer support because it feels “safe.” But forward-thinking leaders are broadening their lens and seeing greater return. We’re working with retailers that are embedding AI into every stage of the customer journey:

Pre-purchase: Digital consultations, guided product discovery, preference-based recommendations

In-purchase: Smart upsell suggestions, checkout support, frictionless payments

Post-purchase: Delivery updates, service requests, loyalty rewards, re-engagement

And here’s the kicker: these touchpoints don’t need to be siloed.
The right AI platform can stitch them together into a seamless, personalized journey.

What makes the difference

Three things separate retailers who are winning with AI from those still spinning their wheels:

Start with the customer, not the tech. Don’t ask, “What can this tool do?” Ask, “Where is the customer getting stuck, and how can we help them move forward?”

Design for outcomes. If your AI project doesn’t tie back to a business metric (conversion, lifetime value, customer satisfaction (CSAT)), you’re flying blind.

Make it measurable. Set clear goals. Track impact. Optimize based on results. This isn’t about proving AI works in general; it’s about proving it works for your brand.

Final thought: Innovation without disruption

AI doesn’t need to blow up your tech stack. It should integrate with your existing systems, layer in intelligence, and get smarter over time. We call it “innovation without disruption.” You don’t have to rip and replace. You just have to start with the right mindset and the right partner.

AI in retail isn’t just about answering questions. It’s about asking the right ones and making sure your tech stack is ready to answer them in ways that actually move the business forward.

John Sabino is CEO of LivePerson. View the full article

-

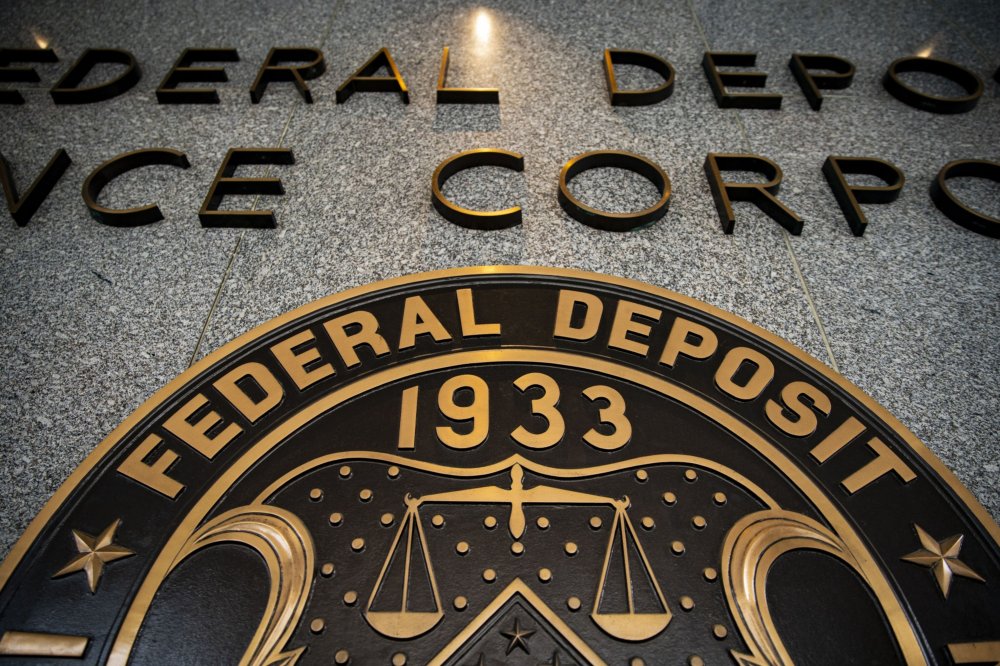

DOGE descends on FDIC in federal downsizing push

A Department of Government Efficiency team is working with FDIC leadership to "increase efficiency," which could include cuts to contracts and streamlining staff. FDIC says DOGE staffers have "appropriate clearances." View the full article

-

Trigger lead legislation reintroduced in Congress

Versions of the bill were introduced in the House and Senate, raising odds that a national trigger lead ban could be near. View the full article

-

Google Confirms: Structured Data Still Essential In AI Search Era via @sejournal, @MattGSouthern

Google AI search news: no special optimizations needed for AI features, but structured data still matters. The post Google Confirms: Structured Data Still Essential In AI Search Era appeared first on Search Engine Journal. View the full article

-

You Can Bring Back One-Tap 'Do Not Disturb' to Your Google Pixel

Software updates are great when they make features better, but that doesn't always happen. Case in point: a recent Google Pixel update that's brought in a flurry of complaints. One of the least popular changes altered how Pixels handle the Do Not Disturb feature. Previously, you could swipe down from the top of your Pixel's screen and press the Do Not Disturb button in quick settings to toggle it on or off. The March 2025 update changed that, forcing you to make multiple taps to activate or deactivate Do Not Disturb on your Google Pixel smartphone. Plenty of Google Pixel users took to Reddit to complain about this change. Fortunately, there are easy ways to fix this behavior.

Restore one-tap Do Not Disturb to your Google Pixel smartphone

The easiest way to bring back the old Do Not Disturb button is by downloading an app. Simple DND lets you do exactly what the old button used to. Once you install the app and give it the required permissions, simply tap the Add Tile button to send a quick Do Not Disturb toggle back to your Pixel's quick settings pane. Once the tile is there, you can rearrange it as you see fit. Just swipe down from the top of your Pixel's screen and select the pencil icon to edit and reorder your quick settings tiles. You can use this to move the new Do Not Disturb button to your preferred location. This app was developed by someone who faced the exact same problem after the update, and it doesn't do anything other than restore the old Do Not Disturb button.

The other alternative is to add a Do Not Disturb button to the lock screen and access it from there. This will require you to give up one of the two shortcuts on your lock screen and replace it with Do Not Disturb. You can change this by going to your Pixel phone's Settings app and navigating to Display > Lock screen > Shortcuts. Now select one of the shortcuts and pick Do Not Disturb to add it there.
In the meantime, if you want Google to bring back the old, one-tap button, you can send your feedback to the company. On your Pixel, go to Settings > Tips & support > Send feedback. Be sure to describe the problem in detail so that the person reading it can understand the issue and pass it on to the relevant team accordingly. View the full article

-

Top Chinese general removed in latest Xi Jinping purge

He Weidong was the number-two officer in the People’s Liberation Army and a member of the Communist party’s Politburo. View the full article

-

Inside Bell Labs, the secretive New Jersey research compound where the future is made

Nokia Bell Labs has a long, storied history of producing Nobel Prize winners, creating innovative new technologies, and bolstering critical infrastructure that underlies most of the devices we all use every day. This week, it held a special event at its Murray Hill, New Jersey campus to celebrate its 100th anniversary, featuring appearances by politicians like New Jersey Governor Phil Murphy, business leaders, and even a robot named “Porcupine.” Fast Company was able to get a behind-the-scenes tour of the complex, and several of the projects and laboratories that are working on new and advancing technologies, labs that are typically shut away from the public eye. Nokia acquired Bell Labs in 2016 when it purchased Alcatel-Lucent. The vast complex is mostly empty now, as it was built for a time when thousands of workers would fill its labs and offices. The expansive campus houses a number of laboratories where, over the past century, numerous groundbreaking discoveries and inventions have been made or perfected, including cell phones, transistors, and solar cells. Despite Bell Labs’ relatively small in-office workforce, there are still researchers and scientists toiling away on numerous projects, which include augmenting undersea data cable technology, creating real-time AI platforms to increase mining operations, and new tech related to telecommunications devices and arrays. This is all largely tech that flies under the public’s radar, but is critical for supporting cell phones and wireless internet networks. For example, because of the work that’s been done at Bell Labs and other facilities over the past couple of decades, much of our wireless and telecom infrastructure was able to handle the surge in demand due to the COVID-19 pandemic, when much of work and schooling was done remotely.
“If the pandemic had occurred a decade earlier,” one researcher said, “it would have crippled us.” There was also a demonstration related to Nokia’s ongoing “Industrial GPT” research, which includes training robots to understand and react to voice commands. One demonstration even included a robot named “Porcupine” that has the ability to find specific containers in a warehouse-like setting, or figure out if inventory is missing and how to replace it. The company is also hard at work on quantum computing projects, which have massive potential, if ever fully realized. Michael Eggleston, a physicist and research group leader at Nokia, says that despite what some business leaders say, quantum computers “are real, and they’re here.” However, there are many different types that can be used for different aims. “Whether or not the technology converges—that’s the big question,” he says. Eggleston and others are working on perfecting the underlying quantum technology before bringing quantum computing products and services to the market, where they stand to exponentially increase computing power across the board—something that could potentially dwarf the changes AI tech has recently brought to the world. The event also served as something of a swan song for the Bell Labs complex, as Nokia is preparing to transplant its labs and researchers to the new HELIX complex in nearby New Brunswick. That move is planned to be completed by 2028. View the full article

-

Inside Nokia Bell Labs, the secretive New Jersey research compound where the future is made

Nokia Bell Labs has a long, storied history: producing Nobel Prize winners, creating innovative new technologies, and bolstering critical infrastructure that underlies most of the devices we all use every day. This week, it held a special event at its Murray Hill, New Jersey campus to celebrate its 100th anniversary, and it featured appearances by politicians like New Jersey Governor Phil Murphy, business leaders, and even a robot named “Porcupine.” The expansive campus houses a number of laboratories where, over the past century, numerous groundbreaking discoveries and inventions have been made or perfected, including cell phones, transistors, and solar cells. Nokia acquired Bell Labs in 2016 when it purchased Alcatel-Lucent. The vast complex is mostly empty now, as it was built for a time when thousands of workers would fill its labs and offices. As a part of the anniversary celebrations, Fast Company was able to get a behind-the-scenes tour of the complex, and several of the projects and laboratories that are working on new and advancing technologies, labs that are typically shut away from the public eye. Despite Bell Labs’ relatively small in-office workforce, there are still researchers and scientists toiling away on numerous projects, which include augmenting undersea data cable technology, creating real-time AI platforms to increase mining operations, and new tech related to telecommunications devices and arrays. This is all largely tech that flies under the public’s radar, but is critical for supporting cell phones and wireless internet networks. For example, because of the work that’s been done at Bell Labs and other facilities over the past couple of decades, much of our wireless and telecom infrastructure was able to handle the surge in demand due to the pandemic, when much of work and schooling was done remotely.
“If the pandemic had occurred a decade earlier,” one researcher said, “it would have crippled us.” There was also a demonstration related to Nokia’s ongoing “Industrial GPT” research, which includes training robots to understand and react to voice commands. One demonstration even included a robot named “Porcupine” that has the ability to find specific containers in a warehouse-like setting or figure out if inventory is missing, and how to replace it. The company is also hard at work on quantum computing projects, which have massive potential, if ever fully realized. Michael Eggleston, a physicist and Research Group Leader at Nokia, says that despite what some business leaders say, quantum computers “are real, and they’re here.” However, there are many different types that can be used for different aims. “Whether or not the technology converges—that’s the big question,” he says. In effect, Eggleston and others are working on perfecting the underlying quantum technology before bringing quantum computing products and services to the market, where they stand to exponentially increase computing power across the board—something that could potentially dwarf the changes AI tech has recently brought to the world. The event also served as something of a swan song for the Bell Labs complex, as Nokia is preparing to transplant its labs and researchers to the new HELIX complex in nearby New Brunswick. That move is planned to be completed by 2028. View the full article

-

ChatGPT Will Soon Remember Everything You've Ever Told It

Be careful what you share with ChatGPT these days: It'll remember everything you say. That's because OpenAI is rolling out a new update to ChatGPT's memory that allows the bot to access the contents of all of your previous chats. The idea is that by pulling from your past conversations, ChatGPT will be able to offer more relevant results to your questions, queries, and overall discussions. Sam Altman, CEO of OpenAI, announced the changes on X, touting the usefulness of AI systems that know everything about you. ChatGPT's memory feature is a little over a year old at this point, but its function has been much more limited than the update OpenAI is rolling out today. ChatGPT could remember preferences or requests of yours (perhaps you have a favorite formatting style for summaries, or a nickname you want the bot to call you) and carry those memories along from chat to chat. However, it wasn't perfect, and couldn't naturally pull from past conversations, as a feature like "memory" might imply. Previously, the bot stored those data points in a bank of "saved memories." You could access this memory bank at any time and see what the bot had stored based on your conversations. It's a bit weird to see these entries when you didn't specifically ask ChatGPT to remember something for you, as if you found out a new friend was jotting down "useful facts" about you from past conversations. It's weird. As this feature is rolling out now, it isn't clear yet how it will affect these saved memories. In all likelihood, they'll disappear, as there's no need for a bank of specific memories when ChatGPT can simply pull from everything you've ever said to the bot. I don't personally use ChatGPT all that much outside testing new features to cover here, so I can't say whether I find this feature particularly useful or not. 
I can imagine how it might be helpful to be able to reference something you told the bot in a past conversation, especially without needing to establish the bot actually remembers that fact first, but I also don't love the idea of a chatbot "remembering" everything I've ever told it. Maybe that's because I'm not sold on the idea of generative AI as a personal assistant, or maybe it's because I'm sick of tech companies scooping so much of my data. We'll just have to see how useful this expanded memory turns out to be as users get their hands on it. This feature will roll out first to ChatGPT Plus and Pro subscribers, but there's no word at this time as to when free users can expect to try it out. Users in the U.K., EU, Iceland, Liechtenstein, Norway, and Switzerland will need to wait to use the feature as well, as local laws force extra reviews before it can launch. (Maybe all countries should force AI companies through extra reviews before shipping features.)

How to disable ChatGPT's memory

If you, like me, have reservations about your chatbot accessing every word of your past conversations, there is a way to disable this memory feature. I don't have the new feature yet, so it's possible this might change slightly. But at the moment, you can head to Settings > Personalization > Memory, then disable the toggle next to Reference saved memories. If you want to keep the memory feature on, but don't want ChatGPT to remember one chat in particular, you can launch a "temporary chat" to make sure the conversation is quarantined. (Just know OpenAI may still hold onto the transcript for up to 30 days.) View the full article

-

Wix’s New AI Assistant Enables Meaningful Improvements To SEO, Sales And Productivity via @sejournal, @martinibuster

Wix's new AI assistant enables users to improve SEO, streamline how they work, and use tools more intuitively to grow their business. The post Wix’s New AI Assistant Enables Meaningful Improvements To SEO, Sales And Productivity appeared first on Search Engine Journal. View the full article

-

Wall Street sell-off resumes as Trump’s China tariffs spook investors

Equities, dollar and oil slide as scale of US levies on Chinese imports deepens recession fears. View the full article

-

EU could tax Big Tech if Trump trade talks fail, says von der Leyen

European Commission president tells FT she wants ‘balanced’ deal but could hit US services in retaliation. View the full article

-

Here's What 'Core Sleep' Really Means, According to Your Apple Watch

We may earn a commission from links on this page.

Let's talk about one of the most confusing terms you’ll see on your fitness tracker, specifically your Apple Watch. Next to REM sleep, which you’ve probably heard of, and “deep” sleep, which feels self-explanatory, there’s “core” sleep. And if you Google what core sleep means, you’ll get a definition that is entirely opposite from how Apple uses the term. So let’s break it down.

On an Apple Watch, "core sleep" is another name for light sleep, which scientists also call stages N1-N2. It is not a type of deep sleep, and has no relation to REM. But in the scientific literature, "core sleep" is not a sleep stage at all. It can refer to a portion of the night that includes both deep and light sleep stages. There are a few other definitions, which I'll go into below. But first, since you're probably here because you saw that term in Apple Health, let's talk about how Apple uses it.

"Core sleep" in the Apple Watch is the same as light sleep

Let me give you a straightforward explanation of what you’re seeing when you look at your Apple sleep data. Your Apple Watch tries to guess, mainly through your movements, when you’re in each stage of sleep. (To truly know your sleep stages would require a sleep study with more sophisticated equipment, like an electroencephalogram. The watch is just doing its best with the data it has.) Apple says its watch can tell the difference between four different states:

- Awake
- Light (“core”) sleep
- Deep sleep
- REM sleep

These categories roughly correspond to the sleep stages that neuroscientists can observe with polysomnography, which involves hooking you up to an electroencephalogram, or EEG. (That’s the thing where they attach wires to your head.) Scientists recognize three stages of non-REM sleep, with the third being described as deep sleep. That means stages 1 and 2, which are sometimes called “light” sleep, are being labeled as “core” sleep by your wearable. 
In other words: Apple's definition of "core sleep" is identical to scientists' definition of "light sleep." It is otherwise known as N2 sleep. (More on that in a minute.)

So why didn’t Apple use the same wording as everyone else? The company says in a document on its sleep stage algorithm that it was worried people would misunderstand the term "light sleep" if it called it that:

“The label Core was chosen to avoid possible unintended implications of the term light, because the N2 stage is predominant (often making up more than 50 percent of a night’s sleep), normal, and an important aspect of sleep physiology, containing sleep spindles and K-complexes.”

In other words, Apple thought we might assume that "light" sleep is less important than "deep" sleep, so it chose a new, important-sounding name to use in place of "light." A chart on the same page lays it out: non-REM stages 1 and 2 fall under the Apple category of “core” sleep, while stage 3 is “deep” sleep. That’s how Apple defined it in testing: If an EEG said a person was in stage 2 when the watch said they were in “core,” that was counted as a success for the algorithm.

What are the known sleep stages, and where does core sleep fit in?

Let’s back up to consider what was known about sleep stages before Apple started renaming them. The current scientific understanding, which is based on brain wave patterns that can be read with an EEG, includes these stages:

Non-REM stage 1 (N1)

N1 only lasts a few minutes. You’re breathing normally. Your body is beginning to relax, and your brain waves start to look different than they do when you’re awake. This would be considered part of your “light” sleep. The Apple Watch considers this to be part of your core sleep stage.

Non-REM stage 2 (N2)

Also usually considered “light” sleep, N2 makes up about half of your sleep time. This stage includes spikes of brain activity called sleep spindles, and distinctive brainwave patterns called K complexes. 
(These are what the Apple document mentioned above.) This stage of sleep is thought to be when we consolidate our memories. Fun fact: if you grind your teeth in your sleep, it will mostly be in this stage. This stage makes up most of what Apple reports as your core sleep.

Non-REM stage 3 (N3)

N3 is often called “deep” sleep, and this stage accounts for about a quarter of your night. It has the slowest brain waves, so it’s sometimes called “slow wave sleep.” It’s hard to wake someone up from this stage, and if you succeed, they’ll be groggy for a little while afterward. This is the stage where the most body repair tends to happen, including muscle recovery, bone growth in children, and immune system strengthening. As we age, we spend less time in N3 and more time in N2. (There was an older classification that split off the deepest sleep into its own stage, calling it non-REM stage 4, but currently that deepest portion is just considered part of stage 3.)

REM sleep

REM sleep is so named because this is where we have Rapid Eye Movement. Your body is temporarily paralyzed, except for the eyes and your breathing muscles. This is the stage best known for dreaming (although dreams can occur in other stages as well). The brain waves of a person in REM sleep look very similar to those of a person who is awake, which is why some sleep-tracking apps show blocks of REM as occurring near the top of the graph, near wakefulness. We don’t usually enter REM sleep until we’ve been through the other stages, and we cycle through these stages all night. Usually REM sleep is fairly short during the beginning of the night, and gets longer with each cycle.

How much core sleep do I need?

Using Apple's definition, in which core sleep is the same as light sleep, it's normal for almost half of your sleep to be core sleep. 
Sleep scientists give an approximate breakdown (although the exact numbers may vary from person to person, and your needs aren't always the same every night):

- N1 (very light sleep): About 5% of the total (just a few minutes)
- N2 (light or "core" sleep): About 45%, so just under four hours if you normally sleep for eight hours
- N3 (deep sleep): About 25%, so about two hours if you normally sleep for eight hours
- REM: About 25%, so also about two hours

How to get more core sleep

If your Apple Watch says you're getting less core sleep than what I mentioned above, you might wonder how you can get more core (or light) sleep. Before you take any action, though, you should know that wearables aren't very good at knowing exactly what stage of sleep you are in. They're usually (but not always!) pretty good at telling when you are asleep versus awake, so they can be useful for knowing whether you slept six hours or eight. But I wouldn't make any changes to my routine based on the specific sleep stage numbers. The algorithm can easily miscategorize some of your light sleep as deep sleep, or vice versa.

That said, the best way to get more core sleep is to get more and better sleep in general. Start with this basic sleep hygiene checklist. Among the most important items:

- Give yourself a bedtime routine with at least 30 minutes of wind-down time where you try to do something relaxing.
- Have a consistent wake-up time.
- Don't look at screens right before bed.
- Keep your bedroom dark and cool.
- Don't have alcohol or caffeine in the evenings.

Improving your sleep overall will improve all your sleep stages, whether your Apple Watch can tell them apart or not.

Other ways people use the term “core sleep”

I really wish Apple had chosen another term, because the phrase “core sleep” has been used in other ways. It either doesn’t refer to a sleep stage at all, or if it is associated with sleep stages, it’s used to refer to deep sleep stages. 
In the 1980s, sleep scientist James Horne proposed that your first few sleep cycles (taking up maybe the first five hours of the night) constitute the “core” sleep we all need to function. The rest of the night is “optional” sleep, which ideally we’d still get every night, but it’s not a big deal to miss out from time to time. He described this idea in a 1988 book called Why We Sleep (no relation to the 2017 book by another author) but you can see his earlier paper on the topic here. He uses the terms “obligatory” and “facultative” sleep in that paper, and switched to the core/optional terminology later. You’ll also find people using the phrase “core sleep” to refer to everything but light sleep. For example, this paper on how sleep changes as we age compares their findings in terms of sleep stages with Horne’s definition of core sleep. In doing so, they describe core sleep as mainly consisting of deep sleep stages N3-N4 (in other words, N3 as described above). From there, somehow the internet has gotten the idea that N3 and REM are considered “core” sleep. I don’t know how that happened, and I don’t see it when I search the scientific literature. I have seen it on “what is core sleep?” junk articles on the websites of companies selling weighted blankets and melatonin gummies. For one final, contradictory definition, the phrase “core sleep” is also used by people who are into polyphasic sleep. This is the idea that you can replace a full night’s sleep with several naps during the day, something that biohacker types keep trying to make happen, even though it never pans out. They use the term pretty straightforwardly: If you have a nighttime nap that is longer than your other naps, that’s your “core sleep.” Honestly, that’s a fair use of the word. I'll allow it. So, to wrap up: Core sleep, if you’re a napper, is the longest block of sleep you get during a day. 
Core sleep, to scientists who study sleep deprivation, is a hypothesis about which part of a night’s sleep is the most important. But if you’re just here because you were wondering what Apple Health or your Apple Watch's sleep app means by "core sleep," it means stages N1-N2, or light sleep. View the full article

-

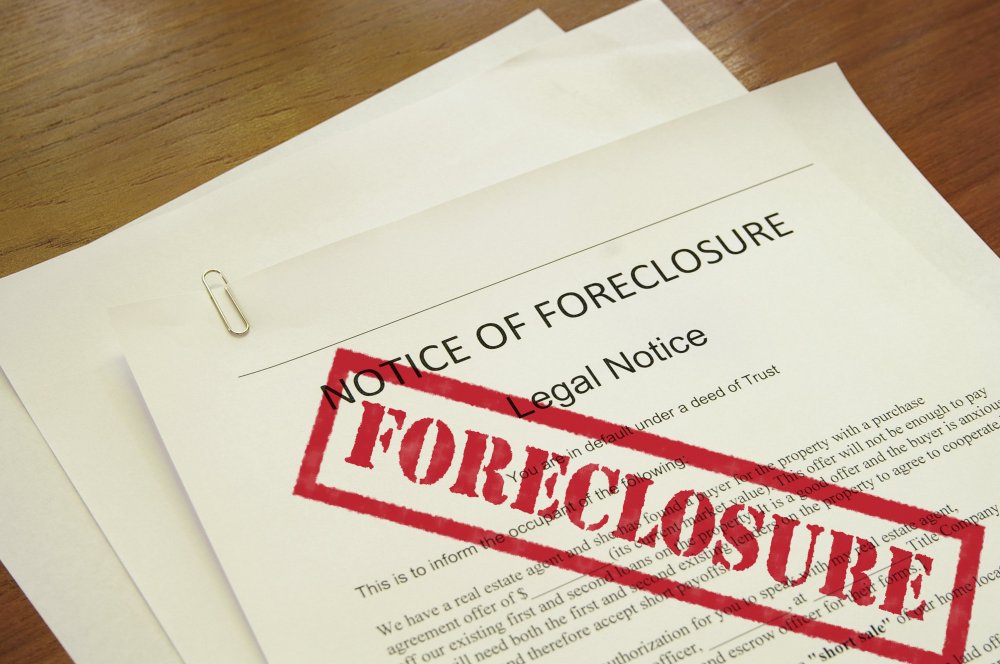

Foreclosures rise in Q1 as homeowners feel the squeeze

The latest foreclosure report adds to evidence from housing research groups that economic difficulties are beginning to impact a rising number of homeowners. View the full article

-

Kevin DeLory, Equity Prime chief lending officer, dead at 53

DeLory joined Equity Prime Mortgage from Carrington and helped to transition the Atlanta-based mortgage lender into a wholesale-only originator. View the full article

-

Try These Fixes If YouTube TV Stopped Working on Your Roku

The YouTube TV app has been disappearing for some Roku users. Luckily, if you are among those affected, there are a few fixes you can try for this issue. Starting this week, several users on both Reddit and Roku's forums have separately reported issues opening the YouTube TV app or even locating it on their Roku devices. Those who tried reinstalling the YouTube TV app were able to see an Open App button, but pressing it simply resulted in an error. What's worse is that the issues seem isolated to Roku, with users of devices like the Google TV Streamer 4K not issuing the same complaints. Luckily, this issue only seems to be impacting people with dedicated Roku devices and not smart TVs with Roku streaming built-in. Here's how you can try to bring back YouTube TV if it's disappeared from your Roku.

Fix disappearing YouTube TV app on Roku

Roku has acknowledged the problem and provided a temporary solution while it investigates. To restart using the YouTube TV app on your Roku, try manually updating the device. Follow these steps:

1. Press the Home button on the remote.
2. Select Settings.
3. Click System.
4. Go to Software update.
5. Hit Check Now to start a manual update.

Once the software update is complete, Roku says you might be able to see the YouTube TV app on your Roku device, but it's not guaranteed. If you're still experiencing the problem, some Reddit users have also proposed just accessing YouTube TV from the standard YouTube app instead, which you can find on the very bottom-left of the app's interface.

This little hiccup comes at a bad time for both Roku and YouTube. Recently, Roku has been in the news for experimenting with a new way to force users to watch ads, while YouTube TV's pricing has also been going up quite a bit. So maybe the real solution to this issue is to just stop using YouTube TV. OK, we jest, but while the app has gotten a facelift, the service may not always offer value for your money if the content you need isn't on it. View the full article

-

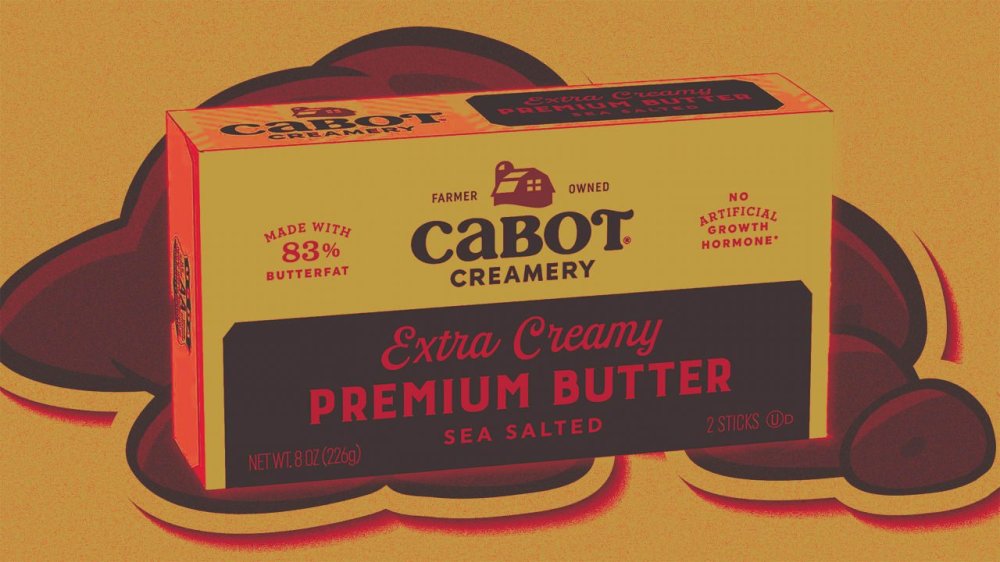

Butter recall: Cabot Creamery issues recall for possible fecal contamination

Another day, another recall. If it seems like there have been a number of recalls recently, you’re not imagining it. In the last month alone, there have been recalls for particular brands of soup, sparkling water, and vegetables. This time, it’s for butter.

What’s happened?

Agri-Mark, the maker of Cabot Creamery dairy products, conducted a voluntary recall for a single lot of its Extra Creamy Premium Butter, Sea Salted, totaling 1,700 pounds in 189 cases. Testing revealed an elevated level of coliform bacteria. The recall was initiated on March 26. Coliform bacteria often are considered indicators of fecal contamination. The U.S. Food and Drug Administration (FDA) has classified this as a Class III recall, which means the products are not likely to cause adverse health consequences. There have been no reported complaints or illnesses related to this product. “Agri-Mark successfully recovered 99.5% of the lot of the recalled product before it was sold to consumers,” the company said in a statement Wednesday. “A small amount, 17 retail packages (8.5 lbs.), was sold to consumers in Vermont,” where the company is based. Agri-Mark added that it has identified the cause and taken the appropriate internal actions to address it. No other products were affected.

What states are affected?

The recalled butter was distributed in seven states: Arkansas, Connecticut, Maine, New Hampshire, New York, Pennsylvania, and Vermont.

What product is being recalled?

- Cabot Creamery 8 oz. Extra Creamy Premium Butter, Sea Salted
- Best by date: Sept. 9, 2025
- UPC: 0 78354 62038 0
- Impacted lot code: 090925-055
- Item Number: 2038

What should I do if I have the recalled product?

First, do not eat a recalled food, even when the product in question is being recalled as a precaution, according to Foodsafety.gov. 
The company stated that “there have been a variety of news reports that are incomplete and have dramatically misrepresented this recall with respect to the risk it posed to consumers.” Consumers who have any concerns or questions about this product can contact Cabot Creamery, 888-792-2268 (weekdays, 9:30 a.m.– 5 p.m. ET) or via email here. View the full article

-

Why Canva doubled down on a musical to hype its new products

Last year, when Canva used a rap song to promote its new suite of products for businesses, the reaction online was about what you’d expect. “Call 911 I’m having a cringe overdose.” “This is Lin-Manuel Miranda’s fault.” The performance at Canva’s annual summit, Canva Create (Disclosure: Fast Company is a Create media partner), reminded many of corporate musical escapades of the past, like Bank of America’s adaptation of U2’s “One” back in 2006, or Randi Zuckerberg’s Twisted Sister-inspired ode to crypto in 2022. But for Canva, it drove attention and traffic to the brand. More than 50 million people saw the rap battle within 48 hours, which boosted social media chatter about Canva Enterprise by 2,500%. Co-founder and COO Cliff Obrecht said at the time, “Haters gonna hate.” Today at Canva’s fourth Canva Create event at Los Angeles’ SoFi Stadium, the company doubled down on that confidence and rolled out another musical number, this time to help unveil Visual Suite 2.0, which the company says is its biggest product launch since its founding in 2012. This year, the brand went for a Broadway-style tune that unspools the tale of how Canva used more than a billion pieces of user feedback to bring 45 of the most-asked-for updates to its products. The cast featured 42 performers including creator Tom McGovern, design influencer Roger Coles (who starred in last year’s performance), and a special appearance by Canva Design Advisory Board member Jessica Hische. There are also 12 members of the Singers of Soul choir and an additional 12 members of the LA Marching Band. The lyrics of the song are inspired by and quoted directly from customer requests and feedback. And in case you missed any of the promised platform upgrades live, the song will be available on Spotify. Many cringe-happy observers will be quick to ask why Canva chose to put its message to music again. 
But the move illustrates the importance of self-awareness, and how crucial it is if you don’t want to be sloshed around by the tides of pop cultural trends, and instead ... ahem ... dance to your own tune.

Community rap lessons

Last year, more than 3,500 people attended Create, while millions of others tuned in to watch remotely, including at watch parties in cities from Tokyo to Delhi. It features 100 different speakers across 60 different stages. With more than 230 million monthly users, Canva has a large, attentive audience for its content. CMO Zach Kitschke says the event is the company’s biggest moment of the year for bringing its community together. Kitschke has observed over the years how big swings can cut through the noise of so much marketing. “It’s been a principle of our marketing, really since we launched, that people will remember these heightened experiences or moments,” he says. “Over 90% of our traffic is driven by brand and word-of-mouth these days, and that has come from a fostering of community. With the rap, it could have been a very, very boring topic that no one took note of, but learned you can change the conversation in a matter of minutes by creating a moment out of something like that.”

What was often left out of the discourse around the rap last year was that the idea came from Canva user and community member Roger Coles. At a time when more brands are looking to their hardcore fans and customers for how to connect with culture, Canva did just that. And that’s why it struck up the band again. Kitschke says that community runs through everything the brand does. “That means including them in our commercials, and putting them up on stage at events like this,” he says. “It’s not just marketing, but it’s an acknowledgement. And it’s a really special way to bring people into the fold.” View the full article

-

Google officially rolls out links in AI Overviews to its own search results

A few weeks ago, we caught Google linking text within its AI Overviews to its own search results. Well, today that has become a new official feature within AI Overviews. “To help people more easily explore topics and discover relevant websites, we’ve added links to some terms within AI Overviews when our systems determine it might be useful,” a Google spokesperson told Search Engine Land. What it looks like. Here is a screenshot we posted of this back then: Clicking on those underlined links in the text of the AI Overview, both at the top and in the middle section, will take you back to a new Google Search. The smaller link icons take you to the side panel links, those go to publishers and external websites. Why. Google said they are doing this to make it easier for searchers to explore topics. Google told me they have seen that people often end up manually searching for certain terms as a separate query from these AI Overviews. Google said that during their extensive testing, they have heard from users that they find it helpful to be linked directly to a relevant results page in these cases. This helps reduce the need for searchers to enter a new query, instead they can just click on these links. Google says this leads to a “much better search experience.” Google’s systems prioritize linking to third party websites within the AI Overview response when Google has a high confidence that those websites will help the user find the information they’re seeking, a Google spokesperson told me. Where. Google said this new feature is available in English in the US, on both mobile and desktop. Why we care. Publishers have been begging Google to send them more traffic through Google Search. Now, with this new feature officially launching, you have to assume Google will send less traffic to publishers and more traffic to its own search results. Again, Google says this is about giving searchers what they want and making it easier for them to explore topics. 
But again, for publishers and site owners, this may not be a good thing. View the full article