Everything posted by ResidentialBusiness

-

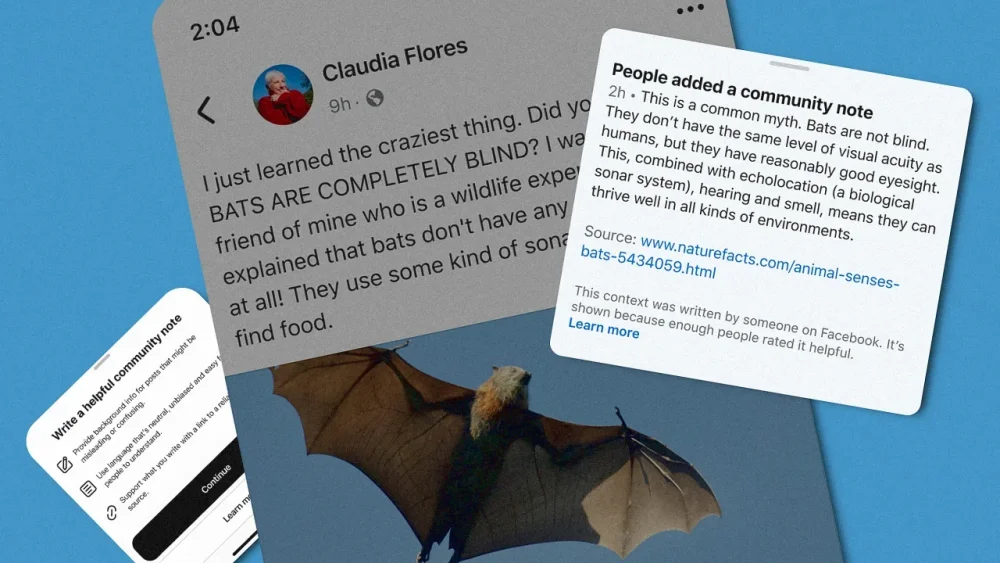

Meta launches Community Notes, a whitewashed version of Elon Musk’s fact-checking system

In January, Meta announced the end of third-party fact checkers on Facebook, Instagram, and Threads. The tech giant is betting on a new, community-driven system called Community Notes that draws on X’s feature of the same name and uses X’s open algorithm as its basis. Meta is rolling out the feature today. Anyone who wants to write and rate community notes can sign up now. The rollout will be throttled and, initially, notes won’t appear publicly, as Meta claims it needs time to feed the algorithm and ensure the system is working properly.

The promise is enticing: a more scalable, less biased way to flag false or misleading content, driven by the wisdom of the crowd rather than the judgment of experts. But a closer look at the underlying assumptions and design choices raises questions about whether this new system can truly deliver on its promises. The concept, its UX implementation, and the underlying technology surface challenges that, in my conversations with Meta’s designers, don’t seem to have any clear, categorical answer. It feels more like a work in progress than a clear-cut answer to the shortcomings of third-party fact checking.

Currently, Meta’s Community Notes are exclusively accessible on mobile devices within the Facebook, Instagram, and Threads apps. The mobile-first approach likely reflects the platform’s primary user base and usage patterns. Users who are eligible to contribute to Community Notes, after meeting specific criteria such as having a verified account and a history of platform engagement, can apply to be a contributor and add context to posts they believe contain misinformation (200,000 have already done so in the U.S., Meta tells me). Once in, they’ll find an option within the post’s menu to “Add a Community Note.” This triggers an overlay screen with a simple text editor that has a 500-character limit.
The design also requires users to include a link, adding a layer of credibility to the note (although the link may not be a reliable source). [Images: Meta]

Once a note is submitted, it’s evaluated by other Community Notes contributors. Meta uses X’s open-source algorithm (which may evolve later as the company learns more about how it all really works, Meta says) to determine whether the note is helpful and unbiased. The algorithm considers various factors, like the contributor’s rating history and whether individuals who typically disagree with certain types of notes approve of it or not. Allegedly, the latter is the firewall against coordinated activism targeting certain types of posts (although the algorithm hasn’t proved to be totally effective on X). The evaluation interface presents contributors with a clear and straightforward way to rate the note’s quality and helpfulness: a simple thumbs up/thumbs down system, which leads to another overlay menu in which they can select why they chose their option.

Meta claims that if a note reaches a consensus among contributors with diverse viewpoints, it will then be publicly displayed beneath the original post, providing additional context without directly altering the post’s visibility or reach. The design aims to present the note as an informative supplement rather than a definitive judgment, allowing users to make their own informed decisions. [Images: Meta]

An unsolvable problem?

While the idea of crowdsourced fact-checking holds some theoretical appeal, Meta’s implementation appears to be riddled with the same vulnerabilities and unanswered questions that have affected X. On Elon Musk’s platform, Community Notes have failed to actually fact check. They also suffer from extreme latency, or the amount of time that notes take to appear.
A report from Bloomberg found that a typical note took seven hours to show up on the platform, but some take as long as 70 hours, meaning false posts can go viral before they get checked. Community Notes on X have also failed to reduce engagement with false information. And because just 12.5% of Community Notes are seen, much of their value to the community is lost. And let’s not forget the potential to get gamed by particular interests. Meta’s own oversight board has pointed out “huge problems” with the plan.

Still, the company’s rationale for favoring Community Notes over third-party fact checkers hinges on two key arguments: scalability and reduced bias. Traditional fact-checking is a labor-intensive process that struggles to keep pace with the deluge of content on social media. That makes sense. By enlisting community members to flag and contextualize posts, Meta hopes to cover a much wider range of potentially problematic material.

The social media company also argues that relying on a diverse group of contributors will mitigate the perceived bias of professional fact-checkers, who are often accused of political partisanship. The company and the designers cited a 2021 study by Allen et al., published in the journal Science Advances and titled “Scaling up fact-checking using the wisdom of crowds,” as evidence that balanced crowds can achieve accuracy comparable to experts.

Cracks in the foundation

A critical examination of the study reveals a significant gap between the research and Meta’s proposed implementation. The study explicitly required political balancing of raters to achieve accurate results. Meta, on the other hand, has not clearly explained how it will ensure viewpoint diversity among contributors without collecting sensitive political data. According to Meta’s designers, achieving diversity without directly collecting political data is a multifaceted challenge.
They stated that they plan to use a combination of factors, such as a user’s past interactions, engagement with different types of content, and network connections, to infer a range of viewpoints. Their hope is that this approach will allow the system to identify and prioritize contributors who represent a variety of perspectives, though they admitted it was an ongoing area of development.

Furthermore, the study only assessed the accuracy of headlines and ledes, not full articles. This raises serious concerns about the system’s ability to handle complex or nuanced misinformation, where the truth may lie in the details. The community note’s limit of 500 characters adds to this concern. When I asked how it would be possible to truly add deep context to a post in which the truth is not binary (and let’s face it, it almost never is), there wasn’t a clear answer, just a silence followed by the explanation that they could always expand the length if users demand it. Links to external sources can be included to provide more in-depth information, though they admitted that this adds another step for the reader to take. It’s hard to imagine people clicking through in this era of content fast food. [Images: Meta]

The company doesn’t have a plan for addressing one of the biggest issues with tackling misinformation, one that affects both Community Notes and third-party fact checking: the implied truth effect. Research shows that “attaching notes to a subset of fake news headlines increases perceived accuracy of headlines without warnings.” In the absence of these new notes, people might make the false assumption that a post is true.

Meta’s designers say it will take about the same time it takes on X for notes to go through the community fact-checking process, which means there will be plenty of time for fake news to go viral.
Furthermore, X has shown that only a small percentage of posts get annotated, so the implied truth effect will, no doubt, be felt in Meta’s implementation of the same technology, at least in its current state. The old third-party fact checking suffered from similar latency problems.

No penalty

Under the previous system, posts that fact-checkers identified as false or misleading had their distribution reduced. Community Notes, in contrast, will simply provide additional context, without impacting the reach of the original content. This decision flies in the face of research suggesting that warnings alone are less effective than warnings combined with reduced distribution.

Meta says it wants to prioritize providing users with context rather than suppressing content. Its belief is that users can make their own informed decisions when presented with additional information. The fear is that demoting posts could lead to accusations of censorship and further erode trust in the platform.

Meta says it will be monitoring the system, evaluating latency, coverage, and the downstream effects on viewership and sharing, and using those metrics to guide future work, refinements, testing, and iterations. But Meta says there has been no A/B testing of Community Notes to see how it performs versus third-party fact-checking. Rather, the company is using this initial rollout phase as a public beta test, as a way to feed the algorithm with data from contributors so the system can get up and running.

Fear, uncertainty, lots of doubt

Twitter rolled out its proto version of Community Notes in 2020. Called Birdwatch, it has continued to evolve with mixed results since Elon Musk took over and rebranded it with the current moniker. While Meta will use X’s open source algorithm as the basis of its rating system, feeding it with enough information to be operative could take quite a while.
According to the Meta designers, the initial lack of public visibility is intended to allow them to train and thoroughly test the system and identify any potential problems before rolling it out to a wider audience. Meta isn’t saying how the notes will appear to all users, only pointing out in a press release that “the plan is to roll out Community Notes across the United States once we are comfortable from the initial beta testing that the program is working in broadly the way we believe it should.” Meta says it will gradually increase the visibility of the notes as it gains confidence in the system’s effectiveness, but did not provide a specific timeline or metrics for success. In a bid for transparency, Meta will release the algorithms that it uses. It’s yet to be seen if Meta’s Community Notes will be more effective than the previous third-party fact checking process. Nothing in the user experience suggests that it can solve the problems that X has had; logically, we can expect Meta to have many of the same issues, as well. In a historical moment where the truth is treated like malleable material, we could use a lot more certainties. Meta may have missed the chance to scientifically develop a new, non-derivative user experience that could avoid X’s problems. Instead, we are getting Musk’s broken toy with a coat of paint and the hope that, magically, it may work this time. View the full article
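The cross-viewpoint check described in this article (a note is shown only when contributors who usually disagree both find it helpful) can be illustrated with a toy rule. This is a minimal sketch, not X's or Meta's algorithm: the open-source scorer is considerably more involved, and the group labels, thresholds, and function name below are all hypothetical.

```python
from collections import defaultdict

def note_is_shown(ratings, rater_group, min_per_group=2, min_helpful_share=0.6):
    """Toy 'bridging' rule: show a note only if every viewpoint group
    independently rates it helpful.

    ratings: list of (rater_id, helpful) pairs, where helpful is a bool
    rater_group: dict mapping rater_id to a group label (hypothetical; how
                 viewpoints would be inferred is not specified in the article)
    """
    votes = defaultdict(list)
    for rater_id, helpful in ratings:
        group = rater_group.get(rater_id)
        if group is not None:          # ignore raters with no inferred group
            votes[group].append(helpful)

    groups = set(rater_group.values())
    for group in groups:
        group_votes = votes.get(group, [])
        if len(group_votes) < min_per_group:
            return False               # not enough ratings from this side yet
        if sum(group_votes) / len(group_votes) < min_helpful_share:
            return False               # this side does not find it helpful
    return len(groups) >= 2            # "consensus" needs at least two sides
```

Under this rule, a burst of approving ratings from one side alone never publishes a note, which is the intended firewall. It also makes the latency trade-off visible: a note waits until enough raters from every group have weighed in.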

-

Blood moon 2025: Best time to see the March total lunar eclipse, supermoon, and full ‘worm’ moon

Tonight will be the perfect evening to stay up late in the United States, because the moon is going to put on a spectacular show. In the wee hours of the morning, night owls can witness a full blood moon, supermoon, “worm” moon, and a total lunar eclipse. Beyond the United States, parts of Europe, Australia, Asia, South America, and Africa can also get in on the action. Let’s take a deeper look at what that means and how you can see the spectacle:

The March 2025 full moon is many things

According to NASA, in the 1930s the Maine Farmers’ Almanac started publishing names for the full moons based on Native American traditions. These took off and became the preferred monikers for the celestial phenomenon. Each tribe had its own unique name. Northeastern and Southern tribes called the full moon in March the Worm Moon, because of the worm castings (worm waste) found during the month. Northern tribes called it the Sap or Sugar Moon, because it was time to tap maple trees.

What is a total lunar eclipse?

There are different types of lunar eclipses, NASA says. The Earth orbits the sun and the moon orbits the Earth. When these three line up, the moon enters the Earth’s shadow, creating a lunar eclipse. When the whole moon is in the darkest part of Earth’s shadow, called the umbra, a total lunar eclipse occurs.

What is a blood moon and why is it red?

During a total lunar eclipse, the moon appears to have a red tint. This is where the term “blood moon” comes from. A blood moon gets its hue from sunlight passing through Earth’s atmosphere. During a total lunar eclipse, Earth is positioned between the sun and the moon. Even though the sun’s direct light is blocked, the Earth’s atmosphere refracts some light, which travels on to the moon. Because red light has a longer wavelength, it scatters less in the atmosphere than blue light and has an easier time reaching the moon, giving it the red tint.

What makes this moon a supermoon?

While this is marvelous to look at, there are no superheroes here.
A supermoon means the moon is at its closest point to Earth during a full moon.

How and when can I see the eclipse and blood moon?

The moon will be in totality (completely eclipsed) for around 65 minutes on Thursday night, although it will technically be in the early-morning hours of Friday, March 14, for most time zones. According to Space.com, this will occur:

- Eastern Time: 2:26 a.m.–3:31 a.m.
- Central Time: 1:26 a.m.–2:31 a.m.
- Pacific Time: 11:26 p.m.–12:31 a.m.

Diehard skygazers can make the most of the experience by heading outside around 75 minutes before totality to witness the moon travel fully through Earth’s shadow. It is best seen away from the bright lights of the city. One advantage of a lunar eclipse is that viewers don’t have to worry about hurting their eyes, unlike with a solar eclipse. No special equipment is needed, but binoculars and telescopes would help you see the moon in greater detail. Happy viewing! View the full article
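For readers outside the time zones listed above, the totality window can be converted with a few lines of arithmetic. This is a small sketch under stated assumptions: the UTC times are back-computed from the Eastern Time figures quoted in the article, and the offsets are hard-coded daylight-time values rather than looked up in a time zone database.

```python
from datetime import datetime, timedelta, timezone

# Totality window in UTC, derived from the quoted 2:26 a.m. to 3:31 a.m.
# Eastern window (Eastern Daylight Time is UTC-4; DST began March 9, 2025).
TOTALITY_START = datetime(2025, 3, 14, 6, 26, tzinfo=timezone.utc)
TOTALITY_END = datetime(2025, 3, 14, 7, 31, tzinfo=timezone.utc)

# Assumption: fixed daylight-time offsets for mid-March 2025, in hours.
# Places that do not observe daylight saving time need a different offset.
OFFSETS = {"Eastern": -4, "Central": -5, "Mountain": -6, "Pacific": -7}

def local_window(zone):
    """Return (start, end) of totality as datetimes in the given zone."""
    tz = timezone(timedelta(hours=OFFSETS[zone]))
    return TOTALITY_START.astimezone(tz), TOTALITY_END.astimezone(tz)

def fmt(dt):
    return dt.strftime("%b %d %I:%M %p")

for zone in OFFSETS:
    start, end = local_window(zone)
    print(f"{zone}: {fmt(start)} to {fmt(end)}")

print("Duration:", TOTALITY_END - TOTALITY_START)
```

The Pacific window is the only one that begins before midnight, which is why the article describes the event as falling on Thursday night for some viewers and Friday morning for others.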

-

Looking for your next job? Use this AI trick to make a list of potential companies in seconds.

Identifying which companies you hope to work for is one of the biggest hurdles job seekers face. I know this because I was a search consultant for over 25 years. Now, I have an executive résumé and LinkedIn profile writing practice. And my clients almost always ask questions about how to find future employers.

I advise them to use AI chatbot platforms like ChatGPT, Claude AI, and Perplexity. To help the platforms work their magic, I encourage them to use NAICS codes in their prompts. Here’s how to do this:

What are NAICS codes?

NAICS is the acronym for the North American Industry Classification System. It assigns six-digit codes to companies as follows:

- The first two digits in a NAICS code identify economic sectors (e.g., 23 for Construction). NAICS has 20 sectors.
- The third and fourth digits divide economic sectors into subsectors. Example: 23 becomes 2382 for Building Equipment Contractors, a type of construction company. NAICS has 99 subsectors.
- The fifth and sixth digits divide economic subsectors into industries. Example: 2382 becomes 238220 for Plumbing, Heating, and Air-Conditioning Contractors, a type of building equipment contractor. NAICS has 1,000-plus industries.

Thus, you can use NAICS codes at different levels to identify where you want to work. Once you know that, you can ask AI chatbot platforms to find companies in those NAICS codes.

How AI chatbots can help find companies

I asked ChatGPT how it finds companies. It searches for and analyzes public information from filings, directories, and the internet. It does in a minute or two what would take a job seeker hours, days, or weeks. I ran several searches on different platforms to show you how to use these chatbots to speed up your job search. You can see my prompts and results below.

Prompts to find target companies

I used these prompts to find companies by industry, location, and size:

Prompt 1: Please list all the companies in NAICS code 713210 (Casinos) in Nevada.
Claude AI provided a list of 55 large casinos. When I asked it to limit its results to Reno, it gave me 20 casino and gaming establishments.

Prompt 2: List the 20 largest companies in the US in NAICS code 221115 (Wind Electric Power Generation).

Perplexity listed 20 companies. When asked, it also shared locations, descriptions, and the 21st through 40th-largest companies.

Prompt 3: List the companies in NAICS code 441110 (New Car Dealers) in Washington State’s King County.

Perplexity named 17 dealerships, which was a good start but not comprehensive. ChatGPT wouldn’t answer my query. Instead, it suggested I use Data Axle Reference Solutions, which I have recommended for years. DARS has a database of almost 100 million U.S. businesses. It’s the ultimate resource if you hit a dead-end finding target employers, and it’s searchable by NAICS codes.

Prompts to find recruiting, private equity, and venture capital firms

Job seekers also want to find potential sources of opportunities, such as recruiting and private investment firms. To identify these targets, I used the following prompts. They included a subsector, industries, and specific investment strategies:

Prompt 1: Please list search firms that recruit executives for companies in NAICS code 3254 (Pharmaceutical & Medicine Manufacturing).

ChatGPT provided a list of 25 firms, although I had to re-prompt it with “Any more?” several times.

Prompt 2: List venture capital firms that invest in AI start-ups (NAICS code 541745).

ChatGPT provided a list of 28 firms. While I had to re-prompt it with “Any more?” several times, I stopped asking before it was done sharing firms.

Prompt 3: Please list private equity firms that acquire turnaround clothing retailers (NAICS code 458110).

ChatGPT provided a list of 17 firms. Again, I re-prompted it several times. Perplexity, prompted and re-prompted, gave me a list of 18 firms.

You can use different platforms and variables at will. Doing so enables you to assemble lists of potential target companies in minutes. View the full article
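The digit-by-digit breakdown described in this article lends itself to a small helper for generating prompts at different levels. This is a minimal sketch that follows the article's simplified two/four/six-digit scheme (the official NAICS structure also names the three- and five-digit levels, which are skipped here); the function names and the prompt wording are illustrative assumptions.

```python
def naics_levels(code: str) -> dict:
    """Split a six-digit NAICS code into the three levels used in the article."""
    if len(code) != 6 or not code.isdigit():
        raise ValueError(f"expected a six-digit NAICS code, got {code!r}")
    return {
        "sector": code[:2],
        "subsector": code[:4],   # the article's term for the four-digit level
        "industry": code,
    }

def build_prompt(code: str, label: str, place: str, level: str = "industry") -> str:
    """Build a prompt in the style of the article's examples (wording is illustrative)."""
    prefix = naics_levels(code)[level]
    return f"Please list the companies in NAICS code {prefix} ({label}) in {place}."

print(naics_levels("238220"))
print(build_prompt("713210", "Casinos", "Nevada"))
```

Passing `level="subsector"` or `level="sector"` widens the search to the broader category, which can help when an industry-level prompt returns too few companies. The label should be changed to match the broader category in that case.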

-

My Favorite Recipes to Get the Most Out of Your Dutch Oven

It took years of convincing before I finally started cooking with a Dutch oven, but once I started it quickly became my favorite pot to cook with. This dense and nearly indestructible pot is as versatile as it is beautiful to look at. The heavy cast iron allows for evenly distributed heat, and the glossy enameled surface is easy to clean. Plus they come in a rainbow of colors, which appeals to my need for whimsy in the kitchen.

Contrary to what you might think, a good Dutch oven can be affordable too (as in, not $300). Read here for alternatives to the high-priced French brands. My brandless Dutch oven has been braising, baking, and frying for years and she has many more years left. Here are my favorite recipes and ways to use a Dutch oven to the fullest.

One-pot meals

Credit: Allie Chanthorn Reinmann

Few things are as relieving to me as not having a sink full of dishes, and the Dutch oven offers me this blessing. It’s the perfect solution for one-pot meals because cast iron is up to the challenge of multiple cooking methods. It can spend hours on heat without scorching, and it can move seamlessly from the stovetop to the oven if you’re searing and braising.

This savory sausage and beans recipe is my favorite one-pot dish. It’s flavorful, hearty, and requires very little attention. Aside from roughly chopping the veg, you barely have to prepare the ingredients. Leave the sausages whole and sear the links on all sides. Then simply dump in the rest of the ingredients and let them simmer until fork tender.

Low and slow cooking

Credit: Allie Chanthorn Reinmann

The Dutch oven excels at low and slow cooking. Again, it’s that clever cast iron core. You can set the pot over low heat for hours and tough, chewy meats will tenderize into soft, buttery morsels.

One of my favorite meals to make is this crispy chicken and rice recipe. While chicken doesn’t require tenderizing, rice and other dried grains and beans benefit from low and slow cooking.
This dish is hearty, simple, and packed with bright flavors, thanks to the tomatoes and olives. Start by searing chicken thighs, skin-side down, and then cook the remaining ingredients. Nestle the thighs back into the rice during the last bit of cooking time for juicy chicken with crispy skin.

Homemade breads

I’ve boasted already about the Dutch oven’s heavy duty cast iron construction, and I’ll never stop. These pots are called “ovens” for a reason: they’re great for baking. Dutch ovens can comfortably withstand temperatures of 500°F and sometimes higher (just check the details of the particular one that you buy). High temperatures like that are great for homemade breads, like this sourdough recipe I always make in my Dutch oven. Putting the lid on the pot encloses the bread in a small space and naturally creates a humid environment as the bread begins to cook. The extra humidity allows for maximum oven spring, leaving you with a well-risen loaf.

For a bread that doesn’t require proofing, try this Irish soda bread recipe in your Dutch oven. It only requires four ingredients, and the resulting quick bread is fantastic with a smear of salty butter and raspberry jam.

Deep frying

Credit: Allie Chanthorn Reinmann

One of the biggest challenges that comes with deep frying is keeping the temperature consistent. When you drop cold or room temperature batter into hot oil, it’s normal for the oil’s overall temperature to drop. The bigger the batch, the more the temperature will dip, potentially resulting in a greasy batter. The best solution is to use a heavy pot. Unlike a thin-walled pot, the Dutch oven will help keep the temperature consistent so you can deep fry bigger batches.

My favorite recipe that requires deep frying is this instant pancake apple fritter recipe. You can make this in a small pot, cooking one at a time, but I suggest the wider frying pool of a Dutch oven for cooking bigger batches.

Big batches of soup

Soup is the best way to show off your Dutch oven.
You can easily find recipes that are one pot, low and slow, and undemanding. Sure, not every soup is like this, but I’m partial to the ones that are. It’s nice to carelessly toss ingredients into a giant pot and come back to reveal an impressive meal.

This French onion soup recipe is one of my go-tos because it doesn’t require any babysitting. The onions soften easily in the pot and caramelize gently without too much intervention. The beef broth fills out the soup, while wine and herbs bring in complexity. Other than that, you just have to broil some stretchy cheese onto a slice of bread before digging in.

In fact, I think broiling some stretchy cheese fits into all the recipes above (even the fritters). Enjoy your Dutch oven explorations, folks.

Bonus tip: Use it as a cooler

The same qualities that allow a Dutch oven to hold onto heat also enable it to maintain a chill. I use my Dutch oven as an attractive drink cooler for parties. It’s especially helpful when your fridge is packed and you could use a little extra external space. About an hour before your guests come over, put the Dutch oven in the fridge (the lid too if you’ll be chilling cans). Take the pot out and fill it halfway with ice. Tuck bottles of chilled wine, pre-mixed cocktails, or cans of seltzer into the ice and freshen it up with new ice when needed. View the full article

-

How to withstand algorithm updates and optimize for AI search

The SEO industry is undergoing a profound transformation in 2025. As large language models (LLMs) increasingly power search experiences, success now depends on withstanding traditional algorithm fluctuations and strategically positioning brands within AI knowledge systems. This article explores key insights and practical implementation steps to navigate this evolving landscape.

Withstanding algorithm updates in 2025

Traditional algorithm updates remain a reality, but our approach to handling them must evolve beyond reactive tactics. The typical SEO response to traffic fluctuations follows a familiar pattern:

- Identify the drop date.
- Cross-check with known updates.
- Audit on-site changes.
- Analyze content.
- Review backlinks.
- Check competitors.
- Look for manual actions.

This reactive methodology is no longer sufficient. Instead, we need data-driven approaches to identify patterns and predict impacts before they devastate traffic. Let me share three key strategies.

Breaking down the problem with granular analysis

The first step is drilling down to understand what changed after an update.

- Was the entire website affected, or just certain pages?
- Did the drop affect specific queries or query groups?
- Are particular sections or content types (like product pages vs. blog posts) impacted?

Using filtering and segmentation, you can pinpoint issues with precision. For example, you might discover that a traffic drop:

- Primarily affected product pages rather than blog content.
- Or specifically impacted a single category despite maintaining rankings, potentially due to a SERP feature drawing clicks away from organic listings.

Leveraging time series forecasting

One of the most powerful approaches to algorithm analysis is using time series forecasting to establish a baseline of expected performance. Meta’s Prophet algorithm is particularly effective for this purpose, as it can account for:

- Daily and weekly traffic patterns.
- Seasonal fluctuations.
- Overall growth or decline trends.
- Holiday effects.

By establishing what your traffic “should” look like based on historical patterns, you can clearly identify when algorithm updates cause deviations from expected performance. The key metric here is the difference between actual and forecasted values. By calculating these deviations and correlating them with Google’s update timeline, you can quantify the impact of specific updates and distinguish true algorithm effects from normal fluctuations.

SERP intent classification

As search engines’ understanding of user intent evolves, tracking intent shifts becomes crucial. By analyzing how Google categorizes and responds to queries over time, you can identify when the search engine’s perception of user intent changes for your target keywords. This approach involves:

- Classifying search queries by intent (informational, commercial, navigational, etc.).
- Monitoring how SERP layouts change for each intent type.
- Identifying shifts in how Google interprets specific queries.

When you notice declining visibility despite stable rankings, intent shifts are often the culprit. The search engine hasn’t necessarily penalized your content. It’s simply changed its understanding of what users want when they search those terms.

The rise of AI-driven search and entity representation

While traditional algorithm analysis remains important, a new frontier has emerged: optimizing for representation within AI models themselves. This shift from ranking pages to influencing AI responses requires entirely new measurement and optimization approaches.

Measuring brand representation in AI models

Traditional rank tracking tools don’t measure how your brand is represented within AI models. To fill this gap, we’ve developed AI Rank, a free tool that directly probes LLMs to understand brand associations and positioning.
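As an aside on the time series forecasting strategy above, the "actual minus forecast" metric can be illustrated without any forecasting library. This is a minimal sketch that swaps in a much simpler baseline than Prophet (the historical average for each day of the weekly cycle, with no trend or holiday handling); the function names and the three-standard-deviation threshold are assumptions made for illustration.

```python
from statistics import mean, stdev

def weekday_baseline(history):
    """history: daily click counts, oldest first (at least two full weeks).
    Returns the historical mean for each position in the 7-day cycle."""
    return [mean(history[i::7]) for i in range(7)]

def deviations(history, actual, threshold=3.0):
    """Compare post-update `actual` values against the weekly baseline.

    Returns one (forecast, deviation, flagged) tuple per day, where `flagged`
    means the deviation exceeds `threshold` standard deviations of the
    residuals seen in the historical period.
    """
    baseline = weekday_baseline(history)
    residuals = [value - baseline[i % 7] for i, value in enumerate(history)]
    spread = stdev(residuals)
    results = []
    for offset, value in enumerate(actual):
        position = (len(history) + offset) % 7   # continue the weekly cycle
        forecast = baseline[position]
        deviation = value - forecast
        flagged = spread > 0 and abs(deviation) > threshold * spread
        results.append((forecast, deviation, flagged))
    return results
```

Days flagged here are candidates to line up against the dates of known updates. A real analysis would use a model that also captures trend, seasonality, and holidays, as the section describes, since a flat weekly average will misread steady growth or decline as a deviation.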
Brand AI visibility tracking

Here, I’ll illustrate the approach to measuring and interpreting AI visibility for one participating brand. We utilize two prompt modes and collect this data on a daily basis:

- Brand-to-Entity (B→E): “List ten things that you associate with Owayo.”
- Entity-to-Brand (E→B): “List ten brands that you associate with custom sports jerseys.”

This bidirectional analysis creates a structured approach to AI model brand perception. The analysis performed after two weeks of data collection revealed that this brand is strongly associated with:

- “Custom sportswear” (weighted score 0.735).
- “Team uniforms” (0.626).

This shows strong alignment with their core business. However, when looking at which brands AI models associate with their key product categories, dominant players like Nike (0.835), Adidas (0.733), and Under Armour (0.556) consistently outrank them.

Tracking association strength over time

In addition to an aggregate overview, tracking how these associations evolve daily is important, revealing trends and shifts in AI models’ understanding. What do AI models associate this brand with, and how does this perception change over time? For this brand, we observed that terms like “Custom Sports Apparel” maintained strong associations, while others fluctuated significantly. This time-series analysis helps identify stable brand associations and those that may be influenced by recent content or model updates.

Competitive landscape analysis

When analyzing which brands AI models associate with specific product categories, clear hierarchies emerge.

[Figure: Custom Basketball Jerseys – OpenAI – Ungrounded Responses]

For “Custom Basketball Jerseys,” Nike consistently holds Position 1, with Adidas and Under Armour firmly in Position 2 and Position 3, but where is Owayo? This visualization exposes the competitive landscape from an AI perspective, showing how challenging it will be to displace these established associations.

Grounded vs. ungrounded responses

A particularly valuable insight comes from comparing “grounded” responses (influenced by current search results) with “ungrounded” responses (from the model’s internal knowledge).

[Figure: Custom Basketball Jerseys – Google – Grounded Responses]

[Figure: Custom Basketball Jerseys – Google – Ungrounded Responses]

This comparison reveals gaps between current online visibility and the AI’s inherent understanding. Ungrounded responses show stronger associations with cycling and esports jerseys, while grounded responses emphasize general custom sportswear. This highlights potential areas where their online content might be misaligned with their desired positioning.

Strategic implications: Influencing AI representation

These measurements aren’t just academic; they’re actionable. For this particular brand, the analysis revealed several strategic opportunities:

- Targeted content creation: Developing more content around high-value associations where they weren’t strongly represented.
- Entity relationship strengthening: Creating explicit content that reinforces the connection between their brand and key product categories.
- Competitive gap analysis: Identifying niches where competitors weren’t strongly represented.
- Dataset contribution: Publishing structured datasets on Hugging Face that establish their expertise in specific sportswear categories.

Implementing a proactive AI strategy

Based on these insights, here’s how forward-thinking brands can adapt to the AI-driven search landscape.

Direct dataset contributions

The most direct path to influence AI responses is contributing datasets for model training:

- Create a Hugging Face account (huggingface.co).
- Prepare structured datasets that prominently feature your brand.
- Upload these datasets for use in model fine-tuning.

When models are trained using your datasets, they develop stronger associations with your brand entities.
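The article reports weighted association scores such as 0.735 without saying how they are computed. One plausible way to turn daily ranked lists into a score between 0 and 1 is a position-weighted average, sketched below. The weighting scheme and function name are assumptions for illustration, not the formula used by the tool described above.

```python
from collections import defaultdict

def weighted_association_scores(responses, list_length=10):
    """responses: list of ranked lists (one per probe), most associated first.

    Each mention earns a position weight from 1.0 (first place) down to
    1/list_length (last place). Weights are averaged over all responses, so
    an item listed first in every response scores 1.0 and an item that is
    never mentioned does not appear. Assumed weighting, for illustration only.
    """
    totals = defaultdict(float)
    for ranked in responses:
        seen = set()
        for position, item in enumerate(ranked[:list_length]):
            key = item.strip().lower()
            if key in seen:            # count each item once per response
                continue
            seen.add(key)
            totals[key] += (list_length - position) / list_length
    count = len(responses)
    if count == 0:
        return {}
    return {item: total / count for item, total in totals.items()}
```

Because the average is taken over every response, a brand that appears only occasionally is penalized even when it ranks high on the days it does appear. That matches the intuition that stable associations should outscore sporadic ones.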
Creating RAG-optimized content Retrieval-augmented generation (RAG) enhances LLM responses by pulling in external information. To optimize for these systems: Structure content for easy retrieval: Use clear, factual statements about your products/services. Provide comprehensive product information: Include detailed specifications and use cases. Craft content for direct quotability: Create concise, authoritative statements that RAG systems can extract verbatim. Building brand associations through entity relationships LLMs understand the world through entities and their relationships. To strengthen your brand’s position: Define clear entity relationships: “Owayo is a leading provider of custom cycling jerseys.” Create content that reinforces these relationships: Expert articles, case studies, authoritative guides. Publish in formats that LLMs frequently index: Technical documentation, structured knowledge bases. Measure, optimize, repeat Implement continuous measurement of your brand’s representation in AI systems: Regularly probe LLMs to track brand and entity associations. Monitor both grounded and ungrounded responses to identify gaps. Analyze competitor positioning to identify opportunities. Use insights to guide content strategy and optimization efforts. From SEO to AI influence The shift from traditional search to AI-driven information discovery requires a fundamental strategic revision. Rather than focusing solely on ranking individual pages, forward-thinking marketers must now: Use advanced forecasting to better understand algorithm impacts. Monitor SERP intent shifts to adapt content strategy accordingly. Measure brand representation within AI models. Strategically influence training data to shape AI understanding. Create content optimized for both traditional search and AI systems. By combining these approaches, brands can thrive in both current and emerging search paradigms. 
The future belongs to those who understand how to shape AI responses, not just how to rank pages.

Future work

Savvy data scientists will notice that some data tidying is in order, starting with normalizing terms by removing capitalization and various artifacts (e.g., numbers before entities). In the coming weeks, we’ll also work on better concept merging/canonicalization, which can further reduce noise, and we may even add a named entity recognition model to aid the process. Overall, we feel that much more can be derived from the collected raw data and invite anyone with ideas to contribute to the conversation.

Disclosure and acknowledgments: AI visibility data was collected via AI Rank with written permission from Owayo brand representatives for exclusive use in this article. For other uses, please contact the author.

[Watch] How to withstand Google algorithm updates in 2025

Watch my SMX Next session for more insights on how to improve your site and withstand future algorithm updates.

View the full article
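The tidying described under "Future work" (lowercasing terms and removing numbers that precede entities) could be sketched roughly as follows, together with the grounded versus ungrounded comparison discussed earlier in the article. The function names, the regular expression, and the sample terms are assumptions made for illustration; they are not the author's pipeline or real AI Rank output.

```python
import re
from collections import Counter

# Matches a leading list number such as "1. ", "2) " or "3 - ".
# Assumption: a number followed by punctuation or whitespace is an artifact.
# "3D jerseys" is left alone, but a real name such as "100 Thieves" would be
# clipped, so the output still needs a manual review.
_LEADING_NUMBER = re.compile(r"^\s*\d+(?:\s*[.):-]\s*|\s+)")
_WHITESPACE = re.compile(r"\s+")


def normalize_term(raw):
    """Strip a leading list number, collapse whitespace, and lowercase."""
    term = _LEADING_NUMBER.sub("", raw)
    term = _WHITESPACE.sub(" ", term).strip()
    return term.lower()


def count_terms(raw_terms):
    """Count how often each normalized term appears, ignoring empty results."""
    counts = Counter(normalize_term(term) for term in raw_terms)
    counts.pop("", None)
    return counts


def association_gap(grounded_raw, ungrounded_raw):
    """Split normalized terms into grounded-only, ungrounded-only, and shared."""
    grounded = set(count_terms(grounded_raw))
    ungrounded = set(count_terms(ungrounded_raw))
    return {
        "only_grounded": sorted(grounded - ungrounded),
        "only_ungrounded": sorted(ungrounded - grounded),
        "shared": sorted(grounded & ungrounded),
    }


# Hypothetical sample terms, invented for this sketch.
GROUNDED = ["Custom Sportswear", "custom basketball jerseys", "2) custom  sportswear"]
UNGROUNDED = ["1. Cycling Jerseys", "2. Esports Jerseys", "3. Custom Basketball Jerseys"]

gap = association_gap(GROUNDED, UNGROUNDED)
```

Terms that appear only in the ungrounded set are candidates for the kind of misalignment the article describes: associations the model holds internally that current search results do not reinforce.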

-

Google: Hotlink Protection Carve Outs For Search Engines Are Fine

Google's John Mueller said that implementing hotlink protection with carve-outs for search engines is fine. He added that this is not something sites do much these days; it was common around the 2010s but not now. View the full article

-

Google Merchant Center Adds Bulk Request Review

Google Merchant Center has apparently added the ability to dispute and/or request a review in bulk. This does not work for all notices but it works for some and it can save you a lot of time, if the same disapproval is issued across a number of products or issues. View the full article

-

The Trump backlash offers Starmer a mainstream moment

Prospect of reaching peak populism is an opportunity View the full article

-

Microsoft Advertising Tests Updated Reports Templates

Microsoft Advertising is reportedly testing a refreshed design for its report templates. The new design is in the Microsoft Advertising console, under the reporting section and then templates. View the full article

-

Bing Ads Blue Local Business Tag & Other Labels

Microsoft is testing placing a new tag on some sponsored ads within the Bing Search results. This tag is in blue and says "Local Business," which I guess helps promote local businesses near you. View the full article

-

Starmer says he will abolish NHS England

Prime minister says move will slash bureaucracy and bring health system ‘back into democratic control’ View the full article

-

Google Ads Search Max Spotted Again

Earlier this year, we spotted a new type of Google Ads campaign option named Search Max. Now it has been spotted again, this time showing the configuration and setup of Search Max with a beta label on it. View the full article

-

Russia says it does not want a temporary ceasefire in Ukraine

Putin’s foreign policy adviser says Moscow wants long-term settlement taking its interests into account View the full article

-

The Future Of Content Distribution: Leveraging Multi-Channel Strategies For Maximum Reach via @sejournal, @rio_seo

Is your content working as hard as it should? Learn how to amplify your reach with a multi-channel strategy that delivers results. The post The Future Of Content Distribution: Leveraging Multi-Channel Strategies For Maximum Reach appeared first on Search Engine Journal. View the full article

-

How to 'Reset' Your Instagram Algorithm When Your Feed Needs a Fresh Start

As a type A perfectionist, there are few things that give me more satisfaction than a good old spring clean. Come change of seasons, I’m always overcome with the urge to clear out drawers, declutter cupboards, and rearrange shelves. Doing it is a bit of a slog, of course, but that feeling you get after a good tidy-up? Worth every second I spend cursing over piles of old clothes on my bedroom floor.

Now, wouldn’t it be neat if we could do the same for our online spaces? (You see where I’m going with this.) As it happens, you can, on Instagram at least. And, unlike spring cleaning, it won’t do a number on your back.

In November 2024, the social media platform quietly launched a way for users to hit the reset button on their Instagram algorithm, completely wiping the slate clean on their home, reels, and explore feeds. Instagram launched this feature with teens in mind (more on this below), but honestly, it’s a wildly underrated new tool for anyone looking to refresh their social media experience without starting from scratch with a new account.

Here’s everything you need to know about this handy feature. Bye-bye, algorithmic shift of a sad late-night doom-scroll. It’s time for a fresh start.

What exactly is an Instagram algorithm reset?

An Instagram algorithm reset is a feature that lets you clear your recommended content across explore, reels, and feeds to start fresh. To understand this, it’s helpful to know a little bit about how the Instagram algorithm works. In all your time on the platform, you’ve given Instagram hundreds of thousands of signals about the kinds of content you want to see more of. Likes, comments, and shares are the obvious ones, but even the more subtle, passive signals can shape your feed. Think of the time spent lingering on a shocking video you just can’t turn away from: all of those little moments can add up.

Best case scenario, you might find your feeds are no longer helpful for where you’re at in your life (for example, videos about wedding dresses that keep popping up after your wedding). In the worst case, you might find your feed peppered with videos you find triggering or distressing. So resetting your algorithm isn't just about decluttering your feed. It's about taking control of your Instagram experience. I’d argue it’s a very necessary feature as the platform shifts more and more to recommended content over content from family and friends, and incredibly helpful in protecting your mental health.

As Instagram puts it, they want to give users "new ways to shape their Instagram experience, so it can continue to reflect their passions and interests as they evolve."

Why would you want to reset your algorithm?

There are plenty of reasons you might want to hit the reset button:

- Your interests have changed (goodbye sourdough!)
- You've been getting too much content on sensitive topics, which may cause distress
- You're tired of seeing the same types of posts over and over
- You accidentally fell down a rabbit hole of content you're not actually interested in
- You want to discover new creators and fresh perspectives

The reset feature was developed as part of the platform’s evolving protections for Teen Accounts, for whom Instagram wants to help cultivate "safe, positive, age-appropriate experiences." That said, the content reset feature is available for all users who feel that their feeds no longer reflect who they are.

How to reset your Instagram algorithm in 5 steps

Ready for that fresh start? Here's how to reset your Instagram recommendations:

1. From your profile, tap the three-bar ‘More options’ icon in the top right
2. Scroll down to ‘What you see,’ and tap ‘Content preferences’
3. Tap ‘Reset suggested content’
4. Follow the on-screen instructions and tap ‘Next’
5. Tap ‘Reset suggested content’ to confirm

[Image: Screenshots showing how to reset your algorithm on Instagram]

Pro tip: Before you finalize the reset (at step 5 above), Instagram will give you the option to review the accounts you're following. If you’re not sure you want a full reset and would prefer to remove certain accounts or add different ad topics, you can do that here, too.

What happens after you reset your Instagram algorithm?

Once you hit that reset button, your recommendations will start with a clean slate. But that doesn’t mean your feeds will be empty. Instagram will give you a mix of content to explore in your explore, reels, and other recommended feeds. As you interact with content by liking, sharing, or engaging with it, Instagram will begin personalizing your recommendations again based on these new signals.

It's worth noting what the reset doesn't change:

- The accounts you follow (unless you manually unfollow them during the review process)
- The ads you see
- Your existing posts, stories, or direct messages

Here’s a helpful way to visualize what it will be like: think of it as moving to a new neighborhood while keeping all your furniture and friends.

Other ways to change your Instagram experience

If you don’t fancy a full reset, there are still plenty of tools on Instagram that will help you be more intentional about curating your feeds. Here are some other features you might want to try:

- Tap "Interested" on posts you want to see more of (tap the three dots on a post to find the option).
- Select "Not interested" for content you'd rather skip (as above, you’ll find the option by tapping the three dots on a post in your feeds). Note that these options are only available on your reels and explore feeds.
- Use Hidden Words to filter out posts with specific phrases (found in the same menu above, but under the ‘How others can interact with you’ section).
- Switch to your Following Feed to see posts chronologically (tap the Instagram logo on your home feed to switch).
- Create a Favorites list to prioritize content from accounts you love (found in the same menu above, also under the ‘What you see’ section).

Ready for a reset?

Let’s go back to my spring cleaning analogy: you know how, when you clear out your closet, you rediscover clothes you love and haven’t worn in ages, or make space for a new style you’re thinking of trying? It’s a bit like that. Sometimes, you don’t quite know what you need until you’ve taken stock of what you have.

That said, you'll need to be intentional about how you engage after the reset. Those first few days post-reset are important for training the algorithm on what you actually want to see. Be mindful about what you like, comment on, and how long you spend watching certain types of content.

Ready for that algorithm reset? Marie Kondo would be proud.

View the full article

-

Content Marketing for Small Businesses: 10 Steps to Succeed in 2025

Find out how to grow your small business organically with content marketing—from building a foundation to convert site visitors to creating effective content calendars. View the full article

-

DOGE details its cuts at HUD, VA, CFPB

The task force terminated vendor contracts at the Department of Housing and Urban Development worth a combined $305 million, according to its wall of receipts. View the full article

-

These trains could carry giant batteries across Colorado and deliver clean energy to Denver

Across the U.S., dozens of proposed solar, wind, and battery projects (encompassing thousands of gigawatts of potential power) are backlogged as they wait to be allowed to plug into the power grid. And, even in areas where renewable energy projects are already online, their output is often heavily curtailed. This clean energy bottleneck stems from the fact that, as demand for renewable energy rises, the U.S. isn’t building new transmission lines fast enough to transport large amounts of clean energy from point A to point B.

Now, there’s a company looking to address that problem with a simple yet radical solution: Putting renewable energy into giant batteries and transporting those batteries by train. SunTrain is a San Francisco-based company founded by green energy developer Christopher Smith, who now serves as the company’s president and chief technology officer. The idea, he explains, is to use the existing U.S. freight train system, which covers around 140,000 miles of terrain, to bring renewable energy that’s being curtailed by transmission bottlenecks to the areas that need it most. SunTrain is currently working on a pilot project that would run between Pueblo, Colorado, and Denver. If it’s approved by regulators, Smith says, he expects the pilot could be off the ground in just two years.

[Photo: SunTrain]

Current challenges to transporting renewables

Clean energy is the fastest-growing source of electricity in the U.S. According to a report from American Clean Power, 93% of the new energy capacity last year was solar, wind, and battery storage. The issue, Smith says, is that transmission line infrastructure lags far behind the rate of clean energy growth. “The United States needs 300,000 miles of new transmission lines, like, right now. That’s the amount that we need immediately to keep up with current demand,” Smith says.
“It’s also estimated that, to reach 100% renewables, as well as the electrification demand that we’ll have by 2050, we’ll need over a million miles of new transmission lines by 2050. Currently, we’re building less than 1,000 miles a year.” [Image: SunTrain] Building new transmission lines is challenging for a number of reasons, including environmental regulations, the time it takes, and the fact that any new lines would have to cross thousands of miles of privately owned land. In Colorado, for example, Smith says there’s a lot of renewable energy in the state’s southeast corner, which flows through the grid to Pueblo. However, because there’s not enough transmission line capacity between Pueblo and Denver, much of that power can’t ultimately be used. “Once that renewable energy gets to Pueblo, there’s not enough transmission line capacity to get it into downtown Denver,” Smith says. “So that energy basically gets curtailed—a fancy word for being wasted.” Until now, the costly construction of new transmission lines has been the main solution that’s available. But Smith says this discussion overlooks a resource that’s been used to transport energy for almost 200 years: railroads. “The freight railroad network already moves virtually every single form of energy known to man that’s used in a real way: natural gas, coal, oil, ethanol, biomass, spent nuclear waste, various fossil fuels, and the list goes on and on,” Smith says. “So there’s this huge amount of overlap of our great railroad network and our electrical systems already. There is no reason why we cannot be moving battery trains over the freight rail network like we move every other form of energy.” [Photo: SunTrain] SunTrain’s solution For its pilot project, SunTrain is partnering with Xcel Energy, Colorado’s largest electric utility. 
Xcel owns a coal plant in Pueblo (Comanche Generating Station) and a natural gas plant in Denver (Cherokee Generating Station) that are both set to be decommissioned within the next several years. Through a collaboration with SunTrain, these plants could potentially be re-powered with battery stored energy. Smith says SunTrain would use the existing substation inside Comanche Generating Station—which already has extensive railroad infrastructure from its history as a coal plant—to charge its batteries from Pueblo’s bottlenecked grid. During the day, Smith says, the energy will likely be 100% renewable. Then, the batteries would be transported to Denver and the energy offloaded at the Cherokee Generating Station onto Denver’s grid. (Charging and discharging take between four and six hours each, and the 139-mile trip from Pueblo to Denver takes about five hours by train.) “A substation can turn energy into a format that can cover long distances without losing much energy,” Smith says. “A substation can also turn electricity generated from a power plant into a format that can be used by local homes and businesses. For SunTrain’s purposes, the Pueblo substation allows us to get the electricity formatted properly for our batteries while also collectively accessing all the various renewable energy generators in the region.” [Photo: SunTrain] SunTrain’s proposed railcars will be made of 20-foot shipping containers, each of which will hold about 40 tons of batteries. The company designed proprietary charging and discharging systems that “allow the energy to flow right from where it’s generated, whether it’s a solar array or a substation, right under the batteries on the railcar,” Smith says. Then, once the train arrives at its destination, the discharging system would similarly allow the energy to flow right off the batteries. The whole process is designed so that the batteries never actually need to be removed from the train. 
[Image: SunTrain] In an interview with the podcast In the Noco, Smith said SunTrain’s first generation railcars are designed to match the freight railroad’s existing standards for coal trains, to ensure that the system itself doesn’t need to change anything in order for SunTrain to come to market. Based on those parameters, each train will be built at between 8,000 and 9,000 feet long, with the capacity to carry around two gigawatt hours of power in total. That’s enough to power a city of 100,000 for a full day. Smith says the team has already tested a proof-of-concept train on several trips amounting to more than 10,000 miles on the Union Pacific network, traveling from SunTrain’s San Francisco testbed to discharge locations across California, Nevada, and Colorado. Now, the company is waiting for Colorado’s Public Utilities Commission to approve Xcel’s expenditure of about $125 million to begin construction on the pilot project. “We tested the technology, the feasibility, made sure the mechanical standards were there,” Smith says. “Our manufacturing partners can deliver entire unit trains of these—meaning 200 rail cars of batteries that could carry about 1.75 gigawatt hours of energy. So this isn’t something that’s far away, coming in the pipeline, or needing some kind of technological breakthrough. This is an immediately executable idea. It just needs the capital.” View the full article
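For readers who want to check the pilot's figures, here is a small back-of-the-envelope sketch. It uses only numbers quoted in the article (a 139-mile trip of about five hours, four to six hours each for charging and discharging, 200 railcars carrying about 1.75 gigawatt hours, and roughly two gigawatt hours powering a city of 100,000 for a day). The function names are made up for this illustration, and the cycle time assumes a one-way delivery with no waiting or return trip, which the article does not specify.

```python
# Figures quoted in the article.
TRIP_MILES = 139
TRIP_HOURS = 5
CHARGE_HOURS_RANGE = (4, 6)      # same range applies to discharging
UNIT_TRAIN_GWH = 1.75            # energy carried by a 200-car unit train
UNIT_TRAIN_CARS = 200
CITY_TRAIN_GWH = 2.0             # "enough to power a city of 100,000 for a full day"
CITY_POPULATION = 100_000


def average_speed_mph(miles, hours):
    """Average speed implied by the trip distance and duration."""
    return miles / hours


def one_way_cycle_hours(charge_hours, trip_hours, discharge_hours):
    """Charge, haul, and discharge time for one delivery (no return trip)."""
    return charge_hours + trip_hours + discharge_hours


def energy_per_car_mwh(train_gwh, cars):
    """Energy per railcar in megawatt hours (1 GWh = 1,000 MWh)."""
    return train_gwh * 1_000 / cars


def daily_kwh_per_resident(train_gwh, population):
    """Energy per resident in kilowatt hours (1 GWh = 1,000,000 kWh)."""
    return train_gwh * 1_000_000 / population


speed = average_speed_mph(TRIP_MILES, TRIP_HOURS)
fastest_cycle = one_way_cycle_hours(CHARGE_HOURS_RANGE[0], TRIP_HOURS, CHARGE_HOURS_RANGE[0])
slowest_cycle = one_way_cycle_hours(CHARGE_HOURS_RANGE[1], TRIP_HOURS, CHARGE_HOURS_RANGE[1])
per_car = energy_per_car_mwh(UNIT_TRAIN_GWH, UNIT_TRAIN_CARS)
per_resident = daily_kwh_per_resident(CITY_TRAIN_GWH, CITY_POPULATION)

print(f"Average speed: {speed:.1f} mph")
print(f"One-way delivery cycle: {fastest_cycle} to {slowest_cycle} hours")
print(f"Energy per railcar: {per_car:.2f} MWh")
print(f"Energy per resident per day: {per_resident:.0f} kWh")
```

Under these assumptions a delivery takes 13 to 17 hours end to end, each railcar carries 8.75 MWh, and the city figure works out to 20 kWh per resident per day.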

-

Russia says its forces have retaken key Kursk town of Sudzha

Push comes as Russian and US officials gear up for meeting to discuss end to Ukraine war View the full article

-

This volunteer crew is on a mission to fix Wikipedia’s worst celebrity photos

Visit a celebrity’s Wikipedia page and there’s a good chance you’ll be greeted by a blurry, outdated, or unflattering photo. These images often look like they were snapped in passing at a public event—because, in many cases, they were. The reason? Wikipedia requires all images to be freely available for public use. Since professional photographers typically sell their work, high-quality portraits rarely make it onto the site. That’s bad news for celebrities, for whom this page is often their most-viewed online presence—and therefore the face they present to the world. Some photos are so notoriously bad, they’ve even earned a spot on a dedicated Instagram page. Enter WikiPortraits: a team of volunteer photographers on a mission to fix this injustice. Armed with their own camera gear—and often covering their own travel—these photographers attend festivals, award shows, and industry events to capture high-quality, freely licensed images of celebrities and other notable figures. They’ve brought portrait studios to major events like the Sundance Film Festival, SXSW, and Cannes, helping to refresh outdated Wikipedia photos or fill in the blanks for biographies missing images altogether. “It’s been in the back of our minds for quite a while now,” Kevin Payravi, one of WikiPortraits’ cofounders, told 404 Media in a recent interview. Last year, the team decided to turn the idea into action. They secured press credentials for Sundance 2024, sent a few photographers to the festival, and set up a portrait studio on site. It marked WikiPortraits’s first coordinated effort in the U.S. to capture high-quality, freely licensed images specifically for Wikipedia. Since launching last year, WikiPortraits has grown to over 30 photographers, collectively covering about 10 global festivals and snapping nearly 5,000 freely licensed celebrity portraits. Their photos have racked up millions of views on Wikipedia and have even been picked up by news outlets around the world. Celebrities? 
They’re often thrilled. Just ask Jeremy Strong. At a New York screening of The Apprentice, photographer Nikhil Dixit approached the Succession star about taking an updated Wikipedia photo. Strong’s publicist initially declined, Dixit told 404 Media, but the actor interrupted. “Wait, you’re from Wikipedia?” he asked. “For the love of God, please take down that photo. You’d be doing me a service.” View the full article

-

Capacity Planning Template: Download Here

Our capacity planning template will help you keep track of how much time your team has to work on your project. You'll be able to make sure deliverables are completed on time and within budget, and deliver more successful projects. The post Capacity Planning Template: Download Here appeared first on The Digital Project Manager. View the full article

-

Hungary threatens to cancel sanctions on 2,000 Russians unless EU exempts Mikhail Fridman

Travel restrictions and asset freeze orders are due to expire on Saturday View the full article

-

WordPress Backup Plugin Vulnerability Affects 5+ Million Websites via @sejournal, @martinibuster

The All-in-One WP Migration and Backup plugin has a high-severity vulnerability that puts over 5 million sites at risk. The post WordPress Backup Plugin Vulnerability Affects 5+ Million Websites appeared first on Search Engine Journal. View the full article

-

Cisco’s chief product officer explains the company’s transformation around AI

For 40 years, Cisco has been best known for building routers, switches, and other networking technology that connects computers within offices, data centers, and across the internet. Cisco’s also a software business, known for its cybersecurity products and familiar applications like the conferencing and communications platform Webex. And last year, the company announced the $28 billion acquisition of big data company Splunk, part of Cisco’s growing role in powering data-driven AI technology. Cisco also last year named Jeetu Patel as executive vice president and chief product officer, with the goal of breaking down barriers within the company as it bets big on providing hardware, software, and security infrastructure for the rapidly growing AI sector. Patel spoke to Fast Company about his role, what the AI-powered transformation looks like inside Cisco and in the industry as a whole, and why the company is well-positioned to play a key role in the future of artificial intelligence. Can you tell me a little bit about what this AI transformation looks like? This is an exciting time at Cisco. We’re a $55-billion+ company, but the goal and the hope is that we’re going to be able to operate like the world’s largest startup, meaning we’ll operate at speed with scale. Every once in a while, you have this kind of massive shift in the market that creates an unpredictable opportunity none of us would have thought about even 10 years ago. It feels like the foundation we’ve been laying for 40 years is now going to come to life. I frankly feel like there are going to be two types of companies in the world: companies that are really dexterous with the use of AI and companies that really struggle for relevance, and it’ll be the largest shift we’ve seen from a platform perspective in our lifetimes. We’ve done a lot of studies with customers, and 97% of them are really excited about the possibilities of AI, but only 1.7% feel prepared that they know how to tackle it. 
So there’s a big gap between the possibilities and what needs to happen. And when you ask them a follow-on question, what holds you back, they answer three things. The first is, they’re not sure they have the necessary infrastructure or technical know-how to construct that infrastructure. The second is they feel like there are 1,000 ideas within their company, but adoption slows down because safety and security become big concerns. The third area is that they just don’t feel they’ve got the right level of training and skills internally. In each of those three areas, Cisco can be meaningfully accretive to their mission, and it’s an amazing opportunity for Cisco to shape this entire next wave. What will Cisco’s role be in that transition? We will provide infrastructure for AI. You’re going to have many AI agents talking to each other across the data center, as well as physical AI, with robotics and humanoids. A world of 8 billion people will feel like a world of 80 billion from a throughput perspective, because of the digital workers that will be added to the mix. And I think that order of magnitude differential might be a gross understatement. That means there’s going to be much more demand for high-performance, low-latency, power-efficient networking so one agent can talk to another and coordinate and come back with an answer. You’ll see much more demand for high scale infrastructure, not just around GPUs but around [data processing units (DPUs)] and different types of compute paradigms. Five key areas will be networking, security, safety, data, and models, and Cisco will participate in each of them and we will partner with others. We just announced a partnership with Nvidia. We just made an investment in Anthropic. We are investors in Scale.AI and we’re investors in Groq. All of these innovative things that kind of refactor the world will start happening, and Cisco will be at the center of it all. 
What puts Cisco in a position to lead in all of these different areas? Cisco historically has been very oriented on different technologies that operated well in their own siloes. But the true opportunity is not in acting like a holding company but as an integrated platform, tightly integrated but loosely coupled. The first characteristic of a platform is to lower the marginal cost for existing customers for every new technology from Cisco. It’s good for customers and good for us. But the second thing is to compound the value of things you might already have. For instance, networking is great, but networking without security is just selling pipes. When you take security and bake it into the fabric of the network, you can deliver a trusted network and trusted communications. Cisco can be a secure networking company that’s AI first and completely change the value proposition for the market. Our goal is to make sure to tie things together, build amazing products people love and talk to friends and family about, operate in an open ecosystem, and make sure we’re at least 10 times better than others in the market. Nobody switches to you for 10% better, but when it’s 10 times better, it’s irresponsible for a customer to not consider switching. We want to make it irresponsible for the customer to not consider Cisco. What does being AI first mean in the security context? To handle attacks that are happening at an extremely sophisticated level, you have to have defenses not at human scale but at machine scale. So you have to natively build AI capabilities into your product, not as an afterthought that’s bolted on. But it’s not just about using AI in your security stack. It’s about securing AI itself. The characteristic of AI that’s scary to companies is that models are, by definition, non-deterministic, which means they’re by definition unpredictable. If I ask the same question twice, I might get two slightly different answers. But enterprises bank on predictability. 
You need to have a common way that safety and security can be addressed regardless of which model you’re using, which application you’re using, and how many agents you have. The huge opportunity is, can we make AI safe and secure so that when it does hallucinate, we’ve got guardrails for it, and when it has toxicity and harmful content, we have guardrails for it. And when something like DeepSeek comes out, we can figure out how to jailbreak it through an algorithmic red teaming exercise, and figure out guardrails organizations can put around it so if they do end up using it, they’ll know it doesn’t behave in an unpredictable way. That’s where a company like Cisco comes in, as that common, neutral-party security substrate across every model, every application, every cloud, every agent. A product that just went into general availability is AI Defense, an AI safety and security product. If you believe in a world that’s going to be multi-model and multi-agent, we would be the common security layer that could provide visibility into every company about what data is being used and what applications of AI are being used by developers and users. We can also provide validation of AI models—are they working the way we intend them to work? What would have taken seven to 10 weeks to validate a model can now be done within a matter of seconds or minutes, because we have the algorithmic process to do it. For example, in the first 48 hours that DeepSeek came out, we were able to jailbreak that model on 50 different prompts that HarmBench had. But then we were able to say, given that, we can now give you guardrails on what you do. How are the investments and partnerships you mentioned important to your work in AI? One of the key principles we talk about is that you have to operate with the broader ecosystem. You cannot be a walled garden in this day and age. 
With our partnership with Nvidia, Nvidia now includes Cisco in its reference architecture, and where an enterprise has Nvidia and Cisco, they want the two of them to work together. As companies we’re committed to making that happen, and we’ll work with Nvidia and ensure our switches work well with Nvidia SmartNICs, and their GPU clusters will have Cisco networking to connect clusters with low latency and high performance and power efficiency.

How have you worked to break down silos internally?

I can give you an example. I was just with the Splunk team. That’s a very large acquisition, about $28 billion. People on the all-hands call asked what we think about preserving the Splunk culture. Culture is basically an agreed-upon set of norms of how we’re going to work together. I think Splunk culture is amazing, and not only should we preserve the Splunk culture, we should actually take elements of it and make it part of the Cisco culture, so Cisco can learn from what the Splunkers have learned. But I also feel that Splunk has a lot to learn from Cisco, so we should integrate elements of Cisco culture into Splunk culture. And if both Cisco and Splunk don’t do certain things well, we might learn from the market. If another company’s doing it well, we should evolve with the market. Just staying stagnant is actually our biggest enemy. We need to constantly be curious, keep learning ferociously, and also remember to keep unlearning certain patterns. When I explained this to the team, what the possibilities were, it took very little time for them to get on board, and before you knew it, the tempo of the organization changed. We have amazing people in the company, and we have to make sure we can unlock their creativity while still using Cisco’s size and scale. The good news is, I’ve done this a few times. When I came in I was running the Webex business, and it’s now a beautifully built product with AI infused into its fabric.
It does really well with the team that built Webex in the past but had struggled because the clarity was not there from leadership. We’ve got an amazing broad portfolio that, if we harmoniously weave together, magic starts to happen. You’re Cisco’s first chief product officer. What’s your vision for that role? We want to be an AI-first, product-first company. We have to build amazing products that people love, that they talk to their friends and family about. There’s no marketing engine that’s as good as word of mouth. We want to make sure we really sweat the details and build amazing products that people love. We want to make sure that we stay slightly dissatisfied, slightly paranoid and superbly humble, keeping our heads down, just obsessed about the customer and how we’re going to get better and better. The reason this role is consequential is the decision-making velocity. You don’t have to go out and get in a room with a committee to make a decision. There’s very clearly one team, and the goal is to make sure we build a platform that accretes value on top of each other. What would have taken us three years to get done, we were able to do within three months. There was that much of a difference in decision-making velocity. We have a key set of values and guiding principles that we make sure everyone’s aligned on. The people here believe in the power of AI and what it’s going to be able to do. It’s less about me and more about taking the friction out of the organization to move at speed and move with scale, with a combination of systems thinking and a level of grit, being able to be scrappy. If you can be scrappy with systems thinking, you get the best of both worlds. Operate like the world’s largest startup, and you can do things no startup can do, and things no large company can do. View the full article

-

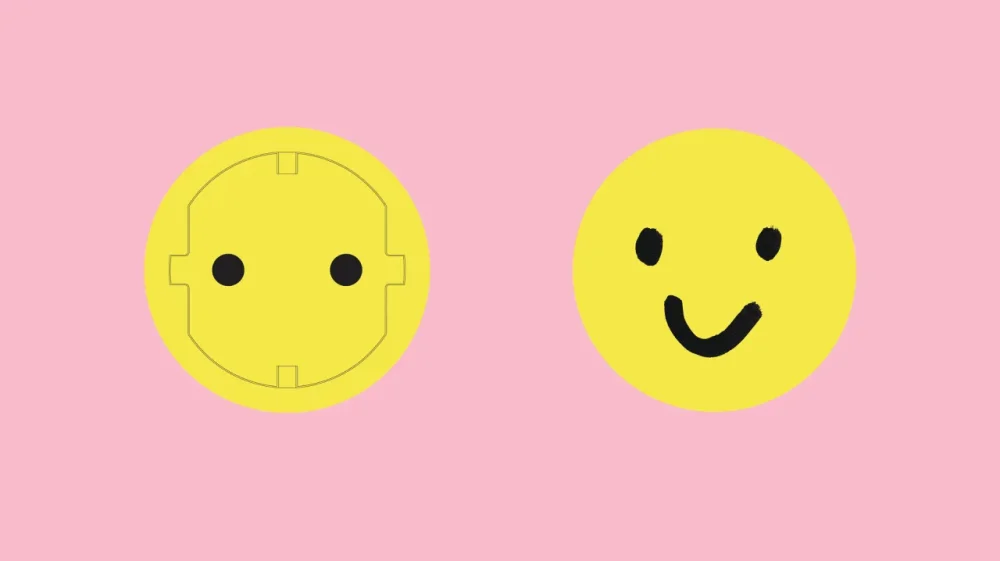

This charming new electrical outlet looks like a smiley face

When you think of an electrical outlet, the first thing that likely comes to mind is a simple, rectangular device mounted on the wall: purely functional, often hidden from sight. Architect and designer India Mahdavi has different ideas, though. Working with the high-end electrical brand 22 System, Mahdavi reimagined the outlet as a cheerful pop of color that’s reminiscent of a smiley face.

[Photo: Thierry Depagne/22 System]

Omer Arbel, co-founder of 22 System and design brand Bocci, asked Mahdavi to bring an unexpected element of joy to this everyday utility by creating a distinct colorway for the existing outlet face, transforming it from a discreet necessity into a bold design statement. Her vision led to the Smiley, an outlet with a fluorescent yellow hue that infuses playfulness into an otherwise technical product.

Mahdavi says she wanted something that could stand out even in the dark. Instead of concealing the outlets, as people typically do, she aimed to celebrate them, making them an intentional part of the space. Mahdavi not only provided input on the product’s color but also crafted a narrative around it, one that challenges traditional notions of how electrical outlets fit into homes.

[Photo: Celia Spenard-Ko/courtesy 22 System]

“How could we perceive it differently, perceive it in a more homey, familiar, and maybe more feminine way? Instead of having these technical, sophisticated elements, how can you make them your own? How can you use them in your own home?” Mahdavi says. To show off the outlet, she transformed her classic Oliver armchair into a multi-socket appliance adorned with vibrant yellow outlets.

[Photo: Thierry Depagne/22 System]

Mahdavi believes the pandemic heightened our awareness of the unseen, prompting deeper consideration of the objects around us. People started asking more questions like: How was it made? Who designed it? How is it presented? What is the story behind it? This shift in perspective encouraged Mahdavi to reimagine an often-overlooked necessity as an object of intrigue and design.

“It was about how do you turn something that is not supposed to be seen into something that is really seen,” says Mahdavi.