Everything posted by ResidentialBusiness

-

Google Emails Reminder About Business Profile Policies

A couple of days ago, Google sent out an email to owners of Google Business Profile listings. The email was a reminder about the Google Business Profile policies, and it highlighted policies around reviews. View the full article

-

The 3 biggest arguments against DEI, debunked

Recently, I overheard a conversation at a local coffee shop: “Thank god for the new administration and finally taking a stand against DEI,” said one of the men to another, as they sipped their coffee. “It’s ridiculous and unfair, completely ruining work. We can finally get back to business.” I leaned in a bit further to try and listen in as I paid for my Earl Grey tea. “Well . . . I’m not sure that’s entirely true,” the other man said, hesitating. “I think that . . . ” “Finally, we can get back to raising standards,” the first man interrupted. “It’s about time! By the way, are you going to the game next week?” The second man looked uncomfortable as the conversation swiftly shifted in a completely different direction.

While I was done paying, and also done eavesdropping, I left knowing that what I heard in this local coffee shop was not an isolated conversation. The backlash against diversity, equity, and inclusion is playing out on the national and world stage almost every single day. And the backlash is also taking place on much smaller stages, in conversations in our conference rooms and in our hallways, amongst colleagues loudly and in whispers in our workplaces. And in these conversations, there’s an opportunity to talk and educate each other about what diversity, equity, and inclusion is and what it is not. Here are three of the most common statements I am hearing from individuals making the case against diversity, equity, and inclusion, and here’s how we can debunk these statements and continue to help educate each other on what is true and what is not.

False argument against DEI: We lower our standards when it comes to talent

Diversity, equity, and inclusion is not about lowering our standards; diversity, equity, and inclusion is about setting fair and equitable standards on how we evaluate all talent.
The term “DEI hire” is being used to make us believe that we have lowered standards by hiring individuals from different backgrounds and different lived experiences. In reality, “DEI hire” is a harmful and hurtful phrase that leads many to believe that someone was handed a job simply because they may “look different” or “be different” or are a “quota hire.” And it is increasingly becoming an acceptable way to discredit, demoralize, and disrespect leaders of color. One of the key outcomes of diversity, equity, and inclusion is creating standardized processes on how we hire talent, and also on who we choose to develop and promote. This includes using software tools like Greenhouse, which helps you ensure that every candidate for a role meets with the same set of interviewers, that interview questions are aligned in advance, and that there’s a way to evaluate and score the interviews and debrief together as an interview team. Otherwise, we fall prey to our biases and may hire people who look like us, think like us, and act like us, or simply hire them because we really just like them. When it comes to how we develop and promote talent, software tools like Lattice help us ensure we set clear and reasonable goals for all employees, not just some. We can then track progress in weekly meetings, we can give and receive coaching and feedback, and we can have a consistent framework when we evaluate talent during performance review time. And how we evaluate talent is also then connected to how we compensate individuals, and ultimately who we choose to promote. Without these standardized processes, we may end up giving better performance reviews and more money to those who are the most vocal, who spend the most time managing up to us, and who we just find ourselves having more in common with. Diversity, equity, and inclusion efforts help us raise standards and make sure we are getting the best out of our talent.

False argument against DEI: It distracts us from driving revenue

Diversity, equity, and inclusion does not distract us from leading our businesses; in fact, diversity, equity, and inclusion is a driver of the business. It’s not a separate initiative that sits apart from the business; it should be integrated into everything we do in our workplaces. These efforts not only help us get the best out of our talent but also ensure we are able to best serve our customers. According to Procter & Gamble, the buying power of the multicultural consumer is more than $5 trillion. Procter & Gamble reminds us that it’s no longer multicultural marketing; it’s in fact mainstream marketing. There is growth to be had when we ensure we connect and authentically serve not just the multicultural consumer, but also veterans, individuals with disabilities, the LGBTQ+ community, and many more communities. Understanding their consumer needs and how your business’s products and services can surprise and delight them, and enhance the quality of their lives, is an untapped competitive advantage. Companies like E.L.F. understand this, with a strong focus on diversity, equity, and inclusion efforts that have paid off: It has posted 23 consecutive quarters of sales growth. Over the past five years, the company has also seen its stock increase by more than 700%. In contrast, since Target announced a rollback of its diversity, equity, and inclusion efforts, it has experienced a decline in sales. Black church leaders are now calling on their congregations to participate in a 40-day boycott of Target. Black consumers have $2 trillion in buying power, setting digital trends and engagement. “We’ve got to tell corporate America that there’s a consequence for turning their back on diversity,” Bishop Reginald T. Jackson told USA Today.
“So let us send the message that if corporate America can’t stand with us, we’re not going to stand with corporate America.”

False argument against DEI: An inclusive work environment only benefits a few

Diversity, equity, and inclusion is not about creating an inclusive environment for a select few. Diversity, equity, and inclusion is about creating workplaces where we all have an opportunity to reach our potential and help our companies reach their potential. In my book, Reimagine Inclusion: Debunking 13 Myths to Transform Your Workplace, I tackle the myth that diversity, equity, and inclusion processes and policies only have a positive effect on a certain group of individuals. I share “The Curb-Cut Effect,” which is a prime example of this. In 1972, faced with pressure from activists advocating for individuals with disabilities, the city of Berkeley, California, installed its first official “curb cut” at an intersection on Telegraph Avenue. In the words of a Berkeley advocate, it was “the slab of concrete heard ‘round the world.” This not only helped people in wheelchairs. It also helped parents pushing strollers, elderly people with walkers, travelers wheeling luggage, workers pushing heavy carts, skateboarders, and runners. People went out of their way, and continue to do so, to use a curb cut. “The Curb-Cut Effect” shows us that one action targeted to help a community ended up helping many more people than anticipated.

So, in our workplaces, policies like flexible work hours and remote work options, parental leave and caregiver assistance, time off for holidays and observances, adaptive technologies, mental health support, accommodations for individuals with disabilities, and more have a ripple effect and create workplaces where everyone has an opportunity to thrive. Don’t fall for the rhetoric against “DEI” being exclusive, unfair, or a distraction.
The goal of diversity, equity, and inclusion efforts has always been to level the playing field and ensure we are creating workplaces where each and every one of us has an opportunity to succeed. View the full article

-

Google iOS App Drops Gemini Toggle

A year or more ago, Google added a toggle switch to use Gemini directly in the iOS Google Search app. That toggle was removed this week, so you now need to go to the native Gemini app to use Gemini; you can no longer use it in the Google app. View the full article

-

Political scientist Bjorn Lomborg: ‘You can’t spend on everything’

The ‘sceptical environmentalist’ used cost-benefit analysis to argue against emissions cuts. Now he has turned his attention to overseas aid. View the full article

-

Development minister resigns in protest at Starmer’s aid cuts

Move by Anneliese Dodds comes after prime minister announces plan to shift resources to defence View the full article

-

Bing Tests Outlining Search Result Snippets

Microsoft is testing an outline around the individual search result snippets within Bing Search. Each snippet has an outline around it, and when you hover your cursor over it, the snippet is highlighted further. View the full article

-

301 vs. 302 Redirect: Which to Choose for SEO and UX

A 301 redirect should be used for permanent redirects, while a 302 should be used for temporary redirects. View the full article
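As a rough illustration of that rule, the choice between the two status codes can be reduced to a single flag. This is a minimal sketch, not taken from the linked article: the function names `redirect_status` and `redirect_response` and the example URLs are hypothetical, and only the standard-library `http.HTTPStatus` enum is assumed.

```python
from http import HTTPStatus


def redirect_status(permanent: bool) -> HTTPStatus:
    """Pick the redirect status: 301 for permanent moves, 302 for temporary ones."""
    return HTTPStatus.MOVED_PERMANENTLY if permanent else HTTPStatus.FOUND


def redirect_response(target_url: str, permanent: bool) -> tuple[int, dict[str, str]]:
    """Build a (status code, headers) pair for a redirect (hypothetical helper)."""
    status = redirect_status(permanent)
    # The Location header tells the client where the resource now lives.
    return int(status), {"Location": target_url}


if __name__ == "__main__":
    # A page that has moved for good versus a short-lived campaign page.
    print(redirect_response("https://example.com/new-page", permanent=True))
    print(redirect_response("https://example.com/sale", permanent=False))
```

Keeping the decision in one place makes it harder to ship a temporary redirect for a move that is actually permanent, or the reverse.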

-

Google Shopping Adds Search Google Button For Some Queries

If you go to Google Shopping and enter a query that is not shopping related, Google will add a button above the shopping results asking you to search Google for that query. View the full article

-

UK house prices rise more than expected in February

Average cost of £270,493 is 3.9% higher year on year as buyers try to get ahead of stamp duty changes in April View the full article

-

Hollywood’s obsession with AI-enabled ‘perfection’ is making movies less human

The notion of authenticity in the movies has moved a step beyond the merely realistic. More and more, expensive and time-consuming fixes to minor issues of screen realism have become the work of statistical data renderings—the visual or aural products of generative artificial intelligence. Deployed for effects that actors used to have to create themselves, with their own faces, bodies, and voices, filmmakers now deem these fixes necessary because they are more authentic than what actors can do with just their imaginations, wardrobe, makeup, and lighting. The paradox is that in this scenario, “authentic” means inhuman: The further from actual humanity these efforts have moved, the more we see them described by filmmakers as “perfect.” Is perfect the enemy of good? It doesn’t seem to matter to many filmmakers working today. These fixes are designed to be imperceptible to humans, anyway. Director Brady Corbet’s obsession with “perfect” Hungarian accents in his Oscar-nominated architecture epic, The Brutalist, is a case in point. Corbet hired the Ukraine-based software company Respeecher to enhance accents by using AI to smooth out vowel sounds when actors Adrien Brody and Felicity Jones (American and British, respectively) speak Hungarian in the film. Corbet said it was necessary to do that because, as he told the Los Angeles Times, “this was the only way for us to achieve something completely authentic.” Authenticity here meant integrating the voice of the film’s editor, Dávid Jánsco, who accurately articulated the correct vowel sounds. Jánsco’s pronunciation was then combined with the audio track featuring Brody and Jones, merging them into a purportedly flawless rendition of Hungarian that would, in Corbet’s words in an interview with GQ, “honor the nation of Hungary by making all of their off-screen Hungarian dialogue absolutely perfect.” The issue of accents in movies has come to the fore in recent years. 
Adam Driver and Shailene Woodley were, for instance, criticized for their uncertain Italian accents in 2023’s Ferrari. Corbet evidently wanted to make sure that would not happen if any native Hungarian speakers were watching The Brutalist (few others would notice the difference). At times, Brody and Jones speak in Hungarian in the film, but mostly they speak in Hungarian-accented English. According to Corbet, Respeecher was not used for that dialogue. Let’s say that for Corbet this will to perfection, with the time and expense it entailed, was necessary to his process, and that having the voice-overs in translated Hungarian-accented English might have been insultingly inauthentic to the people of Hungary, making it essential that the movie sound, at all times, 100% correct when Hungarian was spoken. Still, whether the Hungarian we hear in The Brutalist is “absolutely perfect” is not the same as it being “completely authentic,” since it was never uttered as we hear it by any human being. And, as it turns out, it was partially created in reaction to something that doesn’t exist. In his interview with the Los Angeles Times, Corbet said that he “would never have done it any other way,” recounting when he and his daughter “were watching North by Northwest and there’s a sequence at the U.N., and my daughter is half-Norwegian, and two characters are speaking to each other in [air quotes] Norwegian. My daughter said: ‘They’re speaking gibberish.’ And I think that’s how we used to paint people brown, right? And, I think that for me, that’s a lot more offensive than using innovative technology and really brilliant engineers to help us make something perfect.” But there is no scene in Alfred Hitchcock’s 1959 film North by Northwest set at the United Nations or anywhere else in which two characters speak fake Norwegian or any other faked language. 
Furthermore, when Corbet brings in the racist practice of brownface makeup that marred movies like 1961’s West Side Story, he is doing a further disservice to Hitchcock’s film. The U.N. scene in North by Northwest features Cary Grant speaking with a South Asian receptionist played by Doris Singh, not an Anglo in brownface. Corbet’s use of AI, then, is based on something that AI itself is prone to, and criticized for: a “hallucination” in which previously stored data is incorrectly combined to fabricate details and generate false information that tends toward gibberish. While the beginning of Hitchcock’s Torn Curtain (1966) is set on a ship in a Norwegian fjord and briefly shows two ship’s officers conversing in a faked, partial Norwegian, Corbet’s justification was based on a false memory. His argument against inauthenticity is inauthentic itself. AI was used last year in other films besides The Brutalist. Respeecher also “corrected” the pitch of trans actress Karla Sofía Gascón’s singing voice in Emilia Pérez. It was used for blue eye color in Dune: Part Two. It was used to blend the face of Anya Taylor-Joy with the actress playing a younger version of her, Alyla Browne, in Furiosa: A Mad Max Saga. Robert Zemeckis’s Here, with Tom Hanks and Robin Wright playing a married couple over a many-decade span, deployed a complicated “youth mirror system” that used AI in the extensive de-agings of the two stars. Alien: Romulus brought the late actor Ian Holm back to on-screen life, reviving him from the original 1979 Alien in a move derided not only as ethically dubious but, in its execution, cheesy and inadequate. It is when AI is used in documentaries to re-create the speech of people who have died that is especially susceptible to accusations of both cheesiness and moral irresponsibility. 
The 2021 documentary Roadrunner: A Film About Anthony Bourdain used an AI version of the late chef and author’s voice for certain lines spoken in the film, which “provoked a striking degree of anger and unease among Bourdain’s fans,” according to The New Yorker. These fans called resurrecting Bourdain that way “ghoulish” and “awful.” Dune: Part Two [Photo: Warner Bros. Pictures] Audience reactions like these, though frequent, do little to dissuade filmmakers from using complicated AI technology where it isn’t needed. In last year’s documentary Endurance, about explorer Ernest Shackleton’s ill-fated expedition to the South Pole from 1914 to 1916, filmmakers used Respeecher to exhume Shackleton from the only known recording of his voice, a noise-ridden four-minute Edison wax cylinder on which the explorer is yelling into a megaphone. Respeecher extracted from this something “authentic” which is said to have duplicated Shackleton’s voice for use in the documentary. This ghostly, not to say creepy, version of Shackleton became a selling point for the film, and answered the question, “What might Ernest Shackleton have sounded like if he were not shouting into a cone and recorded on wax that has deteriorated over a period of 110 years?” Surely an actor could have done as well as Respeecher with that question. Similarly, a new three-part Netflix documentary series, American Murder: Gabby Petito, has elicited discomfort from viewers for using an AI-generated voice-over of Petito as its narration. The 22-year-old was murdered by her fiancé in 2021, and X users have called exploiting a homicide victim this way “unsettling,” “deeply uncomfortable,” and perhaps just as accurately, “wholly unnecessary.” The dead have no say in how their actual voices are used. It is hard to see resurrecting Petito that way as anything but a macabre selling point—carnival exploitation for the streaming era. 
Beside the reanimation of Petito and the creation of other spectral voices from beyond the grave, there is a core belief that the proponents of AI enact but never state, one particularly apropos in a boomer gerontocracy in which the aged refuse to relinquish power. That belief is that older is actually younger. When an actor has to be de-aged for a role, such as Harrison Ford in 2023’s Indiana Jones and the Dial of Destiny, AI is enlisted to scan all of Ford’s old films to make him young in the present, dialing back time to overwrite reality with an image of the past. Making a present-day version of someone young involves resuscitating a record of a younger version of them, like in The Substance but without a syringe filled with yellow serum. When it comes to voices, therefore, it is not just the dead who need to be revived. Ford’s Star Wars compatriot Mark Hamill had a similar process done, but only to his voice. For an episode of The Mandalorian, Hamill’s voice had to be resynthesized by Respeecher to sound like it did in 1977. Respeecher did the same with British singer Robbie Williams for his recent biopic, Better Man, using versions of Williams’s songs from his heyday and combining his voice with that of another singer to make him sound like he did in the 1990s. Here [Photo: Sony Pictures] While Zemeckis was shooting Here, the “youth mirror system” he and his AI team devised consisted of two monitors that showed scenes as they were shot, one the real footage of the actors un-aged, as they appear in real life, and the other using AI to show the actors to themselves at the age they were supposed to be playing. Zemeckis told The New York Times that this was “crucial.” Tom Hanks, the director explained, could see this and say to himself, “I’ve got to make sure I’m moving like I was when I was 17 years old.” “No one had to imagine it,” Zemeckis said. 
“They got the chance to see it in real time.” No one had to imagine it is not a phrase heretofore associated with actors or the direction of actors. Nicolas Cage is a good counter example to this kind of work, which as we see goes far beyond perfecting Hungarian accents. Throughout 2024, Cage spoke against AI every chance he got. At an acceptance speech at the recent Saturn Awards, he mentioned that he is “a big believer in not letting robots dream for us. Robots cannot reflect the human condition for us. That is a dead end. If an actor lets one AI robot manipulate his or her performance even a little bit, an inch will eventually become a mile and all integrity, purity, and truth of art will be replaced by financial interests only.” In a speech to young actors last year, Cage said, “The studios want this so that they can change your face after you’ve already shot it. They can change your face, they can change your voice, they can change your line deliveries, they can change your body language, they can change your performance.” And he said in a New Yorker interview last year, speaking about the way the studios are using AI, “What are you going to do with my body and my face when I’m dead? I don’t want you to do anything with it!” All this from a man who swapped faces with John Travolta in 1997’s Face/Off with no AI required—and “face replacement” is now one of the main things AI is used for. In an interview with Yahoo Entertainment, Cage shared an anecdote about his recent cameo appearance as a version of Superman in the much-reviled 2023 superhero movie The Flash. “What I was supposed to do was literally just be standing in an alternate dimension, if you will, and witnessing the destruction of the universe. . . . And you can imagine with that short amount of time that I had, what that would mean in terms of what I could convey—I had no dialogue—what I could convey with my eyes, the emotion. . . . When I went to the picture, it was me fighting a giant spider. . . . 
They de-aged me and I’m fighting a spider.” Now that’s authenticity. View the full article

-

Microsoft criticises CMA over ‘fundamental mistake’ in UK cloud probe

Tech group says antitrust regulator has ignored how AI is reshaping the tech industry View the full article

-

17 Proven SaaS Marketing Strategies From 11 CMOs & Founders

From Crypto.com to Airwallex, Paddle, and Surfer, these battle-tested methods have fueled growth in competitive markets with limited resources. In this article, we share their best insights so you can apply them to your own SaaS business. Let’s dive in! View the full article

-

Why Costco is targeting the rich, with deals on Rolexes, 10-carat diamonds, and gold bars

Branded is a weekly column devoted to the intersection of marketing, business, design, and culture. Costco chair Hamilton “Tony” James caused a bit of a stir this week when, in an interview, he mentioned a retail category that’s done surprisingly well for the big-box chain: luxury goods. “Rolex watches, Dom Pérignon, 10-carat diamonds,” James offered as examples of high-end products and brands that have fit into a discount-club model more typically associated with buying staples in bulk. “Affluent people,” he explained, “love a good deal.” Courting that group may be particularly timely right now—and not just for Costco. According to a recent report from research firm Moody’s Analytics, the top 10% of U.S. earners (with household incomes of $250,000 and up) now account for almost 50% of all spending; 30 years ago, they accounted for 36%. Moody’s calculates that spending by this top group contributes about one-third to U.S. gross domestic product. While that reflects a serious squeeze further down the income ladder, where the price of eggs and other basics remain high, it also suggests that the comparatively well-off not only have money to spend, but they’re spending it. As of September, the affluent had increased their spending 12% over the prior year, while working-class and middle-class households had spent less. That’s having a clear effect on such sectors as travel, where the affluent have always been part of the mix but are now even more important. Delta recently reported that premium ticket sales are up 8%, much more than main-cabin sales. Bank of America found the most affluent 5% of its customers spent over 10% more on luxury goods than a year ago. “They’re going to Paris and loading up their suitcases with luxury bags and shoes and clothes,” BofA Institute senior economist David Tinsley told the Wall Street Journal. For discounters and dollar stores targeting lower-income consumers, this has meant more competition for fewer dollars spent. 
(Dollar stores have struggled, and the discount chain Big Lots has filed for bankruptcy.) Addressing that challenge by courting the wealthy isn’t an easy move, but Costco isn’t alone in trying. Walmart CFO John David Rainey told Fox Business that the retail giant has expanded its selection of high-end Apple products, Bose headphones, and other items “sought after by more-affluent customers,” as part of a stab at “upleveling” the Walmart brand. Of course, it may not be easy for most bargain-oriented brands to swiftly pivot, but Costco does seem to have more of a track record of pushing a more upscale-friendly element to its image. James noted that Costco has long counted affluent shoppers among its members—36% of them have incomes of $125,000 and higher, according to consumer-data firm Numerator. A Coresight analysis from a couple years ago found that Costco customers have higher average incomes than those who shop at rival Sam’s Club, and that’s reflected in its brand and product variety. “Costco’s reputation for serving that somewhat higher-income demographic makes the chain more attractive to brands that target those shoppers as well,” Morningstar analyst Zain Akbari told CNBC. And of course it doesn’t hurt that the chain has famously been selling gold and platinum bars. (It’s also worth noting that Costco’s business is doing well in general, not just with high-end customers—and its reputation seems to have benefited from its reaffirmed commitment to DEI as a sound business practice.) “We’ve always known we could move anything in volume if the quality was good and the price was great,” Costco’s James said, and that includes higher-end items that might seem like a stretch for a price-focused warehouse chain. In fact, he argued, it’s a natural part of the Costco brand: Both the company and its fans like to talk about the “treasure hunt”-feel of finding something unexpected that’s not necessarily cheap, but a bargain. 
“We’re not interested in selling just anything at a low price,” James added. “If someone wants to buy a $500 TV for $250 at Costco, we want to sell them a $1,000 TV for $500 instead. We’re always trying to find better items to sell to members, giving them a great deal. We’re by no means a dollar store.” It (almost) goes without saying that from a macro perspective, the massive wealth and spending-power imbalance underlying Moody’s analysis points to potential problems that shifts in retail strategy won’t solve: Consumer debt in delinquency is rising, and the whole economy is vulnerable if splurging by the affluent were to plummet. So while retailers will likely continue to chase after wealthier customers, the majority of consumers are left to “treasure hunt” for reasonably priced eggs. View the full article

-

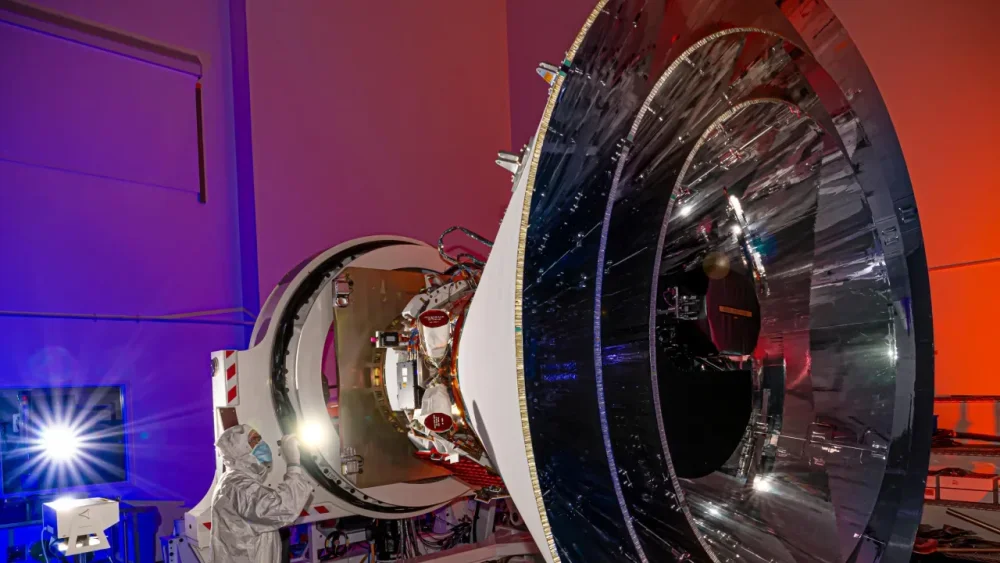

NASA’s SPHEREx Space Telescope will create the world’s most complete sky survey

The sky is about to get a lot clearer. NASA’s latest infrared space telescope, SPHEREx—short for Spectro-Photometer for the History of the Universe, Epoch of Reionization, and Ices Explorer—will assemble the world’s most complete sky survey to better explain how the universe evolved. The $488 million mission will observe far-off galaxies and gather data on more than 550 million galaxies and stars, measure the collective glow of the universe, and search for water and organic molecules in the interstellar gas and dust clouds where stars and new planets form. The 1107-lb., 8.5 x 10.5-foot spacecraft is slated to launch March 2 at 10:09 pm (ET) aboard a SpaceX Falcon 9 rocket from Vandenberg Space Force Base in California. (Catch the launch on NASA+ and other platforms.) From low-Earth orbit, it will produce 102 maps in 102 infrared wavelengths every six months over two years, creating a 3D map of the entire night sky that glimpses back in time at various points in the universe’s history to fractions of a second after the Big Bang nearly 14 billion years ago. Onboard spectroscopy instruments will help determine the distances between objects and their chemical compositions, including water and other key ingredients for life. SPHEREx Prepared for Thermal Vacuum Testing [Photo: NASA/JPL-Caltech/BAE Systems] Mapping how matter dispersed over time will help scientists better understand the physics of inflation—the instantaneous expansion of the universe after the Big Bang and the reigning theory that best accounts for the universe’s uniform, weblike structure and flat geometry. Scientists hypothesize the universe exploded in a split-second, from smaller than an atom to many trillions of times in size, producing ripples in the temperature and density of the expanding matter to form the first galaxies. “SPHEREx is trying to get at the origins of the universe—what happened in those very few first instances after the Big Bang,” says SPHEREx instrument scientist Phil Korngut. 
“If we can produce a map of what the universe looks like today and understand that structure, we can tie it back to those original moments just after the Big Bang.”

[Photo: BAE Systems/Benjamin Fry]

SPHEREx’s approach to observing the history and evolution of galaxies differs from space observatories that pinpoint objects. To account for galaxies existing beyond the detection threshold, it will study a signal called the extragalactic background light. Instead of identifying individual objects, SPHEREx will measure the total integrated light emission that comes from going back through cosmic time by overlaying maps of all of its scans. If the findings highlight areas of interest, scientists can turn to the Hubble and James Webb space telescopes to zoom in for more precise observations.

To prevent spacecraft heat from obscuring the faint light from cosmic sources, its telescope and instruments must operate in extreme cold, nearing minus 380 degrees Fahrenheit. To achieve this, SPHEREx relies on a passive cooling system, meaning no electricity or coolants, that uses three cone-shaped photon shields and a mirrored structure beneath them to block the heat of Earth and the Sun and direct it into space.

Searching for life

In scouting for water and ice, the observatory will focus on collections of gas and dust called molecular clouds. Every molecule absorbs light at different wavelengths, like a spectral fingerprint. Measuring how much the light changes across the wavelengths indicates the amount of each molecule present. “It’s likely the water in Earth’s oceans originated in a molecular cloud,” says SPHEREx science data center lead Rachel Akeson. “While other space telescopes have found reservoirs of water in hundreds of locations, SPHEREx will give us more than nine million targets.
Knowing the water content around the galaxy is a clue to how many locations could potentially host life.” More philosophically, finding those ingredients for life “connects the questions of ‘how did the universe evolve?’ and ‘how did we get here?’ to ‘where can life exist?’ and ‘are we alone in that universe?’” says Shawn Domagal-Goldman, acting director of NASA’s Astrophysics Division.

Solar wind study

The SpaceX rocket will also carry another two-year mission, the Polarimeter to Unify the Corona and Heliosphere (PUNCH), to study the solar wind and how it affects Earth. Its four small satellites will focus on the sun’s outer atmosphere, the corona, and how it moves through the solar system and bombards Earth’s magnetic field, creating beautiful auroras but endangering satellites and spacecraft. The mission’s four suitcase-size satellites will use polarizing filters to piece together a 3D view of the corona and capture data that helps determine the solar wind speed and direction. “That helps us better understand and predict the space weather that affects us on Earth,” says PUNCH mission scientist Nicholeen Viall. “This ‘thing’ that we’ve thought of as being big, empty space between the sun and the Earth, now we’re gonna understand exactly what’s within it.”

PUNCH will combine its data with observations from other NASA solar missions, including Coronal Diagnostic Experiment (CODEX), which views the inner corona from the International Space Station; Electrojet Zeeman Imaging Explorer (EZIE), which launches in March to investigate the relationship between magnetic field fluctuations and auroras; and Interstellar Mapping and Acceleration Probe (IMAP), which launches later this year to study solar wind particle acceleration through the solar system and its interaction with the interstellar environment.

A long journey

SPHEREx spent years in development before getting the green light in 2019.
NASA’s Jet Propulsion Laboratory managed the mission, enlisting BAE Systems to build the telescope and spacecraft bus, and finalizing it as Los Angeles’s January wildfires threatened its campus. Scientists from 13 institutions in the U.S., South Korea, and Taiwan will analyze the resulting data, which Caltech’s Infrared Processing & Analysis Center will process and house, and the NASA/IPAC Infrared Science Archive will make publicly available. [Image: JPL] “I am so unbelievably excited to get my hands on those first images from SPHEREx,” says Korngut. “I’ve been working on this mission since 2012 as a young postdoc and the journey it’s taken from conceptual designs to here on the launcher is just so amazing.” Adds Viall, “All the PowerPoints are now worth it.” View the full article

-

Rightmove pushes for growth as web traffic bump signals property revival

Property listings group says house-hunters spent 1bn more minutes on its site last year View the full article

-

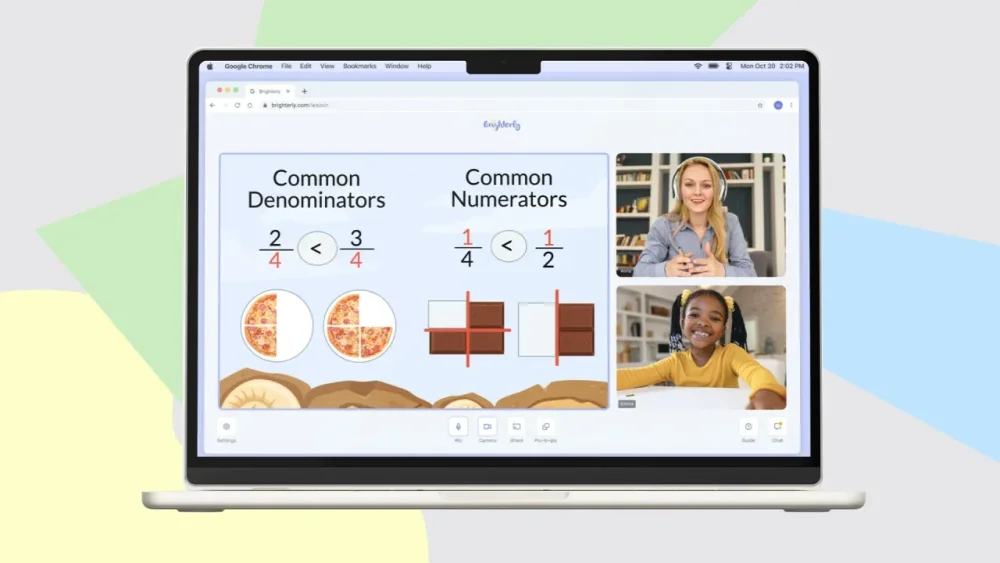

This education startup is using AI to help students learn. It says tech should never replace human teachers

In 2021, Eugene Kashuk was looking for a new venture. The Ukrainian entrepreneur realized in the wake of the pandemic that there was a large gap in education. Students were lagging behind, particularly in math. Kashuk started Brighterly, a platform that connects math teachers from all across the globe with students in the United States for private tutoring. Brighterly offers private lessons for $20 per 45-minute lesson—much cheaper than the average rate of about $40 per hour in the United States. In part, Brighterly is able to keep costs down because it uses AI to generate lessons so teachers are able to use their time to focus on their student instead of coming up with problem sets and exams. Fast Company chatted with Kashuk about Brighterly’s growth story and the role of AI in education. How does Brighterly work? Brighterly founder and CEO Eugene Kashuk [Photo: Brighterly] We are a marketplace that connects teachers with students, but we’re not an ordinary marketplace where there’s no control over quality levels or standardization. We have a pretty sophisticated way of hiring and managing teachers and only accept 3% of applicants. We look for teaching skills and soft skills: 90% of our teachers have prior teaching experience. We also have our own custom methodology and curriculum designed by a team of in-house experts, and then we use AI to generate content. On the other side, we’ve got a platform that connects teachers, parents, and kids and allows for lessons to happen. Lessons themselves are fun, interactive, and gamified, and it all revolves around the school curriculum. It’s a high-impact education where we are able to gain academic results as fast as possible. You mentioned using AI to create lessons. How do you make sure it’s not hallucinating or creating misinformation? We are human driven in terms of the content that we create. There are some processes that you can automate using AI. For example, you need to explain fractions to a kid. 
The best way to do it is to find a circular object such as a pizza you split into pieces. You can use AI to generate images. You can use AI to generate ideas on what kind of circular object will work the best here and will be engaging and fun for the kids. We don’t ask AI to tell us what to teach. As we see it, you can only use AI to create content that is aligned with what you teach. What do you see as the role of AI in education? When I think of AI technologies replacing human educators, I’m more afraid than excited. I’ll caveat and say the technology evolves so fast that you never know what it will look like in a few years. At this point, I don’t see any potential that AI tutors might replace real human educators. In order for education to work, teachers need to form an emotional bond with their students. Not everything can be covered by logic and algorithms; you need to have that human input to understand what the child really needs. We’re not at a moment where AI can really replace human interaction in education. However, we have great capabilities to generate content or to generate language to help create lessons and personalized assessments, which can be very useful for educators. So you’re saying AI can lighten the load for teachers so they can focus on nourishing the emotional bond with students. And so teachers can also focus on decision-making. You need a human teacher to understand how well a student is doing and assess what they need next. For example, maybe a student seems to grasp a topic but the teacher can sense that they’d benefit from some more repetition. I don’t see at this point how AI can pick up on moments like this. Is there anything else you’d like to add? The education gap isn’t just in math—there’s also a reading problem. We get daily requests for more reading lessons, so we’ll be launching a reading course as well. 
There was a COVID relief program that allocated resources to cover those gaps, but it’s unclear if it’ll be funded in the future. In the meantime, the knowledge gap is growing. Currently, we only cover elementary and middle school, but we’ll also be launching a high school product to help with that gap. Children are the future and right now existing solutions for the knowledge gap aren’t working. We need more. View the full article

-

We Added a $250 AI Tools Stipend for the Buffer Team: Here’s Why

At Buffer, we are always looking for ways to help our team work more effectively and creatively. Our latest investment in productivity is a company-wide AI Tools Stipend of $250 per teammate per year. This initiative reinforces our ongoing commitment to supporting innovation while giving our teammates the autonomy to choose tools that best fit their individual workflows. Here's why we're introducing this stipend and how we expect it to impact our work. The evolution of AI in our workflows Over the past year, we've seen AI tools rapidly transform how many of us work. What started as occasional experimentation with tools like ChatGPT has evolved into more regular integration of AI assistants in various aspects of our workflow — from drafting content and generating ideas to debugging code and analyzing data. Late last year, our Engineering Managers began exploring the idea of offering a stipend specifically for Engineers to experiment with productivity-boosting AI software. We noticed some inconsistencies in reimbursement requests for these tools and aligned on providing structured support for this exploration. The positive impact on our engineering team made it clear that AI tools could benefit everyone at Buffer, regardless of their role. That's why we've decided to extend this stipend to all teammates. Why we're introducing an AI tools stipend The pace of AI tool development has accelerated dramatically, and we want to empower our team to experiment and find what works best for their specific needs. 
There are a few key reasons behind this decision: Autonomy and flexibility: Different roles benefit from different AI tools, and we want teammates to have the freedom to choose what works for them rather than prescribing a one-size-fits-all solution. Reducing friction: By providing a dedicated stipend, we remove financial barriers to trying new tools that might significantly improve productivity. Learning together: As we all explore different AI tools, we can share insights and recommendations, collectively building our understanding of how these technologies can best support our work. Staying ahead of the curve: AI technology is evolving rapidly, and we want to ensure our team has access to cutting-edge tools that help us continue delivering exceptional service to our customers. How our AI stipend works We've allocated $250 per teammate per year for AI tools. With our current team size of 71 people, this represents a potential additional operating expense of up to $17,750 annually. The stipend offers flexibility, allowing teammates to maintain a yearly subscription to one primary tool, access others occasionally for specific projects, or mix and match several tools throughout the year. We've designed the AI Tools Stipend to be straightforward and flexible: Eligibility: All Buffer teammates are eligible to use the full $250 stipend annually. Covered tools: The stipend covers AI-powered tools such as ChatGPT, Claude, Midjourney, Cursor, Cody, Spiral, Raycast, and similar tools that enhance productivity and creativity. Subscription recommendation: We suggest opting for monthly subscriptions rather than annual commitments to maintain flexibility in trying different tools. Free alternatives: Many AI tools offer free versions, so we recommend exploring those first before committing to a paid plan. 
Additionally, Gemini Advanced is already available through our Google Workspace. Knowledge sharing: In the spirit of transparency, teammates who use the stipend are encouraged to share the tool they're exploring in our #culture-ai Slack channel so we can all learn together. Learning together: building an AI knowledge base One of the most exciting aspects of this program is the opportunity to collectively discover and share best practices for using AI tools. By encouraging discussion in our #culture-ai Slack channel, we hope to: Create a repository of real-world use cases specific to Buffer's work. Help teammates discover tools that might benefit their particular workflow. Identify patterns in how different teams are leveraging AI. We already have some early success stories: Content Team: Experimenting with AI for research and ideation while maintaining our unique voice. The stipend is already having an impact here as our content writer, Tami, wrote an article detailing an experiment with five AI chatbots and how they might fit into creators’ workflows. Engineering Team: Boosting productivity through AI-assisted coding. Customer Advocacy Team: Exploring ways to enhance customer interactions with AI support. Through these shared experiences, we'll continue to refine our understanding of how AI can best support our work at Buffer. Over to you This stipend is just one part of our broader approach to thoughtfully integrating AI into our work at Buffer. We see AI tools as assistants that can handle routine tasks and provide creative inspiration, freeing up our human team to focus on the work that requires genuine human connection, creativity, and strategic thinking. We’d love to hear your thoughts: Do you have questions about how we're approaching AI adoption at Buffer? Are you using AI tools in your work? 
Which ones have you found most valuable? As always, we're learning as we go — we believe in the potential of AI to enhance our work while still keeping human creativity, connection, and judgment at the center of what we do. Read more about AI at Buffer: 🧠 The AI Mindset Shift: How I Use AI Every Day 📚 How 1.2 Million Posts Created with Buffer’s AI Assistant Performed vs Human-Only — and What That Means for Your Content 🤖 How Buffer’s Content Team Uses AI 🛠️ The Story Behind Buffer’s AI: An Interview with Diego Sanchez View the full article

-

There’s a reason the new ‘White Lotus’ opening credits feel different

“But what is death?” I am sitting down with Katrina Crawford and we are here to talk about the White Lotus Season 3 opening credits. Together with Mark Bashore, Crawford runs the creative studio Plains of Yonder, which has crafted the White Lotus main titles for every season so far. But that question about death wasn’t posed by me. It was posed by her. And it challenges us to reflect on the meaning of death, and the many ways to die. Since White Lotus season 3 premiered on February 16, the internet has been abuzz with theories and criticisms around who died and what the opening sequence means. In response, HBO has said: “You’ll get it soon enough.” So while we wait, we decided to call up Crawford and Bashore so we can dissect one of the most iconic main titles in modern history. The biggest takeaway? Some things can have more than one meaning. [Photo: Courtesy Plains of Yonder] Easter eggs or red herrings? Plains of Yonder has made over a dozen main titles for shows like The Decameron and The Lord of the Rings TV show, Rings of Power. But Crawford says that this title, for White Lotus Season 3, is by far the longest they’ve ever spent developing. While some showrunners don’t consider the main title until the end, Crawford says that Mike White gave the team a whole 10 months to craft the sequence. When they started, Cristobal Tapia de Veer, who wrote the music for previous seasons’ openers, was still composing the new soundtrack. The crew wasn’t even shooting yet. All they had was the script. White Lotus Season 3 has eight episodes. They got the script for the first seven episodes. “We know a lot,” Crawford tells me. But they don’t know who dies. [Photo: Courtesy Plains of Yonder] Crawford combed through those scripts and crafted meticulous profiles for every character, where she tried to understand who these characters are—or who she thinks they are. Once the profiles were complete, she assigned dozens of images to them. 
Sometimes, she paired a character with an animal (a stoned monkey for the North Carolina mom played by Parker Posey). Sometimes, she crafted a scenario around them (Jason Isaacs’s Timothy Ratliff character appears coiled up in a tree with knives for branches.) These images might intimate a character’s fate or personality, but of course, some interpretations are more literal than others. “Maybe someone is presenting one way, but something else is truth,” she says. [Photo: Courtesy Plains of Yonder] Animal instincts As with every season, animals carried much of the symbolism. “We’ve always found that using animals are a better metaphor than people,” says Bashore. Season 1 was obsessed with monkeys; Season 2 introduced humping goats; Season 3 goes all in on mythological creatures that are half-human, half-beast. (Crawford spent a month poring over Thai mythology books.) The intro opens with a circus of animals, and the first humans to be portrayed are human faces attached to bird bodies. A bit later into the sequence, Lek Patravadi’s Sritala Hollinger character (the hotel owner) appears by a pond, holding a creature that is half human, half bird—perhaps a clue about her mysteriously absent husband. Most of these metaphors are predictably obscure. Aimee Lou Wood (who plays Chelsea) appears in the middle of an incriminating scene depicting a leopard that has bitten off a deer’s head with two foxes bearing witness. Which animal is she? Meanwhile, Natasha Rothwell, who plays Belinda from Season 1, is portrayed next to a stork staring down at its reflection in the water, while a crocodile lies in wait. Will it snap her up in its jaws? [Photo: Courtesy Plains of Yonder] Fiction or reality? That the team spent ten months developing the title isn’t so surprising considering the complexity of these characters. But there is one more character in the story, and that is Thailand. Like with the first two seasons, the location plays a key role in the story. 
To build that sense of place, the team spent ten days filming at three Royal temples in Thailand. “We shot the daylights out of it,” says Bashore, noting that they took over 1,000 photos of patterns, colors, outfits, and of course, those iconic Thai rooflines. “We looked for quick visual cues, patterns that feel iconically Thailand.” [Photo: Courtesy Plains of Yonder] Getting permits to film was no small feat considering the sacred nature of the temples. The team had to coordinate access with HBO, and other logistical challenges meant that the title took longer to make. But could the delay signal something else? Some shows, like Game of Thrones, have created main titles that change from week to week. Was the White Lotus Season 3 main title so challenging to make because the team had to tweak it as the season progresses? Crawford gives a cheeky shrug that neither confirms nor denies it: “We can’t talk about anything you haven’t seen.” What we can talk about is what Crawford calls “the Temple of White Lotus.” Indeed, the temples help anchor the show in Thailand, but the real star of the show is the White Lotus resort, which may have been filmed at the Four Seasons Resort Koh Samui, but remains a fictional place. To emphasize the otherworldly setting, the team broke down the photographs they took into little squares and shuffled them to create new images out of them—like a patchwork that looks and feels real, but ultimately isn’t. “We’re not trying to say this is a real place,” says Bashore. “It’s just a vibe we’re soaking in.” [Photo: Courtesy Plains of Yonder] This vibe ultimately blossoms in the seven worlds the team created for the title sequence. The story begins in the jungle, then unfolds in a village, a temple, a pond (in which we see Sam Nivola’s body afloat), a gloomier forest with ominous snakes coiled around trees, an epic battle scene, and the grand finale—“disaster at sea”—where a throng of men gets swallowed by giant fish. 
Could this mass killing scene signify more than one death in the show? Crawford’s response? You know it already: “But what is death?” View the full article

-

Google’s AI summaries are changing search. Now it’s facing a lawsuit

For more than two decades, users have turned to search engines like Google, typed in a query, and received a familiar list of 10 blue links—the gateway to the wider web. Ranking high on that list, through search engine optimization (SEO), has become a $200 billion business. But in the past two years, search has changed. Companies are now synthesizing and summarizing results into AI-generated answers that eliminate the need to click through to websites. While this may be convenient for users (setting aside concerns over hallucinations and accuracy) it’s bad for businesses that rely on search traffic. One such business, educational tech firm Chegg, has sued Google in federal district court, alleging that AI-generated summaries of its content have siphoned traffic from its site and harmed its revenue. Chegg reported a 24% year-on-year revenue decline in Q4 2024, which it partly attributes to Google’s AI-driven search changes. In the lawsuit, the company alleges that Google is “reaping the financial benefits of Chegg’s content without having to spend a dime.” A Google spokesperson responded that the company will defend itself in court, emphasizing that Google sends “billions of clicks” to websites daily and arguing that AI overviews have diversified—not reduced—traffic distribution. “It’s going to be interesting to see what comes out of it, because we’ve seen content creators anecdotally complaining on Reddit or elsewhere for months now that they are afraid of losing traffic,” says Aleksandra Urman, a researcher at the University of Zurich specializing in search engines. Within the SEO industry, anxiety over artificial intelligence overviews has been mounting. “Chegg’s legal arguments closely align with the ethical concerns the SEO and publishing communities have been raising for years,” says Lily Ray, a New York-based SEO expert. 
“While Google has long displayed answers and information directly in search results, AI overviews take this a step further by extracting content from external sites and rewording it in a way that positions Google more as a publisher than a search engine.” Ray points to Google’s lack of transparency, particularly around whether users actually click on citations in AI-generated responses. “The lack of visibility into whether users actually click on citations within AI overviews leaves publishers guessing about the true impact on their organic traffic,” she says. Urman adds that past research on Google’s featured snippets—which surfaced excerpts from websites—showed a drop in traffic for affected sites. “The claim seems plausible,” she says, “but we don’t really have the evidence to say how the appearance of AI overviews really affects user behavior.” Not all companies are feeling the squeeze, however. Ziff Davis CEO Vivek Shah said on an earnings call that AI overviews had little effect on its web traffic. “AI’s presence remains limited,” he said. “AI overviews are present in just 12% of our top queries.” It remains to be seen whether Chegg is an outlier or a bellwether. Ray, for her part, believes its lawsuit could be a pivotal moment in the fight over AI and SEO. “This case will be fascinating to watch,” she says. “Its outcome could have massive implications for millions of sites beyond just Chegg.” View the full article

-

New boss? Here’s what you need to do

Unless you’re at the very top of the food chain in your organization, you report to someone. And that manager is important for your career success. They will evaluate your performance, give you feedback and mentoring, greenlight ideas, and provide support elsewhere in the organization for things you’re doing. Because of all the roles that a supervisor plays for you, it can be stressful when a new person steps in, or you get promoted and start reporting to someone new. There are several ways you can make this transition easier and lay the groundwork for a fruitful relationship with your new boss: Be mindful of the firehose When your supervisor is replaced with someone else, that person is stepping into a new role. Whenever you take on something new, there is a lot that comes at you those first few weeks. You need to get to know your team, your new responsibilities, your new peer groups. All of that happens while work needs to continue. That’s the reason the information dump you face when you first start a role is often referred to as “drinking from the firehose.” If your new boss is drinking from that hose, then you want to provide them with information slowly. Invite them to let you know when you can have a chance to meet and talk, recognizing that it may take them a week or more to get settled in before they’re ready to have an in-depth conversation. As your supervisor gets settled, you can expand the amount of information you provide. Giving them that information when they’re ready to receive it will greatly increase the likelihood it gets read at all. Provide an introduction You do want to introduce yourself in stages. Start with a quick, one-paragraph introduction to share who you are, how long you’ve been with the organization, and your role on the team. If there are one or two critical things that your supervisor needs to call on you for, then highlight those as well. After that, you can add more information about your broader responsibilities. 
Highlight projects that you’re working on that may need your supervisor’s input or attention. You might consider creating a cheat sheet for your new boss that lists the things you’re working on, your best contact information, and your key strengths and weaknesses. That way, your supervisor knows when to call on you and the kinds of projects where it might be helpful to include you. After the initial rush, you can add details about projects that don’t need immediate attention and other projects you have been involved in so that your supervisor can recognize the contributions you’ve made. Talk about expectations Your previous supervisor not only knew what you had done but also had a sense of your career trajectory, interests, and goals. Hopefully this person was also actively engaged in helping you grow and providing opportunities for you to move forward with your career goals. Your new boss doesn’t know any of these things about you. If you have expectations for how you want to be treated and what you would like your supervisor to do to help you succeed in your role and advance, you need to talk about those expectations. It’s also helpful for you to discuss any weaknesses that you would like help to address. It is common to want to start your interactions with your supervisor by focusing on your strengths. And you should do that. But you might also be tempted to hide your weaknesses. Instead, it can be useful to be up front with those aspects of your work that you are still developing. It can ensure that you get help when you need it and that you’re considered for professional development opportunities. Ask for what you need Consistent with highlighting your weaknesses, you should generally ask your new boss for what you need from them. If you like to get feedback in the middle of projects, ask for it. If you need more information about the strategy behind a project or plan, ask. Nobody is a mind reader. 
And the less well someone knows you, the less able they are to anticipate what you need from them. Rather than hoping your new boss will give you everything you need, you’ll have to ask for it. Usually, a supervisor will be glad to have some guidance about how you’d like to engage with them. Asking for what you need is the best way to get the relationship with your new supervisor off to a good start. View the full article

-

OpenAI: Internal Experiment Caused Elevated Errors via @sejournal, @martinibuster

OpenAI published a new write-up explaining how an internal experiment caused significant errors. The post OpenAI: Internal Experiment Caused Elevated Errors appeared first on Search Engine Journal. View the full article

-

This $295 gadget is like a Keurig machine for makeup

Humanity has sequenced the genome and built artificial intelligence, and yet it’s still shockingly hard to find the right foundation shade. I’ve spent hours at Sephora searching for a shade that doesn’t make my skin look ashy or unnatural. Then, when I finally do find a match, my skin gets darker after a day in the sun, and the color no longer works. I’m not alone in my frustration. Last year, makeup brands sold $8.4 billion of foundation around the world, but you can still find social media brimming with people complaining about how hard it is to find the right shade. A new brand, BoldHue, wants to solve this problem forever. The company has created a machine that scans your face in three places, then instantly dispenses a customized foundation shade. Using a system similar to Keurig pods, the machine comes with five color cartridges that mix to create the right color; once they run out, you order more. BoldHue Co-Founder and CEO Rachel Wilson and Artistic Director Sir John [Photo: Boldhue] The product could revolutionize the way that everyday consumers do their makeup at home—and also make it far easier for professional makeup artists to create the right shade for their clients. Fueled by $3.37 million in venture funding from Mark Cuban’s Lucas Venture Group, BoldHue believes it can bring this technology to all kinds of other cosmetic products. The Quest To Find Your Shade Karin Layton, BoldHue’s co-founder and CTO, was an aerospace engineer who worked at Raytheon. Five years ago, she realized that her high-end foundation didn’t accurately match her skin. As a hobby, Layton dabbled in painting and had a fascination with color theory. So she began tinkering with building a machine that would produce a person’s exact skin shade. During the pandemic, after Layton decided to turn her idea into a real company, she brought in her childhood friend and serial entrepreneur, Rachel Wilson, as her business partner. 
“I really resonated with the pain points she was trying to solve because I am half Argentinian,” says Wilson, who is now CEO. “And while I present as white, I have undertones that make it complicated for me to find the right shade. I always look like a pumpkin or a ghost when I wear foundation.” [Photo: Boldhue] Women of color, in particular, have trouble finding the right shade. For years, the makeup industry focused on creating products for Caucasian women, leaving Black and brown women to come up with their own solutions. This only began to change a decade ago. In 2015, I wrote about a chemist at L’Oreal, Balanda Atis, who went on a personal quest to develop a darker foundation that wouldn’t make her skin look too red or black. L’Oreal eventually commercialized the product she created and promoted Atis to become the head of the Women of Color lab, which focuses on creating products for women of color. Danessa Myricks, a self-taught makeup artist, spent years mixing her own foundation, combining dark pigments she found at costume makeup stores with drugstore foundations. In 2015, she launched her own beauty brand, Danessa Myricks Beauty, and four years ago, Sephora began to carry it. [Photo: Boldhue] Today, there are more options for women of color, but many women still struggle to find the right shade. BoldHue believes the solution lies in technology. Color matching technology already exists, but it is not particularly convenient for consumers. Lancome has a machine that color matches, but it’s only available in certain stores. Sephora has ColorIQ, which scans your face and matches you to different brands. But part of the problem is that your skin tone isn’t static; it is constantly changing based on how much sun exposure you have, especially if you have a lot of melanin. “If you order a shade online, your complexion may have changed by the time you receive it seven days later,” says Wilson. 
Color-Matching At Home Wilson and Layton believe that having an affordable, at-home solution to color matching could be game-changing. The machine comes with a wand. When you want to create a new foundation shade, you scan your skin on your forehead, your cheek, and your neck. Then the machine instantly dispenses about a week’s worth of that shade into a little container. The machine can store that shade for you to use in the future. But having the machine in your house means that you can easily re-scan your face after a day at the beach to get a more accurate shade. If there are multiple people in a household (or say, a sorority house) who wear foundation, they can each scan their faces to produce the perfect shade. And it could transform the work of makeup artists who typically mix their own shades for their clients throughout the day. “They’re lugging around pounds of products to set and are forced to play chemist all day long,” says Wilson. “If we can shade match for them in one minute, they can focus on the artistry part of their job, and they’re wildly excited about that. It also means they can book more clients in a day, because they have more time.” While on the surface, BoldHue’s technology seems to disrupt the foundation industry, Wilson believes it could actually empower other makeup brands. Each makeup brand has its own formula that influences the creaminess and coverage of their foundations. BoldHue could create a brand’s formula in the machine, but have the added benefit of highly specific color matches. BoldHue is already in talks to partner with brands to create customized foundations for them, much the way that Keurig partners with brands like Peet’s and Illy to create pods for the coffee machines. But ultimately, the possibilities go beyond foundation. With this technology, BoldHue could create other color cosmetics, from concealer to lipstick to eyeshadow. “We think of ourselves as a technology company with a beauty deliverable,” Wilson says. 
View the full article

-

Bank of England rate setter warns of higher UK inflation risk driven by wages

Deputy governor Dave Ramsden says interest rate cuts must be gradual View the full article

-

Top Keyword Planning Project Management Template

Key takeaways Embarking on the journey of enhancing your website’s visibility can be daunting, especially if you’re new to the realm of SEO. A fundamental step in this process is conducting effective keyword research, which serves as the cornerstone of organic growth by driving traffic to your site. To streamline this task, various free templates… The post Top Keyword Planning Project Management Template appeared first on project-management.com. View the full article

-

Uh oh. TikTok just made its desktop experience way better

TikTok just updated its desktop viewing experience to offer a smoother UX, expanded features, and more ways to watch. I wish it would go back to how it was before.

It’s no secret that TikTok has a mobile-first design. Its beloved hyper-specific algorithm and For You page, along with its wholehearted embrace of short-form video, have inspired copycats ranging from Instagram to LinkedIn and Substack. TikTok has even changed the fabric of culture itself, shortening attention spans and shaping the music industry as we know it.

While TikTok shines on mobile, its desktop experience has historically been significantly less intuitive. The new desktop browser is, by all accounts, a marked improvement. But, for those of us who turn to the clunky desktop TikTok to cut down on screen time, it’s not necessarily a good thing.

Ugh, TikTok’s new desktop browser is better

The biggest change to TikTok’s desktop browser is the noticeably smoother UX. Previously, scrolling through videos on the homepage could feel delayed and glitchy, which quickly became frustrating given that it’s the platform’s main function. Now, each clip transitions smoothly into the next, an element of TikTok’s new “optimized modular layout” that offers “a more immersive viewing experience and seamless feed exploration,” according to a press release. The look of the platform has also been cleaned up and simplified, including via a minimized navigation bar, to “reduce distractions” during doomscrolling.

Beyond the improved UX, the updated desktop TikTok also comes with a few new features. It’s poached the Explore tab straight from the app, giving users another, less tailored feed to explore. There are now also full-screen live streaming modes for gamers, a web-exclusive floating player on Google Chrome so users can watch brainrot Subway Surfers TikToks while they shop online, and a collections tool that can organize saved videos into subcategories.
And, yeah, on the surface, all of these changes are reasonable responses to TikTok’s lackluster web browser experience. They make it more frictionless, intuitive, and enjoyable. But did the developers ever consider that maybe some of us liked it when it was bad?

Can we just not?

When TikTok entered the mainstream early in the pandemic, I downloaded it on my phone for a total of about two days. The reason it didn’t make the cut in my app library was not that it was bad, but that it was actually too fun, so much so that reading my AP Lit homework started to feel like an insurmountable task when those little videos were, like, right there.

For me, the ideal screen time solution has been to delete social media apps from my phone and only check them when I’m on my computer, where their desktop counterparts tend to be more outdated and, sometimes, downright annoying. My one exception to this rule is YouTube Shorts, but only because its algorithm is leagues behind TikTok’s and therefore tends to drive me away by recommending one too many English hobby horsing videos. Am I still addicted to these apps? Most definitely. But do I feel like I have to check them every 30 seconds? Thankfully, no.

TikTok’s desktop experience used to similarly serve as a refuge from the mobile app itself. It was a safe place to get a quick taste of what’s happening online without getting sucked into a three-hour rabbit hole about giving butter to babies. Its irritating quirks were precisely the point, and, I would argue, plenty of other desktop users likely turned to this version for the same reason. Now, though, as the desktop experience creeps ever closer to the actual mobile app, we’re all going to have to figure out where to relegate TikTok so that we can hack our brains out of craving it.
View the full article