Everything posted by ResidentialBusiness

-

Is there a better way to teach business?

I was asked to be the keynote speaker recently for an important conference at Rutgers Business School on the future of business education. I thought it would be helpful for business school leadership and students and for recruiters of business school graduates to recap my message in this Playing to Win/Practitioner Insights (PTW/PI) piece. It is called The Future[s] of Business Education: Two Strategy Paths. And as always, you can find all the previous PTW/PI here. Audience participation The conference attendees were mainly U.S. business school deans and other senior faculty members. The array of deans was quite impressive with deans from leading schools including Cornell, Goizueta, Haas, Kellogg, Stern, Ross, Tepper, Tuck, and Wharton. I started with a bit of audience participation by asking all tenure stream academics from business schools to stand up. I then asked them to sit down if their school has in its MBA program a required statistics course that provides instruction on how to make an inference from a sample to the universe from which the sample is drawn. As I expected, 100% of the audience sat down. That is now completely standard fare. I asked them to stand back up and then to sit down if their school seeks to convince MBA students that they should make their decisions based on rigorous data analysis. Again, as I expected, 100% sat down. So, I got confirmation that business education universally teaches students both how to make inferences from data and that they should make data-based decisions. Making inferences from data I then dove into making inferences from data. As I have pointed out many times before and recently at Nudgestock in London, statistics teaches students that the only legitimate way to make an inference to the universe from which a sample is drawn is to ensure that the sample is representative. 
You can’t ask a sample of men what they want in their Electric Vehicle (EV) and infer what consumers want in their EV because men are not representative of all consumers. The same would hold for a sample of women or young consumers or east coast consumers. Statistics teaches that you can legitimately use a sample of men only if you are trying to determine what male EV buyers want, because that sample is representative of the universe. In addition, the sample must be big enough to be statistically significant. However, it is important to realize that 100% of all data that we use in such statistical analysis is from the past. We never have data from the future. Hence, when we use data analysis to tell us what to do, we are implicitly assuming that the future is identical to the past. Otherwise, the sample wouldn’t be representative and business school statistics class tells us that we shouldn’t be using it. Yet our marketing, strategy, finance, operations, and HR classes tell students to make decisions based on rigorous data analysis. The Aristotelian distinction I then explained the Aristotelian distinction about which I have written before. Greek philosopher Aristotle was the father of science and his Analytica Posteriora the most important work in the history of science. While he created the scientific method, which was formalized in the Scientific Revolution 2000 years later, he did not prescribe its use everywhere. He made a critical distinction between two parts of the world. In one part, things cannot be other than they are. For example, anywhere on the earth’s surface, gravity has always and will always cause objects to accelerate toward the ground at 32 feet/second², because when it comes to gravity, things cannot be other than they are. But when it comes to smartphones, there were zero in the world in 1999 and probably (the estimates are all over the place) over seven billion now. 
Smartphones exist in the part of the world where things can be other than they are. That world changed dramatically with the introduction of the BlackBerry in 2000 and has changed pretty much every year since. Aristotle did more than make this distinction. He encouraged the use of his scientific method in the part of the world where things cannot be other than they are but warned against ever using it in the part of the world where things can be other than they are. The father of science was crystal clear and modern-day statisticians would affirm his logic. In essence, he was warning against the use of unrepresentative samples. For business educators this calls for an assessment of the degree to which business is in the cannot part or the can part of the world. The whole business obsession with VUCA (i.e., volatility, uncertainty, complexity, and ambiguity) suggests businesspeople see the future of business as constantly shifting—i.e., can, not cannot. Of course, there are exceptions. Plastic cools at a certain rate in an injection molding machine. But that phenomenon represents a tiny, tiny fraction of the business world. Consumers change, competitors change, technology changes, regulations change, and so on. The future is routinely different than the past. The business school schism Therein lies the fundamental business school schism. Business schools teach two things that can’t coexist in business. Businesspeople live in a world in which the future is routinely different than the past. But they are educated—and universally so as demonstrated by my audience participation—to use methods appropriate only for a world in which the future is identical to the past. This leaves business school students with a choice. On one hand, they can ignore their business education, but that begs the question: why spend time and money on something that you subsequently ignore? 
On the other hand, they can embrace their education and become terribly flawed technocrats, following the analysis despite its inherent logical inconsistency. I think they are choosing a bit of both. On one hand, they are actually doing more than ignoring their business education: they are skipping it entirely, especially at the MBA level. I pointed out in a 2013 speech at the Academy of Management that the number of U.S. students applying to U.S. MBA programs was in secular decline and, from what I can see, the decline has continued. On the other hand, the MBA is still the second-highest-volume graduate degree in America (after the one-year Master of Education, which has a built-in demand because teachers get an automatic salary bump with one). So, many are still embracing it. Two strategy paths This leaves two strategy paths for business schools. On one hand, they can keep teaching fundamentally flawed, logically inconsistent content and watch business education continue to decline for two reasons. First, many prospective students will take a pass on business education because they don’t want to be trained to be data technocrats. Second, the business world has only a limited appetite for absorbing data technocrats. On the other hand, they can do what I recommended in my speech. That is to teach the Aristotelian distinction and equip students to follow Aristotle’s instruction in the part of the world that can be other than it is, which is the dominant part of business. That entails teaching business students to imagine possibilities and to understand the logic of possibilities well enough to choose the one for which the most compelling argument can be made, which means focusing more on developing students’ logic capabilities than their analytical prowess. The business school reaction Sadly, I don’t come out of the conference feeling that business education will choose the second path. 
In business education (and probably any other kind of tertiary education), when convention is challenged it is attacked, which is what Thomas Kuhn described in The Structure of Scientific Revolutions—and it is exactly what happened at the end of my talk. The first audience question wasn’t a question; it was an assertion from a dean (don’t know who he was but I think he said his name was Bruce): “That was a lot of arm-waving.” My immediate reaction, which I verbalized, was that this was why I was delighted to have left the academy six years ago and haven’t thought a single day about going back. This is what the academy does. When it doesn’t like something because it challenges convention, somebody takes responsibility for launching an attack. And since they know behavioral economics, they know that the rest of the audience will anchor on the attack, and the challenger will be destroyed by brute force. Childish but true. I didn’t take the bait and instead of defending, I simply asked what in my talk constituted “arm-waving?” He didn’t like that much and mumbled around for a while then asked me to put up slide 13 and pointed to the second point and said I hadn’t explained it much. So, not explaining one point on one slide as thoroughly as he wished meant that the entire talk could be dismissed as “arm-waving.” Suffice it to say, he didn’t get the satisfaction he was looking for—and I think I can give myself credit for not eviscerating him. Twenty years ago, I would have. But I realize now that this is theater, and he was just playing his assigned role. Since the designated attack dog hadn’t succeeded, the rest of the audience questions were mild and not unfriendly. But I am quite convinced that nothing is going to change on this front. Business schools will continue to teach the schism—though perhaps they will do it more sheepishly. Practitioner insights Paradigms die hard—per Kuhn. 
The paradigm of business education teaching students to make rigorous data-based decisions is well entrenched—super well-entrenched. The standard approach of the people who depend on the continuation of the dominant paradigm is to fight any attempt to challenge it—whether they have any useful argument or not. That is where business education is today—and it isn’t going to change from within. My advice then is for two kinds of practitioners—business school students (prospective or actual), and companies that recruit from business schools. For students, lower your expectations, though it is a bit different for undergraduate business versus MBA education. For undergrads, you will pick up a language system for business and learn some useful business concepts. One way or another, you will have to do that—and this is one plausible way. But protect yourself. Understand that they are teaching across a schism, and it doesn’t make sense. Just ignore them. You can’t be a useful businessperson making rigorous data-based decisions the way it will be taught to you. For MBAs, think carefully. Your opportunity costs are much higher than for undergrads in business because the average full-time MBA has 4–5 years of business experience—and they give up two years of an already attractive salary to take a full-time MBA. You share some of the undergrad reasons for attending, but at a far higher opportunity cost. Many of you should take a pass. This isn’t an institution that is learning and getting better. It is entrenched in an agenda that isn’t helpful to the world—or you. For employers, it makes sense to recruit there. The biggest value of business education programs is selectivity. It is hard to get into a quality business program, so the schools have presorted for you. The second value, in the case of MBAs, is commitment due to the high opportunity cost they pay. 
They must have high commitment to personal improvement to incur the out-of-pocket and opportunity costs to get their education. So, it is a high-value cohort from which to recruit. But you need to recognize that you will have to deprogram many of them who will graduate believing that they need to make all their decisions entirely based on rigorous data analysis, because that is what they are taught. You will have to deprogram them for them to be useful to you. But if you understand that and have a system for deprograming, you will get human capital that is worth recruiting. View the full article

-

The paradox of the resilient, fragile global economy

A new IMF report exaggerates the gloomy economic backdrop, but there are valid reasons for policymakers to be glum. View the full article

-

AI investment boom shielding US from sharp slowdown, says IMF

Fund upgrades outlook for global growth but warns of more ‘pass-through’ of tariff costs to consumers. View the full article

-

UK risks higher inflation becoming entrenched, IMF warns

Bank of England should not lower interest rates before price pressure shows signs of easing, says chief economist. View the full article

-

JBL’s Tune Buds With Active Noise Cancellation Are Over 50% Off Right Now

We may earn a commission from links on this page. Deal pricing and availability subject to change after time of publication. If you’ve been looking for a great pair of noise-canceling earbuds that don’t cost a fortune, the JBL Tune Buds are now down to $44.95, their lowest price yet, according to price trackers. That’s less than half of what they originally sold for, which makes them a strong contender in the under-$50 range. JBL Tune Buds: $44.95 at Amazon (list price $99.95, save $55.00). These are true wireless earbuds that focus on delivering that signature JBL bass (deep and thumpy) but with enough flexibility to tweak the sound using the JBL app. Out of the box, they lean heavy on the low end, which works well for pop and EDM, but you can easily adjust the EQ if you prefer something more neutral. Performance-wise, the Tune Buds pack in more than you’d expect for the price. They support Bluetooth 5.3 and the AAC and SBC codecs, along with multipoint pairing, so you can jump between your phone and laptop without reconnecting. The active noise cancellation is solid for casual use: good enough to dull traffic noise or a chatty office, even if it can’t fully block the low rumble of a subway, notes this PCMag review. There’s also an IP54 rating, meaning these can handle sweat and light rain, making them suitable for workouts or daily commutes. Touch controls are customizable through the companion app, and there’s built-in Alexa support if you like using voice commands hands-free. As for the battery life, JBL claims up to 10 hours per charge with ANC on and 12 hours without, plus an extra 30 to 36 hours from the charging case. That’s easily a few days of use (your mileage may vary) without needing to plug in. 
The 10mm drivers cover the standard 20Hz to 20kHz range, and the earbuds come with three silicone tip sizes to help with comfort and fit. They don’t offer premium-level sound or the silence of high-end ANC sets from Sony or Bose, but they strike a good balance between affordability, sound, and everyday convenience.

Our Best Editor-Vetted Tech Deals Right Now
Apple AirPods Pro 2 Noise Cancelling Wireless Earbuds: $197.00 (List Price $249.00)
Samsung Galaxy S25 Edge 256GB Unlocked AI Phone (Titanium JetBlack): $819.99 (List Price $1,099.99)
Apple iPad 11" 128GB A16 WiFi Tablet (Blue, 2025): $319.00 (List Price $349.00)
Blink Mini 2 1080p Indoor Security Camera (2-Pack, White): $34.99 (List Price $69.99)
Ring Battery Doorbell Plus: $149.99 (List Price $149.99)
Blink Video Doorbell Wireless (Newest Model) + Sync Module Core: $34.99 (List Price $69.99)
Ring Indoor Cam (2nd Gen, 2-pack, White): $79.99 (List Price $99.98)
Amazon Fire TV Stick 4K (2nd Gen, 2023): $29.99 (List Price $49.99)
Shark AV2501S AI Ultra Robot Vacuum with HEPA Self-Empty Base: $359.89 (List Price $549.99)
Amazon Fire HD 10 (2023): $69.99 (List Price $139.99)
Deals are selected by our commerce team. View the full article

-

Tracking AI search citations: Who’s winning across 11 industries

Citations in AI search assistants reveal how authority is evolving online. Analyzing results across 11 major sectors shows which domains are most often referenced and what that says about credibility in an AI-driven landscape. As assistants condense answers and surface fewer links, being cited has become a powerful signal of trust and influence. Based on Semrush data from more than 800 websites, the findings highlight how AI reshapes visibility across industries. AI citation trends across industries The analysis surfaced several clear patterns in how authority is distributed across industries. Universal authorities Some domains appeared in the top 50 cited URLs across nearly all 11 sectors, with four domains appearing in every one: reddit.com (~66,000 AI mentions across 11 sectors); en.wikipedia.org (~25,000, 11 sectors); youtube.com (~19,000, 11 sectors); forbes.com (~10,000, 11 sectors); linkedin.com (~9,000, 10 sectors); quora.com (~8,000, 10 sectors). Other domains are sector-strong but globally influential: amazon.com (ecommerce and five other sectors); nerdwallet.com (finance-focused); pmc.ncbi.nlm.nih.gov (health and academic citations). Concentration and diversity by sector Citation concentration varies by sector. Most concentrated: Computers and electronics, entertainment, education. Most diverse: Telecom, food and beverage, healthcare, finance, travel and tourism. This means some sectors rely on a handful of go-to sources, while others distribute authority across a broader field. Relationships between visibility and SEO metrics AI visibility and AI mentions are strongly correlated (0.87). Organic keywords correlate more strongly with AI visibility (0.41) than backlinks do (0.37). Keywords and backlinks themselves correlate at 0.79. By sector, the coupling between AI visibility and backlinks is strongest in computers and electronics, automotive, entertainment, finance, and education. In these sectors, the scale of authority clearly helps drive AI references. 
Sector breakdowns Finance Media brands such as Forbes and Business Insider dominate citations, reflecting the importance of timely commentary and market analysis. However, NerdWallet shows that specialized finance experts can achieve high AI visibility by building deep evergreen guides and comparison content. This sector also shows one of the strongest correlations between AI visibility and backlink scale, suggesting that authority signals remain highly influential. Healthcare Academic and government domains are heavily cited. The dominance of PubMed Central (PMC), CDC, and national health portals underlines the central role of trusted peer-reviewed or official information. Wikipedia also appears consistently, often serving as a layperson-friendly entry point. Diversity is lower here compared with consumer-facing sectors, reflecting the need for evidence-based references. Travel and tourism Citations are spread across government advisories (for example, gov.uk travel advice), booking platforms, forums, and user-generated communities. This diversity reflects the mix of practical (visa, safety), inspirational (guides, blogs), and transactional (booking) content users need. The sector’s Herfindahl-Hirschman Index (HHI) score is low, suggesting no single authority dominates, and visibility is earned by serving very specific user needs. Entertainment User-generated platforms dominate. Reddit, YouTube, and Quora all appear near the top of cited domains, alongside reference sources such as Wikipedia and IMDb. This highlights how conversational, community-driven content is central to how AI assistants explain and contextualize entertainment. In this space, backlink counts are less predictive than breadth of coverage. Education Citations concentrate around reference authorities including Wikipedia, university portals, and open-courseware providers. 
Specialist learning platforms and forums also feature, but the dominance of well-known academic sources creates a more concentrated citation environment. Here, AI assistants lean heavily on authoritative, structured content. Computers and electronics Technology news and review sites dominate, with CNET, The Verge, and Tom’s Guide appearing prominently. Wikipedia is again present, but the sector is notable for its concentration, with citations clustering around a few highly recognizable review hubs. This sector also shows one of the highest correlations between AI visibility and backlink scale, underlining the competitive role of authority signals. Automotive A mix of consumer guides (for example, Autotrader, AutoZone) and publisher content. Insurance and financing providers also receive citations, reflecting user queries that span from buying cars to managing ownership. Citations are somewhat more evenly distributed, but AI assistants lean on a balance of transactional and informational sources. Beauty and cosmetics Influencer-led platforms and community discussion spaces are frequently cited alongside brand websites and review hubs. The combination of user-generated content and brand authority makes this sector more diverse than average. Here, social-driven citations compete with established publishing brands. Food and beverage Recipe hubs, nutrition authorities, and community cooking sites dominate. Wikipedia also features, especially for ingredient-level explanations. The sector has one of the lowest HHI values, meaning a wide diversity of domains are being cited. Backlink totals are less correlated with visibility here. Instead, topical coverage breadth seems to matter more. Telecoms Citations are relatively diverse, ranging from provider help portals to tech media and consumer advocacy sites. Forums like Reddit often feature in troubleshooting contexts. 
The sector’s low HHI suggests no single authority dominates, but users’ practical questions drive AI systems to reference customer-support-style material. Real estate Cited domains include large listing platforms (for example, Zillow-type sites), financial services tied to mortgages, and government portals for regulation and housing data. While concentrated, the sector also pulls from news sources when market conditions are being explained. Implications for brands and SEOs The patterns in AI citations carry direct lessons for brands and SEOs, highlighting how authority is built, what types of assets AI prefers to reference, and why traditional SEO levers now interact differently with visibility. Reference assets matter Evergreen guides, standards, and explainers attract citations from both search engines and AI models. To compete with Wikipedia or government sites, brands need to publish authoritative, fact-checked material that others can comfortably reference. Breadth of coverage drives visibility Domains with a wide organic keyword footprint consistently show stronger AI visibility. This means that covering an entire topic area comprehensively – not just optimizing for a handful of high-volume keywords – positions a brand as a reliable reference source. Sector rules differ Each sector rewards different authority signals. In healthcare, peer-reviewed or government-backed resources dominate. In entertainment, community-driven and UGC platforms rise to the top. In finance, explainers and calculators from expert brands are frequently cited. Brands need to adapt their content strategy to the trust model of their sector. Fewer links, higher stakes AI assistants often cite only a handful of sources per response. Being included delivers disproportionate visibility. Conversely, being absent means competitors capture nearly all of the exposure. 
This concentration raises the bar for what counts as a reference-worthy asset. Backlinks still matter, but less directly While backlink scale correlates with AI visibility, the correlation is weaker than for organic keyword breadth. This suggests backlinks remain an authority signal, but the breadth and relevance of content may be more critical in an AI-driven environment. User intent alignment AI assistants pull from sources that best align with the specific intent behind a query. Brands that anticipate user needs – whether transactional, informational, or troubleshooting – stand a better chance of being cited. Creating layered content (guides, FAQs, tools) that matches different intents strengthens visibility. Becoming a referenced brand Citations in AI search results reveal the trust networks that underpin the next wave of search. Wikipedia, Reddit, and YouTube are universal reference points, but sector-specific authorities also matter. For brands, the lesson is clear: to win visibility in AI-driven search, you need to be the page that others cite. That means authoritative content, breadth of coverage, and assets designed to be referenced. Analysis methodology The analysis drew from AI citation data spanning 11 sectors and more than 800 domains, using responses from Google AI Mode, Perplexity, and ChatGPT search. Two primary metrics were calculated: AI visibility score: The average share of responses in which a domain was cited across Google AI Mode, Perplexity, and ChatGPT search. AI mentions: The total number of times a domain was cited across those engines in a given sector. These metrics were then enriched with: Organic keywords (Semrush): The number of keywords for which a domain ranks in organic search. Backlinks (Semrush): The total backlinks pointing to a domain. Spearman correlation To measure the degree of correlation between metrics, I used the Spearman correlation coefficient. 
Unlike Pearson correlation, which assumes linear relationships, Spearman looks at whether the ranking of one metric moves in step with another. In simple terms, if domains with higher keyword counts also tend to rank higher for AI visibility, the Spearman value will be high even if the relationship is not a perfectly straight line. A value near +1 means the two rise together consistently, near -1 means one rises as the other falls, and near 0 means no clear pattern. Concentration of the HHI I then measured citation concentration using the Herfindahl-Hirschman Index, a metric borrowed from economics. It is calculated by summing the squares of market shares, in this case, each domain’s share of AI mentions in a sector. An HHI closer to 1 means a sector is dominated by just a few domains, while values closer to 0 indicate citations are spread more evenly. For example, an HHI of 0.05 suggests a concentrated landscape, whereas 0.02 points to greater diversity. By combining AI visibility, citation counts, SEO scale (keywords and backlinks from Semrush), Spearman correlations, and HHI concentration, I built a cross-sector picture of who holds authority in AI-driven search. View the full article
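The two calculations described in the methodology can be sketched in a few lines of Python. This is only an illustrative sketch, not the author's actual code: the function names (`hhi`, `average_ranks`, `spearman`) are hypothetical, ties are handled with average ranks (a common convention the article does not specify), and any numbers you feed in would be your own, not the Semrush dataset.

```python
from typing import Sequence


def hhi(mentions: Sequence[float]) -> float:
    """Herfindahl-Hirschman Index: sum of squared shares of total mentions."""
    total = sum(mentions)
    if total <= 0:
        raise ValueError("total mentions must be positive")
    return sum((m / total) ** 2 for m in mentions)


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values 1..n (ascending); tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman coefficient: Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5
```

Passing each domain's mention count in a sector to `hhi` gives that sector's concentration score, and passing two per-domain metric lists (for example, organic keywords and AI visibility) to `spearman` gives their rank correlation. Because only ranks are compared, a curved but consistently increasing relationship still scores 1.0.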

-

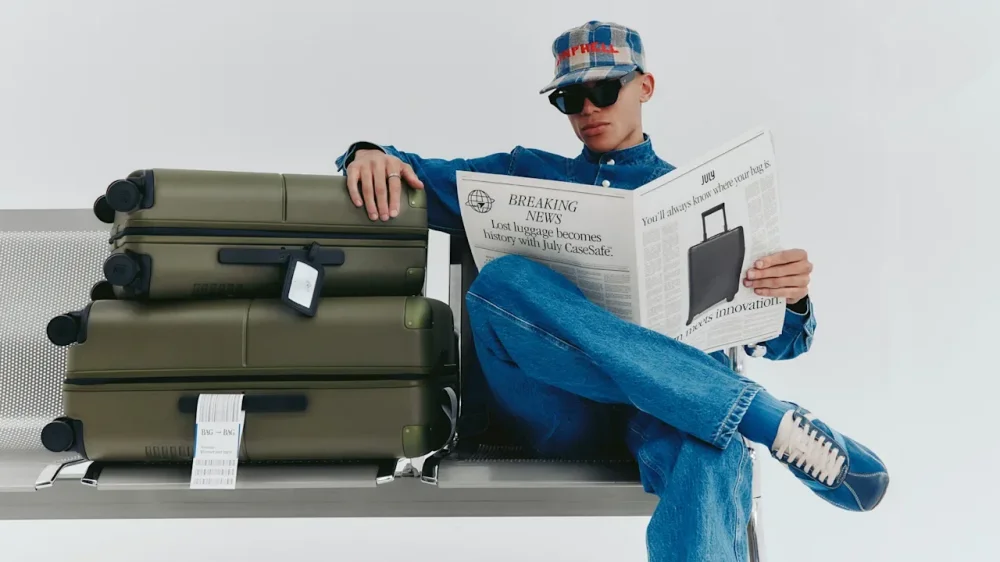

Meet the first-ever luggage with built-in AirTags

When Apple’s AirTag came out four years ago, one of the most obvious uses for it was for luggage. On my long trips to Asia, I always breathe a sigh of relief when I glance at my phone and find that my checked suitcase has been loaded onto the aircraft. And I often wish I had one in my carry-on suitcase, especially when the overhead bins run out of space and the flight attendant checks my bag at the gate. July, a fast-growing Australian startup, has become the first luggage brand to incorporate AirTags directly into its suitcases. The technology was made in partnership with Apple and Google, so the tags are integrated with both Apple’s Find My and Google’s Find Hub networks. On October 14, July unveils this new feature, which will eventually be incorporated into its full line of carry-ons and suitcases. Cofounder Athan Didaskalou believes trackers will soon become standard in all suitcases, but it is still important to him for July to be first to market with this technology. “We have a team of industrial designers on hand, and we like making things,” Didaskalou says. “The only way to stand out today is by continuing to innovate.” The Ubiquitous Roller Suitcase Step into an airport today, and you’ll see virtually every traveler pushing wheeled luggage. Didaskalou points out that each element in the now-ubiquitous roller suitcase was the result of a breakthrough in design. In 1987, an airline pilot developed the concept of a wheeled suitcase with a telescoping handle, a vast improvement over having to carry your suitcase like a briefcase. By the 1990s, most suitcase brands had shifted to this design. In recent decades there have been incremental improvements. After September 11, 2001, the Transportation Security Administration imposed a new regulation that all luggage locks had to have a keyhole that agents could access. Soon after, it became standard for all suitcases to have TSA locks. 
And a decade ago, brands began incorporating phone chargers into their suitcases so travelers could charge their phones on the go. (The TSA now forbids phone chargers in checked luggage, so chargers in suitcases must be removable.) The global luggage market is enormous: It was $38.8 billion in 2023, according to Grand View Research, and it’s projected to grow to $61.49 billion by 2030. Given that most suitcases today have the same set of standard features, brands often end up competing with each other based on aesthetics. Samsonite dominates the industry, owning a fifth of the market with its many brands, which include American Tourister and Tumi. Samsonite generated $3.68 billion in 2023. But there are many other players. At the high end, there’s Rimowa, known for making durable suitcases with distinct grooves. Over the past decade, a wave of startups has popped up with sleeker and more colorful designs at an affordable price point of $200 to $300 for a carry-on. Direct-to-consumer startups like Away, Monos, Béis, and Floyd all create trendy cases that target the millennial and Gen Z traveler. But it’s a crowded, competitive market, and some brands have struggled. Paravel, for instance, tried to create an eco-friendly suitcase, but it filed for bankruptcy in May of this year, and was acquired by the British suitcase brand Antler. Another luggage brand, Baboon to the Moon, was struggling to grow its revenue and was acquired by turnaround firm the Hedgehog Co. in 2023. Improving the Design of a Suitcase July was founded in Australia in 2019. Its sleek, colorful suitcases have become very popular in Australia and across the Asia Pacific region, which North American brands like Away and Monos have been slower to enter. Didaskalou wants July to stand out from competitors by rethinking the design of its suitcases in a more fundamental way. Over the past few years, the brand has been playing with the configuration of suitcases. 
It was among the first brands to launch the trunk format, where the suitcase doesn’t open in the middle, but rather toward the top. “Some people want depth when they’re packing,” he says. “It can be awkward to open your luggage in the middle and try to balance it on the luggage stand at your hotel.” When Apple and Google opened up the API for the AirTag, it occurred to Didaskalou that luggage tracking was the next frontier of suitcases. Many consumers were already putting AirTags in their suitcases, but it was not a seamless solution. “They might need to move the AirTag from one suitcase to another, or take it out to use it for something else,” he says. “Or they might forget to use it altogether. It’s just another thing to worry about when you’ve already got a lot on your mind.” July’s designers spent months trying to figure out how to incorporate AirTags seamlessly into suitcases. They ended up putting them into the strip of plastic at the top of the suitcase, right next to the TSA lock. When you first get the suitcase, you pull on the plastic tab that separates the battery from the AirTag to activate it. Then you press a button on the strip to activate it, to see your luggage in your Apple Find My or Google Find Hub app on your smartphone. Didaskalou says that while the experience is simple for consumers, it was complex to design. It was important to make the underside of the AirTag accessible to change its battery. However, the AirTag is on the same strip as the TSA lock. “We needed to have access to the AirTag but not expose the TSA lock,” he says. “We also needed everything to be very tightly secured, because things bounce and move when you travel.” July has patented some aspects of this AirTag component, but Didaskalou believes many other luggage brands will soon realize that consumers now expect to be able to easily track their luggage. So they will soon begin incorporating AirTags or other trackers directly into their suitcases. 
But he believes they will only become widespread in the next year or so. And ultimately, Didaskalou believes that it’s the larger luggage makers that are more likely to update their suitcases first. In many ways, he’s looking to compete with Samsonite, an innovator with roughly 20% of the market share. “Samsonite has always been first to come up with new materials and manufacturing processes,” Didaskalou says. “We’re proud because this is the first time we’ve beat them.” View the full article

-

Use the '2357' Method to Remember What You Study

Many established study methods grew out of old research and stuffy pedagogical theories, so when a new one crops up on social media it’s worth checking out, if only to gauge whether a modernized approach can pay dividends. Sometimes, the most tried-and-true, old-school methods are best, but that doesn't mean fresh updates on traditional ideas can't be great, too. One study technique that frequently makes the rounds on social media is dubbed the “2, 3, 5, 7” method (or usually just "2357"). What’s interesting about this method is that while it is new in a sense, it is a modification of one of those older, time-tested techniques. More on that below, as well as what you need to know to use 2357 for your next study session.

What is the 2357 study method?

When using the 2357 technique, you revise your notes and study materials over and over again, following a set schedule:

Day 1: Revise your initial notes
Day 2: Revise and review them
Day 3: Revise and review again
Day 5: Revise and review again
Day 7: Revise and review again

Each time you revise, you should identify and expand upon key facts that you need to remember. If you usually take notes by hand, digitizing them can serve as your first revision. Alternatively, you can play around with note types. On Day 2, you can redo your notes using the Cornell method, for instance, then make a mind map on Day 3. Taking a slightly different approach each time will force you to reconsider the material, identify elements you can expand on or you're not quite grasping, and think a little differently about how it all fits together. By the time you have completed that final revision on day seven, the content should be easy to retrieve from your memory with minimal effort. Days 5 and 7 should also focus a little more on reviewing, not just revising. One of those days can be dedicated to blurting, for instance, which is a technique that asks you to write down everything you can remember about a topic before checking against your notes and other resources for anything you missed. Try blurting a new note outline entirely.

Why the 2357 method works

This study method is effective because it combines elements of a few tried-and-true techniques, including spaced repetition, an established way to combat the so-called “forgetting curve” by increasing the amount of time between your study sessions until the information enters your long-term memory. This TikTok-beloved hack also employs elements of distributed practice, which operates on a similar theory. Studying via 2357 will work best if you slowly start weaning yourself off your notes and materials as you go, which forces you to practice active recall as you progress through the latter days of the cycle. Like I said, those final days of the cycle should be more about reviewing than revising. If you're running across information you're not quite grasping, mix in other techniques, like the Leitner flashcard method, which also relies on distributed practice to help you entrench material in your memory. Doing this all sounds easy, but it isn't quite. It might be harder than you think to remember to stick to this very specific schedule. If you find yourself struggling, call for backup. A school-focused planning app, like My Study Life, can help you create a schedule and carve out time for all these little studying tricks. View the full article
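If you would rather not rely on an app, the 2357 schedule is simple enough to generate yourself. The sketch below is only an illustration: the function name `review_schedule` and the example start date are made up here, and it assumes "Day 1" means the first day you work on the material.

```python
from datetime import date, timedelta

# Day numbers from the 2357 schedule; day 1 is assumed to be the first study day.
REVIEW_DAYS = (1, 2, 3, 5, 7)


def review_schedule(start: date, days=REVIEW_DAYS) -> list[tuple[int, date]]:
    """Return (day_number, calendar_date) pairs for each study session."""
    # Day 1 falls on the start date itself, so the offset is day_number - 1.
    return [(n, start + timedelta(days=n - 1)) for n in days]


if __name__ == "__main__":
    # Hypothetical start date, used only to show the output format.
    for day_number, when in review_schedule(date(2025, 3, 3)):
        print(f"Day {day_number}: {when:%a %d %b}")
```

Pasting the printed dates into a calendar or reminders app gives you the same nudge a study planner would.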

-

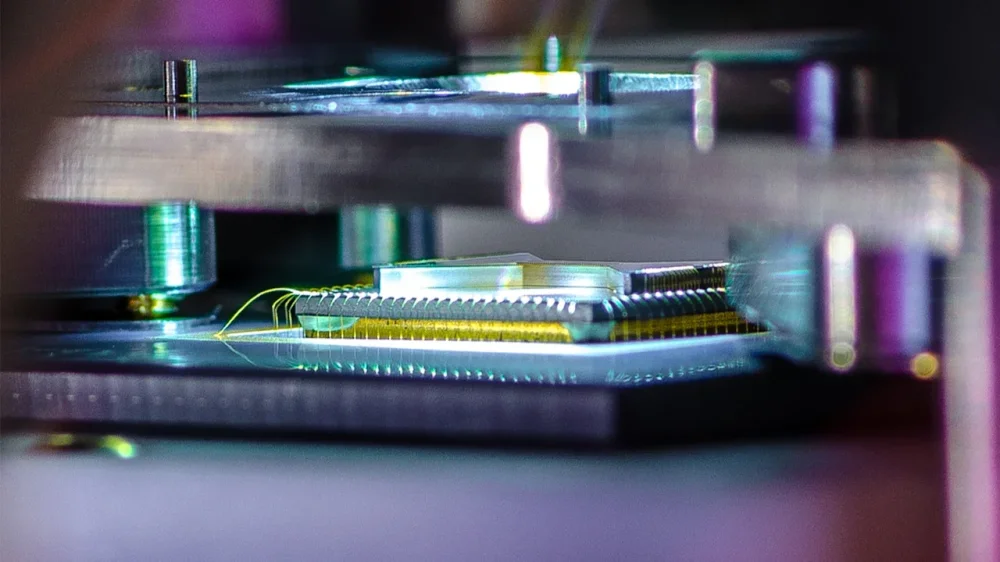

Quantum computing stocks soared again yesterday. The reason why may surprise even their biggest boosters

Shares in America’s “Quantum Four” quantum computing companies surged again yesterday. D-Wave, IonQ, Quantum Computing, and Rigetti all saw their stock prices jump by double-digit percentages. But why? The Quantum Four’s big stock price gains had nothing to do with radical new quantum computing breakthroughs. Instead, investors can thank banking giant JPMorganChase for the gains. Here’s what you need to know. Why did quantum computing shares surge yesterday? Yesterday, America’s four most prominent quantum computing companies saw their stock prices surge by double-digit percentages. But the genesis behind these soaring share prices wasn’t directly related to news about the companies. Instead, the upward movement in the Quantum Four’s share prices was largely due to financial giant JPMorganChase. On Monday, the investment bank announced a “Security and Resiliency Initiative” to invest in industries critical to America’s national economic security interests. This initiative will see JPMorganChase invest $1.5 trillion in select industries over the next 10 years. And the first wave of this funding—to the tune of up to $10 billion—has already been decided upon. The banking giant announced it will invest the 11-figure sum via “direct equity and venture capital investments” in companies operating across four key areas, which include: Supply Chain and Advanced Manufacturing Defense and Aerospace Energy Independence and Resilience Frontier and Strategic Technologies For quantum computing investors, it’s that last area—frontier and strategic technologies—that matters. Included in that grouping are companies in the AI, cybersecurity, and quantum computing space. “It has become painfully clear that the United States has allowed itself to become too reliant on unreliable sources of critical minerals, products, and manufacturing—all of which are essential for our national security,” JPMorganChase CEO Jamie Dimon said in a press release announcing the initiative. 
He added, “This new initiative includes efforts like ensuring reliable access to life-saving medicines and critical minerals, defending our nation, building energy systems to meet AI-driven demand, and advancing technologies like semiconductors and data centers. Our support of clients in these industries remains unwavering.” However, it is worth noting that Dimon did not specify which quantum computing companies would receive investments from the bank. In an accompanying chart, the bank merely said that strengthening capabilities in quantum computing and other areas, including AI and cybersecurity, “could directly translate into higher GDP and create military, intelligence, biotech, and cyber resilience benefits.” Yet despite not name-dropping any of the Quantum Four, their stocks surged. Quantum stocks soared by double digits In the United States, there are four prominent publicly traded quantum computing companies: D-Wave, IonQ, Quantum Computing, and Rigetti. All four companies saw their stock soar yesterday after JPMorganChase’s announcement. D-Wave Quantum (NYSE: QBTS): up 23% to $40.62 IonQ (NYSE: IONQ): up 16% to $82.09 Quantum Computing (Nasdaq: QUBT): up 12% to $21.46 Rigetti Computing (Nasdaq: RGTI): up 25% to $54.91 In addition to America’s Quantum Four, shares in the United Kingdom’s Arqit Quantum (Nasdaq: ARQQ) also jumped 20% to close at $58.27. Despite Monday’s price surges, all Quantum Four stocks and the U.K.’s Arqit are currently down in premarket trading on Tuesday morning, as of the time of this writing. The drops aren’t large: QBTS is down less than 3%, IONQ and QUBT are down around 4%, RGTI is down just over 3%, and ARQQ is down just under 3%. These modest declines suggest that some investors are engaging in profit-taking after yesterday’s share price surge. 
Still, many quantum investors are likely buoyed by the notion that one of America’s biggest investment firms thinks quantum computing will be critical to national security in the years ahead. If that conjecture is correct, companies operating in those spaces have a lot to gain. Shares in the Quantum Four have had a great year While the real-world benefits of quantum computing, which uses the properties of quantum mechanics to solve computational problems that classical computers couldn’t hope to, are likely still years away, the companies operating in the nascent space have seen tremendous returns over the past year. When it comes to the Quantum Four, all have had incredible returns both year-to-date (YTD) and over the past twelve months (12/m), as of yesterday’s stock market close: D-Wave Quantum (NYSE: QBTS): up 383% YTD, up 4,087% (12/m) IonQ (NYSE: IONQ): up 96% YTD, up 670% (12/m) Quantum Computing (Nasdaq: QUBT): up 29% YTD, up 3,001% (12/m) Rigetti Computing (Nasdaq: RGTI): up 259% YTD, up 6,629% (12/m) These are gains that many investors are hoping will continue well into the future. View the full article

-

IBM and Anthropic Team Up to Revolutionize Enterprise Software Development

IBM’s recent partnership with Anthropic marks a significant milestone for small businesses aiming to streamline their software development processes. By integrating Anthropic’s advanced AI model, Claude, into its suite of tools, IBM aims to deliver enhanced productivity, security, and governance within enterprise environments. This strategic alliance is particularly beneficial for small business owners looking for ways to leverage technology in their operations. With productivity gains averaging an impressive 45% reported by over 6,000 early adopters using IBM’s new AI-first integrated development environment (IDE), the potential for cost savings while maintaining high standards of code quality is a compelling reason to consider these new tools. Dinesh Nirmal, Senior Vice President of Software at IBM, stated, “This partnership enhances our software portfolio with advanced AI capabilities while maintaining the governance, security, and reliability that our clients have come to expect.” The IDE is designed specifically for enterprise software development lifecycles (SDLC), facilitating tasks like application modernization, code generation, and intelligent code review. These capabilities can not only save time but also lessen the burden on smaller development teams with limited resources. As digital transformation accelerates, the needs of small businesses often mirror those of larger enterprises, particularly in terms of efficient software development and data management. Developers using the new IDE can expect features such as: Application Modernization at Scale: Support for automated system upgrades and multi-step refactoring can help small businesses transition from legacy systems to modern frameworks without extensive downtime. 
Intelligent Code Generation and Review: AI-assisted code generation tailored to enterprise architecture patterns ensures that security and compliance needs are met, which can be crucial for small businesses operating in regulated environments. Security-First Development: Integrating security measures directly into development workflows allows vulnerabilities to be addressed more quickly and supports compliance with industry standards, enhancing operational safety. End-to-End Orchestration: This capability coordinates tasks across the entire software lifecycle, from development through testing to maintenance, making it easier for small businesses to manage complex projects. Moreover, the partnership extends beyond the tools themselves. IBM has introduced a guide for enterprises on architecting secure AI agents, emphasizing a structured approach to managing AI within business contexts. This guide aims to make AI applications more reliable and safe, aspects that many small business owners find critical when adopting new technologies. However, while the advantages are compelling, small business owners should remain aware of certain challenges. Transitioning to AI-enhanced tools may require initial investment in training and may cause disruptions during onboarding. Ensuring that existing systems integrate smoothly with new AI solutions is paramount, particularly for those operating within tight budgets or facing resource constraints. Another consideration is the security aspect of deploying advanced AI tools. Small businesses must remain vigilant regarding data protection and compliance with regulations, challenges that could be heightened with the introduction of new technologies. 
As Mike Krieger, Chief Product Officer at Anthropic, noted, “Enterprises are looking for AI they can actually trust with their code, their data, and their day-to-day operations.” Ultimately, the partnership between IBM and Anthropic signifies a shift toward more accessible, secure, and efficient software development tools that can directly benefit small businesses. As the marketplace evolves, utilizing enhanced AI capabilities not only helps in modernizing operations but can also offer a competitive advantage. For small business owners eager to explore what AI can bring to their operations, this collaboration could pave the way for transformative growth. For further details about this collaboration and its implications for enterprise software development, you can access the full press release at IBM’s newsroom here. This article, "IBM and Anthropic Team Up to Revolutionize Enterprise Software Development" was first published on Small Business Trends View the full article

-


Credit Suisse bondholder wipeout was unlawful, court rules

Watchdog did not have proper basis for decision to write down SFr16.5bn of AT1 debt as part of rescue deal, judges find. View the full article

-

Get Your Ad Campaigns Ready Before Black Friday via @sejournal, @brookeosmundson

Set your Black Friday PPC campaigns up for success by tightening budgets, optimizing feeds, guiding automation, and building safeguards before peak shopping hits. The post Get Your Ad Campaigns Ready Before Black Friday appeared first on Search Engine Journal. View the full article

-

How to know if your GEO is working

Let’s get one thing straight before the industry turns “GEO” into yet another three-letter source of confusion. Generative engine optimization isn’t SEO with a new hat and a LinkedIn carousel. It’s a fundamentally different game. If you’re still debating whether to swap the “S” for a “G,” you’ve already missed the point. At its core, GEO is brand marketing expressed through generative interfaces. Treat it like a technical tweak, and you’ll get technical-tweak results: plenty of noise, very little growth. CMOs, this is where you step in. SEOs, this is where you either evolve or get automated into irrelevance. The question isn’t what GEO is – that’s been done to death. It’s how to tell if your GEO is actually working. The North Star: Share of search (not ‘share of voice,’ not ‘topical authority’) The primary metric for GEO is the same one that should already anchor any brand-led growth program: share of search. Les Binet didn’t coin a vanity metric for dashboards. Share of search is a leading indicator of future market share because it reflects relative demand – your brand versus competitors. If your share is rising, someone else’s is falling, and the future tilts your way. If it’s declining, you’re mortgaging tomorrow’s revenue. That’s the unglamorous magic of it. It isn’t perfect. But across category after category, share of search predicts brand outcomes with a level of accuracy that should make “awards case studies” blush. And yes, GEO affects it, often through PR. When an LLM recommends your brand (linked or not), some users still open a new tab and Google you. Recommendation sparks curiosity. Curiosity drives search. Search is the signal. Expect branded search volume to rise as generative usage grows, because people back-check what they see in AI results. It’s messy human behavior, but it’s consistent. Your first diagnostic: plot your brand’s share of search against your closest competitors. 
Use Google Trends or My Telescope for branded demand, and triangulate with Semrush. Watch the trend, not the weekly wobbles. And do not confuse share of search with share of voice. Different metric. Different lineage. Different purpose. Dig deeper: From search to answer engines: How to optimize for the next era of discovery The two halves of the signal: Brand demand and buyer intent Share of search has two practical layers for GEO diagnostics: Brand search: The purest signal of salience. Are more people looking for you than last quarter, relative to the category? That’s how you know your brand availability is increasing inside generative engines and the culture around them. Buyer-intent traffic: The money end. Of your non-branded search clicks, how much is clearly commercial or buyer-intent versus informational fluff? And how does your share of that buyer-intent traffic compare to competitors? You won’t know a rival’s exact click-through rates – and you don’t need to. Use Semrush to estimate non-branded commercial demand at the topic level for you and them, then compare proportions. Cross-reference with your own Google Search Console (GSC) data. Export everything and segment aggressively by intent. Where tool estimates diverge from your actuals, you’ll learn something about the noise in third-party data and the real shape of your market. If your brand search is flat but buyer-intent share is rising, congratulations – you’re harvesting demand but not creating enough of it. If brand search is rising but buyer-intent share isn’t, you have a conversion or content problem – your GEO is sparking curiosity, but your site and assets aren’t turning that into qualified traffic. If both are up, pour fuel. If both are down, stop fiddling with prompts and fix your positioning, advertising, and PR. 
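The arithmetic behind that first diagnostic is small enough to sketch. The snippet below is a rough illustration, not a standard tool: the function names, the brand labels, and the volumes are invented, and it simplifies "flat" and "falling" into a single "not rising" case, which is an assumption layered on top of the four scenarios above.

```python
def share_of_search(volumes: dict[str, float]) -> dict[str, float]:
    """Each brand's branded-search volume as a fraction of the category total."""
    total = sum(volumes.values())
    if total == 0:
        raise ValueError("no search volume to share out")
    return {brand: volume / total for brand, volume in volumes.items()}


def diagnose(brand_search_change: float, buyer_intent_change: float) -> str:
    """Map the direction of the two signals to the four cases in the text.

    Inputs are period-over-period changes in share; anything <= 0 is treated
    as "not rising" (a simplifying assumption).
    """
    brand_up = brand_search_change > 0
    intent_up = buyer_intent_change > 0
    if brand_up and intent_up:
        return "both rising: pour fuel"
    if intent_up:
        return "harvesting demand, not creating enough of it"
    if brand_up:
        return "curiosity without qualified traffic: conversion or content problem"
    return "neither rising: fix positioning, advertising, and PR"


if __name__ == "__main__":
    # Hypothetical monthly branded-search volumes for you and two rivals.
    shares = share_of_search({"you": 300, "rival_a": 500, "rival_b": 200})
    print({brand: round(share, 2) for brand, share in shares.items()})
    print(diagnose(brand_search_change=0.0, buyer_intent_change=0.02))
```

Run it on a trailing window rather than a single week, so the weekly wobbles do not flip the diagnosis.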
Dig deeper: Fame engineering: The key to generative engine optimization Category entry points: The prompts behind the prompts GEO lives or dies on category entry points (CEPs) – Ehrenberg-Bass’ useful term for the situations, needs, and triggers that put buyers into the category. CEPs are how real people think. “I just left the gym and I’m thirsty.” That’s why there’s a Coke fridge by the exit. “I’ve just come out of a show near Covent Garden and need food now.” That’s why certain restaurants cluster and advertise there. These are not keywords. They’re human contexts that later materialize as words. Translating that to GEO: your customers’ prompts in ChatGPT, Gemini, Perplexity, and AI Mode reflect their CEPs. Newly appointed marketing manager under pressure to fix organic? That’s a CEP. Fed up with a current tool because the price doubled and support disappeared? Another CEP. Map the CEPs first, then outline the prompt families that those CEPs produce. The wording will vary, but the thematic spine stays consistent: a role, a pain, a job to be done, a timeframe. Once you’ve mapped CEPs to prompt families, you can evaluate your prompt visibility – how often and in what context generative engines surface you as a credible option. This is a brand job as much as a content job. LLMs don’t “decide” like humans. They triangulate across signals and citations to reduce uncertainty. Distinctive brand assets, third-party coverage (PR), credible reviews, and consistent evidence of capability all raise your odds of being recommended. Notice I didn’t say “more blog posts.” We’ll come back to that. Measure prompt visibility, then validate in GSC Once you’ve outlined your prompt families, test visibility systematically. Run qualitative checks in the major models. Log the sources they cite and the types of evidence they appear to weight. 
Are you visible when the CEP is “newly promoted CMO, six-month plan to grow organic pipeline”? Are you visible when it’s “VP of ecommerce losing non-brand traffic to marketplace competitors, needs an alternative”? If you’re absent, don’t complain about model bias – earn your spot with PR, credible case studies, and assets that reinforce what the engines are trying to prove about you. Next, switch to the quantitative side. In GSC, build regex filters for conversational queries – the long, natural-language strings (4 to 10 words, often more) that resemble prompts with the serial numbers filed off. We don’t yet know how much of this traffic comes from bots, LLM scaffolding, or humans typing into AI-powered SERPs, but we do know it’s there. Track impressions, clicks, and the proportion that are clearly buyer-intent versus informational. If your conversational query clicks are growing and skewing commercial, that’s a strong signal your GEO is turning curiosity into consideration. The two-second rule: Why informational content won’t save you Here’s a hard truth for the SEO content mills: informational traffic is about to become even less valuable. Most AI citations offer only fleeting exposure. Brand recall takes more than a glance – in both lab and field data, you get roughly two seconds of attention to make anything stick. Most sidebar mentions and AI Overview snippets don’t deliver that, and the memory fades fast anyway. If your GSC export shows that 70% or more of your clicks come from “how-to” mush with no buyer intent, your GEO isn’t working. It’s subsidizing the LLMs that will summarize you out of existence. Fix the mix – shift your asset portfolio toward category entry points that actually precede purchase. Dig deeper: Revisiting ‘useful content’ in the age of AI-dominated search A simple GEO scoreboard for grown-ups Here’s your weekly CMO/SEO standup. Four lines, no fluff. 1. 
Share of search (brand) Your brand’s share versus your top three competitors, trended over 13 weeks. Up is good. Flat is a warning. Down means it’s time to get comms and PR moving. 2. Share of buyer-intent traffic Your estimated share of non-brand commercial clicks versus competitors (from tool triangulation), plus your actual buyer-intent clicks from GSC. The gap between the two is your reality check. 3. Prompt visibility index For each priority CEP, how often are you recommended by major models, and with what supporting evidence? Track monthly. Celebrate gains. Fix absences with PR and proof. 4. Conversational query conversion Impressions and clicks on 4–10+ word natural-language queries, segmented by intent. Are the commercial ones rising as a share of total? If not, your GEO is a content cost center, not a growth driver. How to read the scoreboard If those four lines are improving together, your GEO is working. If only one is improving, you’re playing tactics without strategy. If none are improving, stop thinking you can “Wikipedia” your way to growth with topical-authority fluff. The levers that actually move GEO What moves the dial? Not more “SEO content.” GEO responds to the levers of brand availability: PR that builds credible third-party evidence: Reviews, analyst notes, earned features, and founder or expert commentary with substance. LLMs love corroboration. Distinctive assets used consistently: Names, taglines, proof points, tone. Engines triangulate. Recognizable signals reduce ambiguity. Customer-centered case studies: Framed around CEPs, not your product roadmap. “Marketing manager replaces X to cut acquisition costs in 90 days” beats “New feature launch.” Tighter copy: Precise, functional language matched to CEPs and prompt families. Kill the poetry. Experience signals: Your site must resolve buyer intent fast. The conversation from AI should land on pages that continue – not restart – the dialogue. 
Content still matters, but only as support for these levers. Most of your old blog inventory was never going to build memory or distinctiveness, and in an AI-summarized world, it certainly won’t. Scrap the vanity spreadsheets. Build assets that make both engines and humans more certain you’re the right choice in buying situations. Yes, content marketing is back in a big way – but that’s another article. GEO isn’t just SEO When AI modes become the default interaction layer, and they will – whether through chat, answers, or blended SERPs – the game rewards brands that are easy for machines to recommend in buying moments. That is GEO’s beating heart: increasing AI availability. Think of it like free paid search. If you’re still obsessing over informational traffic and topical hamster wheels, you’ll be caught with the lights on and no clothes. Some of you already are. SEOs who make the leap become organic-search strategists. You’ll speak CEPs, buyer intent, and brand effects. You’ll partner with PR, product marketing, and sales enablement. You’ll still use the tools – Semrush and GSC – but you’ll use them to evidence strategy, not to justify content churn. The rest of you? You’ll be replaced by an agentic workflow that writes better filler faster than you ever could. The humbling truth about GEO Marketing rewards humility. You are not the consumer, and you are certainly not the model. Stop guessing. Measure the four lines. Map the category entry points. Build the assets that make you easy to recommend. Cross-reference tool estimates with your own data and let the differences teach you. GEO isn’t mystical – it’s brand marketing meeting machine mediation. So, how do you know if your GEO is working? Your share of search rises. Your share of buyer-intent traffic rises. Your prompt visibility expands across the CEPs that actually precede purchase. Your conversational queries convert at a higher rate. Everything else is noise. 
Ignore the noise, fix the fundamentals, and remember the only mantra that matters in this brave, generative world: Be recommended by AI, when it matters and not when it doesn’t. Dig deeper: SEO in the age of AI: Becoming the trusted answer View the full article
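For the conversational-query line of the scoreboard, a word-count regex is one plausible starting point. The sketch below runs the check offline on exported rows rather than inside GSC itself. Everything specific in it is an assumption for illustration: the `query` and `clicks` field names, the four-word threshold as the cut-off, and the short list of buyer-intent hint words, which you would replace with terms from your own category.

```python
import re

# Four or more whitespace-separated words counts as "conversational" here.
# The pattern avoids lookarounds so it should also be usable in RE2-style filters.
CONVERSATIONAL = re.compile(r"^\S+(?:\s+\S+){3,}$")

# Hypothetical buyer-intent hints; tune these to your own market.
BUYER_HINTS = re.compile(
    r"\b(best|pricing|price|alternative|alternatives|vs|buy|compare)\b",
    re.IGNORECASE,
)


def summarize(rows: list[dict]) -> dict:
    """Summarize clicks on conversational queries and their buyer-intent share."""
    convo = [r for r in rows if CONVERSATIONAL.match(r["query"].strip())]
    total_clicks = sum(r["clicks"] for r in convo)
    buyer_clicks = sum(r["clicks"] for r in convo if BUYER_HINTS.search(r["query"]))
    return {
        "conversational_queries": len(convo),
        "clicks": total_clicks,
        "buyer_intent_clicks": buyer_clicks,
        "buyer_intent_share": buyer_clicks / total_clicks if total_clicks else 0.0,
    }


if __name__ == "__main__":
    # Made-up rows standing in for a Search Console export.
    sample = [
        {"query": "seo tools", "clicks": 50},
        {"query": "best seo tool for small ecommerce team", "clicks": 30},
        {"query": "how does share of search work", "clicks": 10},
        {"query": "alternative to our current rank tracker", "clicks": 20},
    ]
    print(summarize(sample))
```

A keyword list will misclassify some queries, so treat the share as a trend to watch month over month, not as a precise figure.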

-

Owl Labs + Lenovo collaborate to launch AI-powered 360-degree Microsoft Teams Rooms Solutions globally

Owl Labs, a leader in 360-degree AI-powered video conferencing and hybrid collaboration technology, today announced a collaboration with Lenovo, a global technology leader, to launch Lenovo-powered 360° Microsoft Teams Rooms (MTR-W) solutions. The collaboration combines Owl Labs' award-winning Meeting Owl 3 and 4+ products, used by nearly 250,000 organizations globally, with Lenovo’s cutting-edge ThinkSmart Core and Tiny Kit to create full-room solutions that are flexible and cost-effective and that meet the needs of both SMB and enterprise customers. View the full article

-

The '123' Method Can Help You Better Recall What You've Studied

Studying is about so much more than just rereading some chapters and notes. That said, while it's a good idea to have a strategy for actually retaining what you’re going over, if your method is too convoluted, you’ll never stick to it, and then it's just as useless as mindlessly rereading the same section five times. The best study methods not only rely on research and established understandings of how memory works, but are easy to incorporate in a practical way. The "123" method meets all the criteria of a good study method.

What is the 123 study method?

The 123 study method is a lot like the 2357 method, except it’s much easier to stay on top of and actually execute. With 2357, you review and revise your notes and materials on days two, three, five, and seven after first learning them, which is a tricky schedule to remember and maintain. You can and should, however, call on a study-scheduling app, like My Study Life, to help you with this and other time-based academic tasks. The 123 method is simpler: On day one, you learn your material. On day two, you review it. Review it again on day three, then don’t think about it for a week, at which point you'll review it again. Again, use of a planner, calendar, or scheduler is encouraged here. These techniques are useful, but only if you actually execute and stick to them, so don't be afraid to get a little boost from an app or even your phone's built-in "reminders" function.

Why the 123 study method works

The 123 method relies on distributed practice, which calls for you to review your materials at spaced intervals to better retain them in your long-term memory. That's a technique that works fabulously, but often, adherents expect you to distribute the practice in ways that are difficult to manage. 
By going over it for three days, then giving your brain a week and seeing how much you retained, you can fit distributed practice into your life a lot more easily than if you follow some elaborate, torturous schedule of off days and on days. This method is best done about 10 days out from a big test, so you can study and review on those first three days, then once more the day before the test. How you review is up to you, but you can try flashcards, which help you with memory retrieval, or blurting, which helps you identify your problem areas by forcing you to recall as much information as you can without looking at your notes. Whatever method you choose for the actual review, try to make sure it incorporates some element of active recall, or the act of forcing yourself to pull key information from your memory. Just know that the one-week interval between reviews is key. When your brain has almost forgotten something, it works a little harder to pull the information out of your memory, which is what will truly help get the facts to stick before your big test. View the full article
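
If you would rather compute the dates than count them on a calendar, the schedule described above can be sketched in a few lines of Python. This is only an illustration: the function names `schedule_123` and `schedule_for_test` are made up for this example, and it assumes that "a week" after the day-three review means day ten, and that "about 10 days out" means starting exactly 10 days before the test so the last review falls on the day before it.

```python
from datetime import date, timedelta

# Day offsets from the first study day (assumed reading of the method):
# day 1 learn, day 2 review, day 3 review, then one week later (day 10) review.
OFFSETS_123 = [("learn", 0), ("review", 1), ("review", 2), ("review", 9)]


def schedule_123(start: date) -> list[tuple[str, date]]:
    """Return (task, date) pairs for a 123 schedule beginning on `start`."""
    return [(task, start + timedelta(days=offset)) for task, offset in OFFSETS_123]


def schedule_for_test(test_day: date) -> list[tuple[str, date]]:
    """Work backward from a test date: start 10 days out (an assumption),
    which puts the final review on the day before the test."""
    return schedule_123(test_day - timedelta(days=10))


if __name__ == "__main__":
    for task, day in schedule_for_test(date(2025, 10, 25)):
        print(f"{day.isoformat()}: {task}")
```

The resulting dates can then be copied into whatever planner, calendar, or reminders app you already use.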

-

Flight delays today: Air travel headaches continue as government shutdown enters its third week

Headaches continued for U.S. travelers over the weekend as a combination of bad weather and impacts from the ongoing government shutdown ensnarled many would-be fliers. Flight delays and cancellations piled up over the three-day holiday period, with flight-tracking service FlightAware showing nearly 30,000 delays in and out of U.S. airports from Sunday of last week through Monday. Here’s the latest on the situation at U.S. airports and what travelers need to know:

How bad have flight delays been?

Delays and cancellations at many airports have grown progressively worse since the U.S. government shut down on October 1. With no end in sight to the political impasse in Washington that brought us here, the shutdown will enter its third week tomorrow. FlightAware data shows there were 7,928 delays in and out of U.S. airports yesterday, along with 592 cancellations. Saturday and Sunday were roughly the same, with 5,007 delays and 114 cancellations on Saturday and 7,981 delays and 271 cancellations on Sunday. Airlines for America, a trade group representing U.S. airlines, had warned before the weekend that shutdown-related shortages in air traffic controllers could create travel headaches at a number of airports, although the group insisted that flying remains safe, as CNN reported. Bad weather, including a nor’easter that made its way up the East Coast, contributed to the chaos, causing delays at Northeast airports including New York’s John F. Kennedy International Airport and Newark International Airport in New Jersey.

Will delays continue this week?

As of early Tuesday morning, FlightAware data showed significantly fewer delays and cancellations for today (499 and 26, respectively), but the number was rising rapidly and only time will tell what the future has in store. (We’ll update this post later today with the most recent numbers.) Meanwhile, Republicans and Democrats on Capitol Hill remain deadlocked over key sticking points.
Most crucially, Democrats want to extend Affordable Care Act (ACA) tax credits that are set to expire this year. According to estimates from the Kaiser Family Foundation (KFF), the loss of the credits would lead to significantly higher healthcare premiums for millions of Americans. View the full article

-

Google: Ads Coming Soon To AI Mode In EU

A report from a Google event named Google Access has a Googler saying that ads are coming to AI Mode very soon in the EU. The thing is, ads in AI Mode, even in the US, are just a test and are not fully live. View the full article

-

Russia accuses Mikhail Khodorkovsky of plotting coup

FSB launches fresh criminal case against former Yukos oil magnate and 22 other dissidents in exile. View the full article

-

JPMorgan gets Wall Street lift, warns of economy 'softening'

The $4.6 trillion-asset company's report comes after it committed to funneling $1.5 trillion into industries it said were important to national security. View the full article

-

Israel kills several Palestinians in Gaza strikes

Attack on Gazans inspecting homes comes days after fragile ceasefire goes into effect View the full article

-

Google Merchant Center Clarifies Misrepresentation Policies

Google has updated its Misrepresentation policy for both Shopping ads and free listings within Google Merchant Center. Google said the "update provides additional examples of what the policy covers, more guidance on how to be compliant, and more information about our appeals process." View the full article

-

New Google Ads Optimization Insights Recommendations

Google is testing a new optimization insights layer, or recommendation interface, for Google Ads. It has three columns named (1) build a foundation, (2) drive performance, and (3) expand and grow. View the full article

-

Google Hiring Google Discover User Generated Content Engineer

Google is looking to hire a software engineer responsible for improving the quality of User Generated Content (UGC) in Google Discover. View the full article

-

US-China déjà vu all over again

The President’s latest piece of brinkmanship is likely to result in another climbdown. View the full article